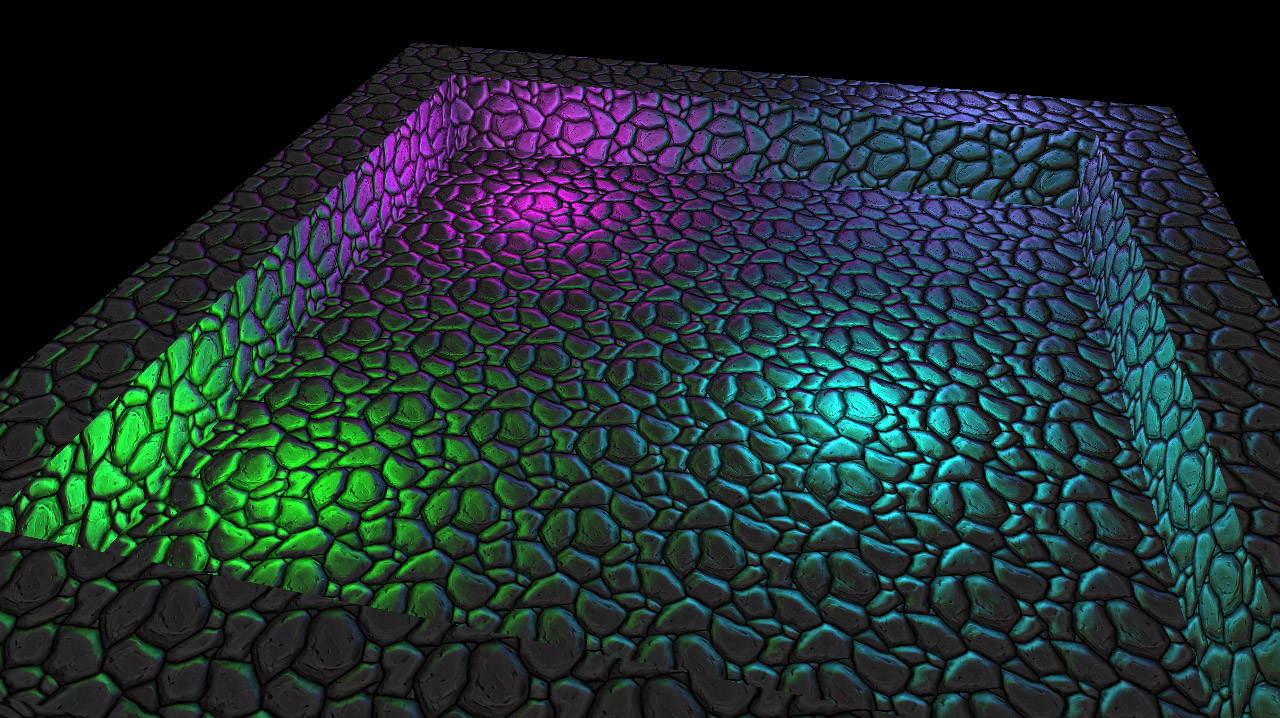

Remember when I posted that I had figured out bump mapping? Yeah, I was wrong. The sphere was normal mapped correctly only when the light was at a certain position.

I looked up the problem on Google and struggled for 3 days to get the thing working. Basically, I needed to convert the light and eye positions to texture space (also called tangent space), then do the lighting calculations there.

To do that, I needed to calculate the 3x3 TBN (tangent, binormal, normal) matrix and multiply lightPos and eyePos by it.
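For anyone who wants to see that step spelled out, here is a minimal sketch in plain Python (not the actual shader code). The helper names and the choice of binormal = cross(normal, tangent) are assumptions for illustration; some engines flip that sign or store a handedness flag, and the sketch also assumes the tangent is perpendicular to the normal.

```python
import math

def normalize(v):
    # Scale a 3D vector to unit length.
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def cross(a, b):
    # Standard 3D cross product.
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def to_tangent_space(vec, tangent, normal):
    # Build the rows of the TBN matrix from unit-length T and N.
    n = normalize(normal)
    t = normalize(tangent)
    b = cross(n, t)  # assumed handedness; may need a sign flip
    # Multiplying the 3x3 matrix with rows T, B, N by vec is three dot products.
    return (dot(t, vec), dot(b, vec), dot(n, vec))
```

With this, a vector pointing straight along the surface normal always ends up on the third (normal) axis in texture space, no matter how the surface is oriented or how long the incoming tangent was.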

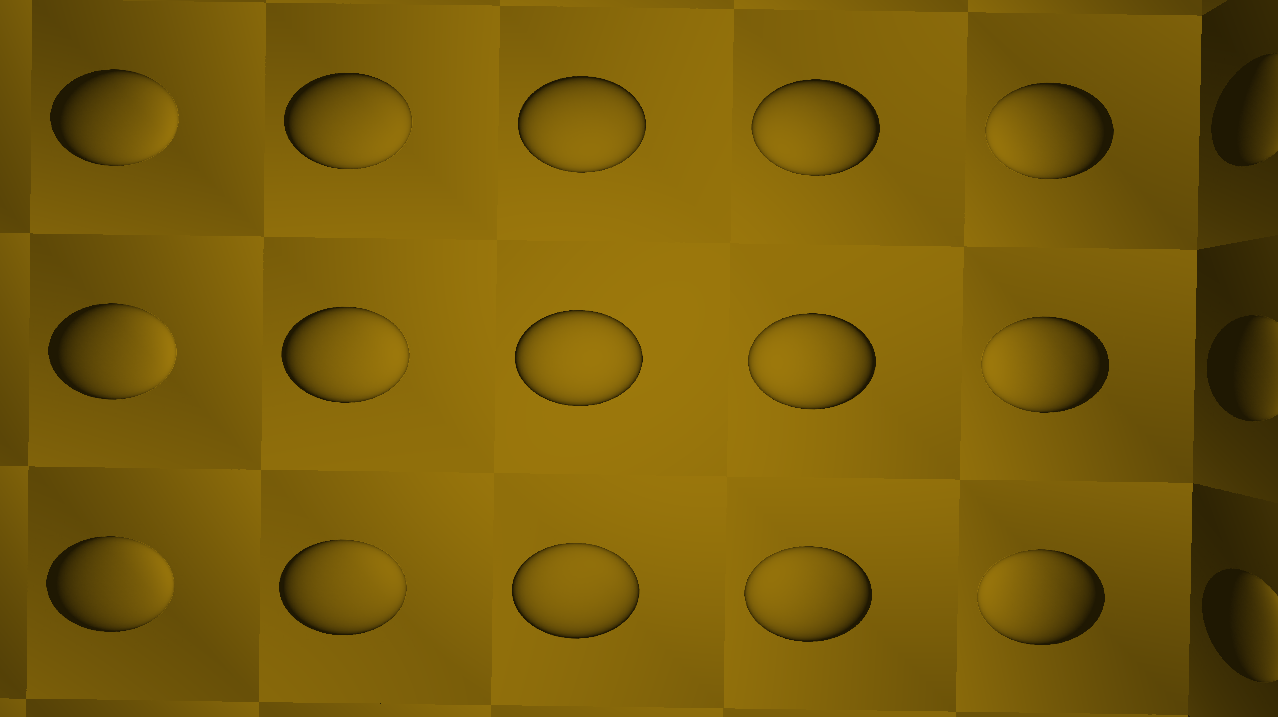

I had a lot of problems along the way, like some sort of “gradient” on the rendered faces (pictured above).

I was trying to pull off some sick math to remove it, but the next day I deleted the code so I could start over.

I looked at the code for a bit, and then spontaneously wrapped the tangent vector in a normalize() call. It worked. HALLELUJAH!
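One plausible reason normalize() matters here (a guess, not a confirmed diagnosis of the gradient): when unit-length tangents are linearly interpolated across a face, the in-between vectors come out shorter than 1, so anything multiplied by them gets scaled unevenly. A small Python sketch with made-up tangent values shows the effect:

```python
import math

def length(v):
    return math.sqrt(sum(c * c for c in v))

def normalize(v):
    l = length(v)
    return tuple(c / l for c in v)

def lerp(a, b, t):
    # Linear interpolation, like what happens to per-vertex values across a face.
    return tuple(x + (y - x) * t for x, y in zip(a, b))

# Two hypothetical unit tangents at neighbouring vertices.
t0 = (1.0, 0.0, 0.0)
t1 = (0.0, 1.0, 0.0)

halfway = lerp(t0, t1, 0.5)           # (0.5, 0.5, 0.0)
raw_length = length(halfway)          # about 0.707, no longer unit length
fixed_length = length(normalize(halfway))  # back to about 1.0
```

The interpolated tangent shrinks toward the middle of the face and is full length at the vertices, which is the kind of smooth variation that could show up as a gradient in the lighting.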

If anyone has problems with normal mapping in the future, PM me; I think I can point you in the right direction.

Here is the awesome article that made everything clear: http://http.developer.nvidia.com/CgTutorial/cg_tutorial_chapter08.html