I hope I don’t have to break my wallet buying a computer to play these games.

But is it real?

In an interview after the first video, the creator did say they compress voxel data on the fly, and I also get the strong impression there are only around a dozen unique objects in the new video. So the memory usage could be acceptable for that particular demo.
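
If the demo really reuses around a dozen unique objects, the memory cost is dominated by those objects, not by how many times they are placed. A back-of-the-envelope sketch, assuming (hypothetically) 15 bytes per stored point (three 32-bit float coordinates plus 3 bytes of RGB) and 20 bytes per placed instance (a model index, a position and a scale); none of these figures come from the demo:

```python
# Rough memory estimate for an instanced scene.
# Assumed sizes (hypothetical, not from the demo): 15 bytes per point
# (3 x 32-bit float position + 3 bytes RGB) and 20 bytes per instance record
# (4-byte model index + 3 x 32-bit float position + 32-bit float scale).
BYTES_PER_POINT = 15
BYTES_PER_INSTANCE = 20


def instanced_bytes(points_per_model: int, num_models: int, num_instances: int) -> int:
    """Each unique model is stored once; every placement adds only a small record."""
    return points_per_model * num_models * BYTES_PER_POINT + num_instances * BYTES_PER_INSTANCE


def copied_bytes(points_per_model: int, num_instances: int) -> int:
    """The same scene if every placement stored its own copy of the points."""
    return points_per_model * num_instances * BYTES_PER_POINT


if __name__ == "__main__":
    # 12 unique models of one million points each, placed 100,000 times.
    print(instanced_bytes(1_000_000, 12, 100_000))  # 182000000 (~182 MB)
    print(copied_bytes(1_000_000, 100_000))  # 1500000000000 (~1.5 TB)
```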

They claim that you need to understand the games industry today; I actually don’t think they understand it, and this comes across as a very 90s way of thinking about game technology. The polygon count has barely gone up over the last five years because companies care less about it now. Graphics card manufacturers have focused on shaders, GPGPU and other improvements. Geometry shaders also aim to solve (or at least mitigate) this issue by letting you generate more detail on the fly, and these will become the norm (along with other procedural techniques) in the future. For example, how clothes and skin move and reflect light matters more than their polygon count.
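
Geometry (and later tessellation) shaders amplify geometry on the GPU at draw time. The idea can be sketched on the CPU; this is only an analogy in Python, not shader code, and the 1-to-4 midpoint split is just one simple subdivision scheme:

```python
# CPU sketch of geometry amplification: each pass splits every triangle into
# four by connecting its edge midpoints (1-to-4 midpoint subdivision).


def midpoint(a, b):
    return tuple((p + q) / 2 for p, q in zip(a, b))


def subdivide(triangles, levels):
    """Return the triangle list after `levels` rounds of 1-to-4 subdivision."""
    for _ in range(levels):
        refined = []
        for a, b, c in triangles:
            ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
            refined += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
        triangles = refined
    return triangles


if __name__ == "__main__":
    base = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
    for n in range(4):
        # The triangle count grows as 4 ** n.
        print(n, len(subdivide(base, n)))
```

Subdivision alone keeps the surface flat; the extra detail comes from displacing the new vertices (for example by sampling a height map), which the GPU can do every frame without the extra triangles ever being stored.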

If/when they get an SDK out, or a working demo, it’ll be interesting to see how usable this is in the real world.

I’m with Notch; I will believe it when I see a game made with it.

Still no animations in their video…

No animations = useless. That’s the only point I see ;D

Not if you render to an image, and at the same time render the distance to a depth buffer.

Then pass the scene to DirectX or OpenGL for character handling.
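
The compositing step described above comes down to a per-pixel depth test. A minimal sketch, with flat lists standing in for images; in a real engine you would write the point-cloud depth into the GPU depth buffer and let OpenGL or DirectX do this test while rasterizing the characters, which also means converting distances into the depth buffer’s own (usually non-linear) encoding:

```python
# Per-pixel depth compositing of two separately rendered layers.
# Both depth lists are assumed to hold distances in the same units and space.
INF = float("inf")  # marks pixels where a layer drew nothing


def composite(scene_color, scene_depth, char_color, char_depth):
    """For every pixel, keep whichever layer is closer to the camera."""
    out = []
    for sc, sd, cc, cd in zip(scene_color, scene_depth, char_color, char_depth):
        out.append(cc if cd < sd else sc)
    return out


if __name__ == "__main__":
    scene_color = ["sky", "rock", "rock"]
    scene_depth = [INF, 5.0, 2.0]
    char_color = [None, "hero", "hero"]
    char_depth = [INF, 3.0, 4.0]
    # The hero only shows where it is in front of the rock.
    print(composite(scene_color, scene_depth, char_color, char_depth))  # ['sky', 'hero', 'rock']
```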

But I honestly don’t think animation would be much different from scene handling. When a bone moves, a new bounding box would need to be calculated, and when casting the ray in the call to render, you would need to do an extra calculation on the likely group of pixels.
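
Recomputing the box for a moving bone is cheap to express. A simplified sketch, assuming each bone just rotates its points about a pivot around the Z axis (a real skeleton would use full 4x4 bone matrices):

```python
import math

# Simplified bone update: rotate the bone's points about `pivot` around the Z
# axis, then recompute the axis-aligned bounding box (AABB) that rays test first.


def rotate_about_z(p, pivot, angle):
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = (p[i] - pivot[i] for i in range(3))
    return (c * x - s * y + pivot[0], s * x + c * y + pivot[1], z + pivot[2])


def bone_aabb(points, pivot, angle):
    """Return (min_corner, max_corner) of the bone's points after rotation."""
    moved = [rotate_about_z(p, pivot, angle) for p in points]
    lo = tuple(min(p[i] for p in moved) for i in range(3))
    hi = tuple(max(p[i] for p in moved) for i in range(3))
    return lo, hi


if __name__ == "__main__":
    forearm = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.2, 0.0)]
    print(bone_aabb(forearm, (0.0, 0.0, 0.0), math.pi / 2))
```

Only rays that hit the new box need the more expensive per-point test, which is the "extra calculation on the likely group of pixels".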

As for data storage: if all models are stored as cube maps, each with a second cube map for its depth, object geometry detail would depend on distance and not be noticeable to the eye. Photo-quality graphics.
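
Storing a model as a color cube map plus a depth cube map turns rendering into a lookup by direction. A minimal sketch of that lookup, assuming the OpenGL cube-map face convention and a hypothetical `depth_faces` dictionary of square per-face arrays; a full renderer would still need to reconstruct parallax between views, which this does not attempt:

```python
# Cube-map lookup: pick the face from the dominant axis of the direction, then
# project onto that face. Face/sign conventions follow the OpenGL cube-map
# table; the `depth_faces` layout (face name -> rows of depths) is hypothetical.


def cube_face_uv(d):
    """Map a non-zero direction (x, y, z) to (face, u, v) with u, v in [0, 1]."""
    x, y, z = d
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        face, sc, tc, ma = ("+X", -z, -y, ax) if x > 0 else ("-X", z, -y, ax)
    elif ay >= az:
        face, sc, tc, ma = ("+Y", x, z, ay) if y > 0 else ("-Y", x, -z, ay)
    else:
        face, sc, tc, ma = ("+Z", x, -y, az) if z > 0 else ("-Z", -x, -y, az)
    return face, (sc / ma + 1) / 2, (tc / ma + 1) / 2


def sample_depth(depth_faces, d):
    """Nearest-texel fetch of the stored depth in direction `d`."""
    face, u, v = cube_face_uv(d)
    size = len(depth_faces[face])
    i = min(int(u * size), size - 1)  # column
    j = min(int(v * size), size - 1)  # row
    return depth_faces[face][j][i]


if __name__ == "__main__":
    faces = {"+X": [[1.0, 2.0], [3.0, 4.0]]}
    print(cube_face_uv((1.0, 0.5, 0.0)), sample_depth(faces, (1.0, 0.5, 0.0)))
```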

Get ready for a Porno Industry revolution.

Although I share a few of Markus/Notch’s concerns, I do think he’s jumping the gun by blatantly calling this a scam, based on little more than assumptions and oversimplified calculations. A “wait and see” attitude would suit him better, IMHO.

I don’t really like the hyperbole in those videos either, but I’m still interested in seeing where this is going. Although I’ve seen voxel engines that look better, they might be on to something that actually is useful, even if it will not completely revolutionise the industry.

There are other examples of fairly developed voxel engines or prototypes aside from what’s in the OP’s video. If you poke around, there are at least 5-10; I found them within minutes.

Here is just 1

specifically

I honestly think the debate shouldn’t even be voxel vs. polygon; once voxels are a little more polished and developed, it should be about a hybrid model of polygons and voxels.

To me it’s like 2D vs. 3D, where we then see 2.5D games, or 3D worlds rendered in 2D. You use what works for your particular application, even if it involves both systems, in the same way that many game engines nowadays use two, three or more programming languages.

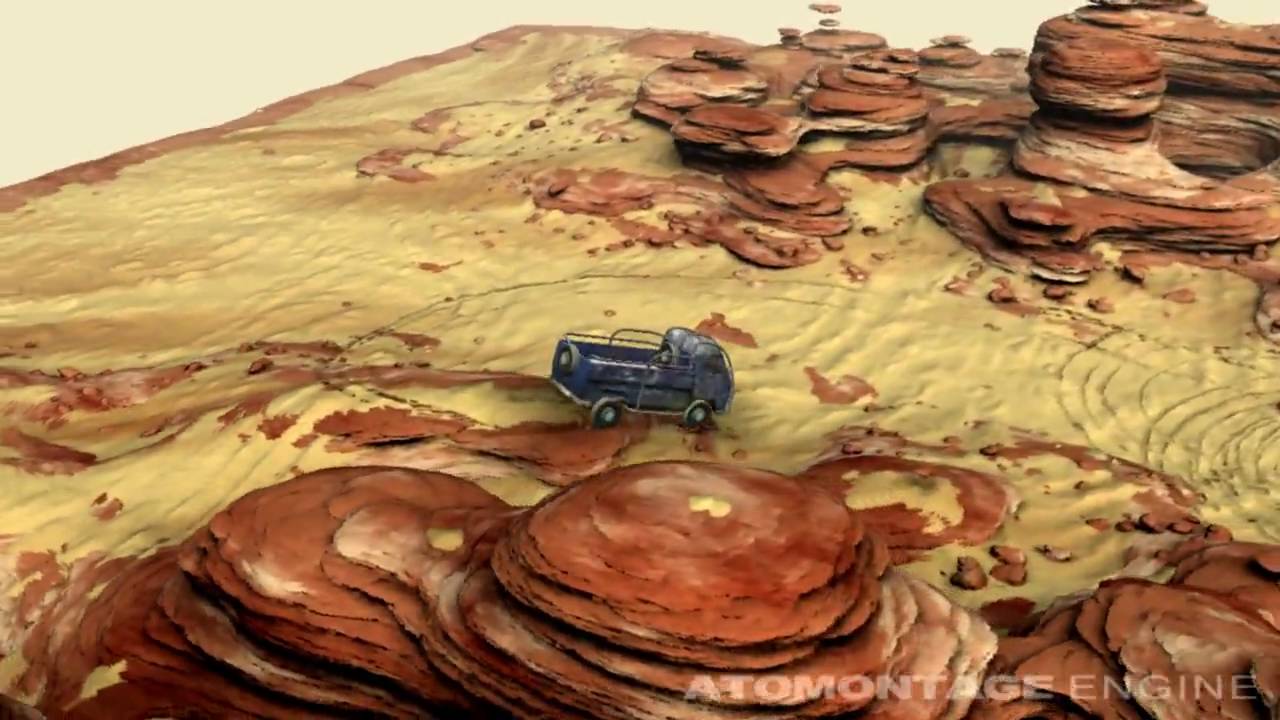

I think voxels serve a great purpose for terrain and other applications, but as others have pointed out, a character model that moves a lot would probably be better off as polygons. As shown with Atomontage, you can have both in the same engine. So for things that require a lot of dynamic movement and can easily be handled with polygons, use polygons.

You could even have some really advanced hybrid system that substitutes back and forth between polygon/voxel depending on level of detail and view distance.

For example, all models could be polygons, but if you zoom into a particular part of something, it would switch seamlessly (and unnoticeably) to a more detailed voxel representation.
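
Deciding when to switch can be sketched as a distance test with two thresholds, so the model does not flicker between representations when the camera hovers around a single cut-off. A minimal sketch; the `Rep` type and the threshold values are made up for illustration:

```python
from enum import Enum

# Distance-based switching between two representations of the same model.
# Two thresholds (hysteresis) prevent rapid back-and-forth swapping.


class Rep(Enum):
    POLYGON = "polygon"
    VOXEL = "voxel"


def choose_rep(current, distance, near=10.0, far=12.0):
    """Go to voxels below `near`, back to polygons above `far`, else keep `current`."""
    if current is Rep.POLYGON and distance < near:
        return Rep.VOXEL
    if current is Rep.VOXEL and distance > far:
        return Rep.POLYGON
    return current


if __name__ == "__main__":
    rep = Rep.POLYGON
    for d in [15.0, 11.0, 9.0, 11.0, 13.0]:
        rep = choose_rep(rep, d)
        print(d, rep.value)
```

Making the swap truly unnoticeable would also need a short cross-fade or morph between the two representations; the sketch only decides which one to draw.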

Edit:

Although it’s not being released this year or even next, I think it’s sometimes overlooked that, sure, you may not be able to edit voxels or polygons in certain numbers (billions or whatever the number is) today, but technology that needs more power still has to be developed before we have the power to fully use it. So far we have seen incremental increases in computing performance. There are a lot of really neat technologies in research, some potentially close to commercialization, that won’t just increase your processor’s power by a double-digit percentage but could increase it exponentially, by factors in the thousands.

I agree.

Now, Notch criticizes the developers for hyping their product and not talking about what it cannot do, and says it isn’t revolutionary.

A company will hype a product so people know about it, and will only talk about what it does very well; that’s business, and obvious.

I think this whole thing could be one major step towards this kind of graphics.

It gets developed and refined; someone else funds them or buys it and improves it.

Then imagine id thinks it’s interesting, invests, and so on and so forth. Then in 3-4 years you have something that actually works beautifully for games.

Also a note: there is a lot of data, which is one of the main concerns. Well, many people know that “soon” almost all games will be cloud-based, like the OnLive service/console. At that point, this concern will be even less critical.

It’s not a SCAM, it’s a STEP, into the future. Nobody said we were already there with this product.

I don’t agree on this point… It only does static backgrounds, which is a big regression. When grass and trees don’t move, it feels sooo dead.

I doubt animation is as simple as recalculating a bounding box when a bone moves. You have to interpolate the points that are shared by several bones, otherwise your ray casting will go astray.
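
In polygon engines, the usual answer for points shared by several bones is linear blend skinning. A minimal sketch, using plain translations as stand-in bone transforms (the `translate` helper is hypothetical); whether this maps cleanly onto their point data is an open question, and every moved point also invalidates whatever acceleration structure the ray casting relies on:

```python
# Linear blend skinning (LBS): a point influenced by several bones is moved by
# each bone's transform, and the results are averaged using the point's weights.


def skin_point(p, influences):
    """`influences` is a list of (weight, transform); transforms map 3D -> 3D."""
    total = sum(w for w, _ in influences)
    out = [0.0, 0.0, 0.0]
    for w, transform in influences:
        q = transform(p)
        for i in range(3):
            out[i] += (w / total) * q[i]
    return tuple(out)


def translate(dx, dy, dz):
    return lambda p: (p[0] + dx, p[1] + dy, p[2] + dz)


if __name__ == "__main__":
    elbow_point = (1.0, 1.0, 1.0)
    upper_arm = translate(0.0, 0.0, 0.0)  # this bone did not move
    forearm = translate(2.0, 0.0, 0.0)  # this bone moved 2 units along x
    print(skin_point(elbow_point, [(0.5, upper_arm), (0.5, forearm)]))  # (2.0, 1.0, 1.0)
```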

Well, that’s not the point. Until there are real animations in their demo (grass, trees, vehicles, toons, …), whatever the technical solution, the feeling will be the same: it isn’t fit for a game.

I have a feeling nobody here actually knows what they’re talking about, that is, the Unlimited Detail people. They have figured out something new and clever that no-one has quite done before, and they’re rather excited about it. Everyone slating them is doing so from the basis of some technology that’s been tried before but hasn’t quite worked out. For a start, they don’t have a voxel engine; they use point cloud data, which is different. Do they use raycasting? We don’t even know. For all we know it’s a completely new technique. The fact that it already does what it does is pretty remarkable and makes the rather lame-looking voxel examples bandied about here look somewhat tame.
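
The difference is in what gets stored: a voxel grid stores occupancy per cell, with each cell’s position implied by its index, while a point cloud stores explicit coordinates for every point, so its detail is not tied to a grid. A minimal sketch with a hypothetical `voxelize` helper showing what snapping points to a grid throws away (how their engine actually stores its points is unknown):

```python
import math

# Snapping free-floating points onto a grid: every point that falls in the same
# cell collapses into one voxel, losing its exact position.


def voxelize(points, voxel_size):
    """Return the set of occupied integer cells."""
    return {tuple(math.floor(c / voxel_size) for c in p) for p in points}


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (1.5, 0.0, 0.0)]
    print(len(cloud), len(voxelize(cloud, 1.0)))  # 3 points become 2 voxels
```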

I suspect what they have figured out is how to compress vast amounts of data into tiny amounts of memory bandwidth in order to render it. They are also probably going to use a few tricks to do instanced deformation, which, as people have pointed out, is quite crucial, and when they demonstrate this I think they’ll have made their point.
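
Instanced deformation means many placed copies share one model’s data, and each copy is bent on the fly while it is drawn. A minimal sketch, assuming a made-up wind “sway” formula and a hypothetical two-point grass blade; the shared points are never copied or modified:

```python
import math

# Instanced deformation: one shared model, many placed copies, each bent by its
# own wind phase during drawing. The sway formula is an illustrative assumption.


def sway(point, time, phase, strength=0.1):
    """Shift a point along x in proportion to its height, so the tip moves most."""
    x, y, z = point
    return (x + strength * y * math.sin(time + phase), y, z)


def instance_points(shared, offset, time, phase):
    """Yield one instance's world-space points, deformed on the fly."""
    ox, oy, oz = offset
    for p in shared:
        x, y, z = sway(p, time, phase)
        yield (x + ox, y + oy, z + oz)


if __name__ == "__main__":
    blade = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]  # base and tip of a grass blade
    for i, offset in enumerate([(0.0, 0.0, 0.0), (5.0, 0.0, 0.0)]):
        print(list(instance_points(blade, offset, time=1.0, phase=0.7 * i)))
```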

If OpenGL had been so massively derided back at the stage when it was in its infancy (“Pah! It doesn’t even do shadows or raytracing reflections!”) where do you think the graphics industry might be today? So let’s just see where this develops. I think it is probably important to bear in mind that this is currently all running entirely in software as seen in the videos at 25fps, and they’re really looking for funding to get their stuff encoded into silicon to see just how much more amazing they can get it to look.

Cas

+1

Well, even with all the great compression of data, there has to be a limit to how much unique data you can possibly get per byte. Normally that’s not a lot if you need to define even simple stuff, such as position in 3D space. For what we use now, that’s 12 bytes per unique point.

They also need to color things (and I’m not saying they’re necessarily coloring each point independently), and that costs some bytes too.

I can’t imagine a way to get that much data out of the eight on/off bits we have per byte. It just adds up to too much data to handle on a normal computer. :clue:
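
The raw arithmetic is easy to reproduce, and it also shows how far simple tricks go. A minimal sketch, assuming 3 bytes of RGB per point and one billion points (an illustrative figure, not one from the demo); the 16-bit cell-relative quantization is one common trick, not necessarily theirs:

```python
# Raw storage per point, plus one common way to shrink positions: store each
# coordinate as a 16-bit fixed-point offset inside a local cell instead of as a
# 32-bit float. Byte counts and the 16-bit choice are illustrative assumptions.


def raw_bytes(num_points, bytes_per_position=12, bytes_per_color=3):
    return num_points * (bytes_per_position + bytes_per_color)


def quantize(coord, cell_origin, cell_size, bits=16):
    """Map a coordinate in [origin, origin + size] to an integer in [0, 2**bits - 1]."""
    steps = (1 << bits) - 1
    t = min(max((coord - cell_origin) / cell_size, 0.0), 1.0)
    return round(t * steps)


def dequantize(q, cell_origin, cell_size, bits=16):
    steps = (1 << bits) - 1
    return cell_origin + q / steps * cell_size


if __name__ == "__main__":
    n = 1_000_000_000
    print(raw_bytes(n))  # 15000000000 bytes (~15 GB) with float positions
    print(raw_bytes(n, bytes_per_position=6))  # 9000000000 bytes (~9 GB) with 16-bit offsets
    q = quantize(3.7, 0.0, 10.0)
    print(q, dequantize(q, 0.0, 10.0))  # error is at most half a step (10 / 65535 / 2)
```

Even quantized, that is still several bytes per point, so getting far below it needs compression that exploits empty space and repetition, not just smaller numbers.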

Just take a look at Atomontage. The developer talks about compression levels of less than one bit per voxel.

How is that even possible?!? O_o

That’s how compression works
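
Less than one bit per voxel is possible when most of a grid is uniform, because a compressor can describe a whole region instead of each voxel. How Atomontage actually does it isn’t stated here; a minimal sketch using run-length encoding of occupancy, assuming 32 bits per stored run:

```python
# Run-length encoding of a voxel occupancy grid flattened to a 0/1 sequence.
# Long runs of empty (0) or solid (1) voxels collapse into single
# (value, length) pairs, so the cost per voxel can drop far below one bit.


def run_length_encode(bits):
    runs = []
    for b in bits:
        if runs and runs[-1][0] == b:
            runs[-1][1] += 1
        else:
            runs.append([b, 1])
    return runs


def run_length_decode(runs):
    return [value for value, length in runs for _ in range(length)]


def bits_per_voxel(num_voxels, runs, bits_per_run=32):
    return len(runs) * bits_per_run / num_voxels


if __name__ == "__main__":
    side = 64
    # Solid ground filling the bottom 8 of 64 layers, empty air above it.
    grid = [1] * (side * side * 8) + [0] * (side * side * (side - 8))
    runs = run_length_encode(grid)
    print(len(grid), len(runs), bits_per_voxel(len(grid), runs))  # 262144 2 0.000244140625
```

Real scenes are noisier than a flat slab, and color data compresses far worse than occupancy, so the achievable figure depends heavily on content.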

Consider also that this is not voxel technology; it’s a point cloud. Each point describes a sphere. You can make them as big or as little as you like. Then you can do all sorts of things to make it look like you’ve got loads more graphics than people think. Consider blending two textures using Perlin noise, for example. It’s procedurally generated, so a tiny amount of memory can be used to make an infinite texture. They’re going to need to do something similar; for example, the cactus is several simple models drawn on top of each other, rotated and scaled. It doesn’t take a genius to figure out that if they can do that, they can probably also figure out how to deform and move them in realtime too.

Cas

In fact, the smart money is probably on some sort of bone-based animation. I can’t wait to see it working.

Cas

This is a typical discussion between a technical point of view and an industrial point of view.

If OpenGL had been so massively derided back at the stage when it was in its infancy (“Pah! It doesn’t even do shadows or raytracing reflections!”) where do you think the graphics industry might be today?

Wrong question. There was no competitor to OpenGL at that time that was doing shadows or reflections.

The question should have been: when the first version of DirectX came out, was there anything that could be done in OpenGL that wasn’t doable in DirectX? If not, should DirectX still be in use now?

I’m not saying the engine has to have it now, but it has to have it in its first version.

I think it is probably important to bear in mind that this is currently all running entirely in software as seen in the videos at 25fps, and they’re really looking for funding to get their stuff encoded into silicon to see just how much more amazing they can get it to look.

That is the right question, in fact: what do they want to do?

- a personal project: no problem

- a research project: great!

- an industrial project: there’s the problem

If their demo were not real-time but had all the functionality of competing engines, I would say: yes! If they add hardware compression and optimization, there is a high probability they will have something great.

At this point, no one can say if they will be able to do something usable.

They are looking for funding for silicon? That seems to cost a lot… If they go to banks or professional investors, what will their first questions be? Almost none will be technical, apart from whether they can be competitive against other engines: business plan, patents, …

Don’t forget that there is one big thing against them… they are doing something really new, and people will have to change the way they do things.

Sorry to be so pessimistic, but recently I have seen too much money (with so many zeros) wasted because some people don’t understand that the aim of an industrial project is not to use new techniques but to make money. It was really depressing…

Ok, let’s try to be optimistic.

There is no doubt they are doing great from a technical point of view, even if I don’t like the ‘non-continuous’ approach of the model.

I hope they will be able to add animation in the next video (I didn’t see anything really new in this one).

Where they will succeed I think is in next-gen consoles if they’re going to try silicon. If Sony get interested in what they’re doing…

Cas

![Unlimited Detail Real-Time Rendering Technology Preview 2011 [HD]](https://www.jvm-gaming.org/uploads/default/original/2X/f/f9c00637555bf7fe8b4a5bd97c6d1eace1f20acf.jpeg)