Well, according to their new website, we only have another year to wait until they have a working demo available for people to try. I’ll be staying tuned for that.

This discussion is strange. Nothing is unlimited in computer science, and I completely agree with Notch: his objections are plausible. Moreover, it is already difficult to find money for research projects on mesh optimization, even though that work would be very useful for near-real-time visualization in geoscience; finding money for such a vaguely described project is even harder. They don’t need anything in silicon to show at least a tiny animation, and some people here are underestimating the difficulty of adapting point-cloud-based solutions to dynamic meshes.

It’s not technically unlimited, just as Google doesn’t technically hold all the data on the internet. It’s just an advanced culling algorithm run on every pixel. Instead of the hardware buffer approach, it’s all software.
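To make the “one result per pixel, all in software” idea concrete, here is a minimal point-splatting sketch in Python: every point is projected through a simple pinhole camera and a software depth buffer keeps only the nearest point per pixel. This is a swapped-in textbook technique, not a claim about how Unlimited Detail works (it is described as a search, which would avoid touching every point); the function name, the camera model and the `focal` parameter are assumptions for illustration.

```python
import math

def render_points(points, colors, width, height, focal):
    """Software point splatting: project each 3D point (camera space, +z forward)
    and keep the nearest one per pixel using a software depth buffer."""
    depth = [[math.inf] * width for _ in range(height)]
    image = [[None] * width for _ in range(height)]
    for (x, y, z), color in zip(points, colors):
        if z <= 0:
            continue  # behind the camera
        u = math.floor(focal * x / z + width / 2)
        v = math.floor(focal * y / z + height / 2)
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z  # nearer than anything seen so far at this pixel
            image[v][u] = color
    return image

img = render_points([(0, 0, 5), (0, 0, 2)], ["far", "near"], 4, 4, focal=2.0)
print(img[2][2])  # near
```

Both points land on the same pixel, and the depth test keeps the nearer one.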

Also, I don’t see why it would be hard to store meshes broken up into an (RGB) cube diffuse map + a (grayscale) cube depth map + an (RGB) cube normal map, as all meshes are basically one-sided hollow objects.

Not saying this is how the engine does it. (Just exploring ideas)

Using this method, objects can be scaled down without problem, and they are given a bounding box by default. The processor challenge comes when firing the ray into the depth map; it shouldn’t be hard to group the data in a cleverly indexed way.
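A minimal sketch of one face of that idea, assuming a hypothetical layout where `depth[j][i]` stores how far the surface lies behind that face of the bounding box. The ray is marched in fixed steps until it passes behind the stored depth, and the returned texel is where the diffuse and normal maps would be sampled. A real version would need all six faces and a smarter search (for example a min-depth mipmap) instead of fixed steps.

```python
import math

def march_depth_map(depth, origin, direction, step=0.25, max_steps=256):
    """March a ray into one face of a depth 'cube map'.

    depth[j][i]: distance from the face plane (z = 0) inward to the surface.
    origin: (x, y) where the ray enters the face; direction: (dx, dy, dz), dz > 0.
    Returns the (i, j) texel that was hit, or None if the ray leaves the face.
    """
    height, width = len(depth), len(depth[0])
    x, y, z = origin[0], origin[1], 0.0
    dx, dy, dz = direction
    for _ in range(max_steps):
        i, j = math.floor(x), math.floor(y)
        if not (0 <= i < width and 0 <= j < height):
            return None  # the ray left this face's footprint
        if z >= depth[j][i]:
            return (i, j)  # passed behind the stored surface: a hit
        x, y, z = x + dx * step, y + dy * step, z + dz * step
    return None

flat = [[2.0] * 4 for _ in range(4)]
print(march_depth_map(flat, (1.5, 1.5), (0.0, 0.0, 1.0)))  # (1, 1)
```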

Therefore they have to give it another name.

In my humble opinion, point cloud algorithms fit some kinds of data well, voxels are fine for others, and the same goes for polygons. Therefore, such an engine would have to convert point cloud objects into polygons or the opposite, but the known algorithms for doing that cannot be applied in real time.

Why is there absolutely no animation in their demos? They are not credible.

Strange that nobody has mentioned that Id Tech 5 is a non-polygonal, voxel-like technique. WRT unlimited: up to the precision of one’s representation, procedural techniques are ‘unlimited’. I could easily generate more unique images than anyone could look at in a lifetime… so I think it would be reasonable to call that unlimited image generation.

Wouldn’t it depend on the resolution of the data? If pixels (atoms) are stored farther apart from each other, and a ray hits the surface between two atoms whose normals face the ray, it would make sense for the resulting color to be a gradient between the atoms.
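The gradient idea can be sketched as plain linear interpolation between two neighbouring atoms: project the hit point onto the segment joining them and blend their colours by how far along it lands. This is a simplification under assumed inputs (a real renderer would probably blend more than two neighbours, e.g. trilinearly); the function name is hypothetical.

```python
def color_between_atoms(a, b, color_a, color_b, hit):
    """Blend the colours of two neighbouring atoms for a ray hit that lands between them.

    The hit point is projected onto the segment a->b; the parameter t (clamped to
    [0, 1]) is then used to linearly interpolate the two colours.
    """
    ab = [pb - pa for pa, pb in zip(a, b)]
    ah = [ph - pa for pa, ph in zip(a, hit)]
    length_sq = sum(c * c for c in ab)
    if length_sq == 0:
        return tuple(color_a)  # both atoms coincide: nothing to blend
    t = sum(u * v for u, v in zip(ah, ab)) / length_sq
    t = min(1.0, max(0.0, t))
    return tuple(ca + (cb - ca) * t for ca, cb in zip(color_a, color_b))

print(color_between_atoms((0, 0, 0), (2, 0, 0), (0, 0, 0), (255, 255, 255), (1, 0.5, 0)))
# (127.5, 127.5, 127.5)
```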

I think it makes sense that static objects are going to be easier when developing an engine. But as I said before, even if the scenes were static, you could always send a texture buffer and a depth buffer to the hardware and handle polygon rendering afterwards.
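The hybrid idea boils down to a per-pixel depth test between two layers. A CPU-side Python sketch, assuming both layers use the same depth convention (smaller means closer) and are stored as flat per-pixel lists; on real hardware the point cloud layer’s colour and depth would be uploaded as textures and the GPU’s own depth test would do this work.

```python
def composite(sw_color, sw_depth, gpu_color, gpu_depth):
    """Per-pixel depth test between a software-rendered (point cloud) layer and a
    polygon layer. All buffers are flat lists, one entry per pixel."""
    if not (len(sw_color) == len(sw_depth) == len(gpu_color) == len(gpu_depth)):
        raise ValueError("buffers must have the same number of pixels")
    return [
        sc if sd <= gd else gc  # keep whichever layer is closer at this pixel
        for sc, sd, gc, gd in zip(sw_color, sw_depth, gpu_color, gpu_depth)
    ]

print(composite(["point", "point"], [1.0, 5.0], ["poly", "poly"], [2.0, 3.0]))
# ['point', 'poly']
```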

I believe you’re thinking of Id Tech 6, and John Carmack has said that the technology needed to power it (in the mainstream) does not exist yet.

Unlimited Detail would be unlimited if it were generating more detail on the fly, with the amount of data to be generated based on the number of pixels. I’m thinking of something like a fractal: you can zoom in on one infinitely (within reason).
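A minimal sketch of that “detail on demand” idea in Python. It swaps in plain 1D value noise as the fractal (an assumption; nothing in the thread says Unlimited Detail works this way). Values are computed from coordinates rather than stored, one octave is added per doubling of zoom, and the cost of a frame depends on the number of pixels sampled rather than on how much detail the world “contains”. The hash constants are arbitrary.

```python
import math

def hash01(ix: int, octave: int, seed: int = 1234) -> float:
    """Deterministic integer hash mapped to [0, 1); the constants are arbitrary."""
    h = (ix * 374761393 + octave * 668265263 + seed * 2147483647) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 2**32

def value_noise(x: float, octave: int) -> float:
    """Smoothly interpolate hashed values at the integer lattice points around x."""
    i = math.floor(x)
    f = x - i
    a, b = hash01(i, octave), hash01(i + 1, octave)
    t = f * f * (3 - 2 * f)  # smoothstep
    return a + (b - a) * t

def height(x: float, zoom: float) -> float:
    """Fractal sum of octaves: one extra octave per doubling of zoom, so finer
    detail appears as you zoom in, yet nothing is ever stored."""
    octaves = 4 + max(0, int(math.log2(zoom)))
    total, amplitude, frequency = 0.0, 1.0, 1.0
    for octave in range(octaves):
        total += amplitude * value_noise(x * frequency, octave)
        amplitude *= 0.5
        frequency *= 2.0
    return total

def render_row(x0: float, span: float, pixels: int) -> list[float]:
    """One sample per pixel: work grows with pixels x octaves, not with scene size."""
    zoom = 1.0 / span
    return [height(x0 + span * (p + 0.5) / pixels, zoom) for p in range(pixels)]

wide = render_row(0.0, 1.0, 64)         # whole unit interval, 4 octaves
close = render_row(0.25, 1 / 1024, 64)  # 1024x zoom, 14 octaves
```

Zooming in 1024 times still costs 64 samples per row; only the number of octaves per sample grows.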

There have been games in the past that mixed very different rendering techniques, and they often ended up looking odd. For the floor and faraway scenery it would probably look fine, but low-poly people next to high-poly buildings would look strange.

Yeah… I think it is 6 now that you mention it. When has Id (at least since Quake) released an engine that runs on mainstream hardware? Huh?? My point was that a lot of people are thinking non-polygonal these days… so it’s far from insane. I have no opinion on the “tech” of the original post (seeing as I’m too lazy to watch a video).

I haven’t looked at it, but Nvidia has a sparse voxel demo here: http://code.google.com/p/efficient-sparse-voxel-octrees/
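For anyone who hasn’t looked at sparse voxel octrees either, the core idea fits in a few lines of Python: space is split recursively into eight octants, and only octants that actually contain something get a child node, so empty space costs nothing. This is a generic teaching sketch with hypothetical names, far simpler than the linked NVIDIA code.

```python
class SVONode:
    """One node of a sparse voxel octree; only occupied octants get child nodes."""
    __slots__ = ("children", "color")

    def __init__(self):
        self.children = {}  # octant index 0..7 -> SVONode; missing key = empty space
        self.color = None   # payload stored at leaf voxels

def _octant(x, y, z, half):
    # Bit 0 = upper half in x, bit 1 = upper half in y, bit 2 = upper half in z.
    return int(x >= half) | (int(y >= half) << 1) | (int(z >= half) << 2)

def insert(root, size, x, y, z, color):
    """Insert a voxel at integer coords in a cube of side `size` (a power of two)."""
    node = root
    while size > 1:
        half = size // 2
        node = node.children.setdefault(_octant(x, y, z, half), SVONode())
        x, y, z, size = x % half, y % half, z % half, half
    node.color = color

def lookup(root, size, x, y, z):
    node = root
    while size > 1:
        half = size // 2
        node = node.children.get(_octant(x, y, z, half))
        if node is None:
            return None  # empty region: this subtree was never allocated
        x, y, z, size = x % half, y % half, z % half, half
    return node.color

def count_nodes(node):
    return 1 + sum(count_nodes(c) for c in node.children.values())
```

In an 8 × 8 × 8 grid, a single voxel costs four nodes (root plus one per level) instead of 512 dense cells.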

One specific aspect they draw attention to is how they can model each grain of sand on a beach down to what they say is sub-millimetre scale. That’s pretty unlimited relative to what is currently possible even with voxels, let alone fudging with bump maps on triangles.

The way they are doing this is clearly not by storing every single grain of sand in memory. They probably have one grain of sand and an ingenious way of recursively referencing it to make bigger objects out of smaller objects out of smaller objects out of smaller objects, which appear to be overlappable, scalable and rotatable. I surmise this merely from looking at their video. How they do it is of course the big question. As nobody here really has even the faintest clue, it’s best to just wait and see what they do with it, because it looks brilliant.
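The recursive-referencing guess maps onto ordinary hierarchical instancing. A minimal Python sketch under that assumption: each node holds either its own points or references to other nodes, each reference with its own scale, rotation and offset. The names are hypothetical and the rotation is limited to the z axis to keep it short.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field

Point = tuple[float, float, float]

@dataclass(frozen=True)
class Transform:
    """Uniform scale, then rotation about the z axis, then translation."""
    scale: float = 1.0
    angle_z: float = 0.0  # radians
    offset: Point = (0.0, 0.0, 0.0)

    def apply(self, p: Point) -> Point:
        c, s = math.cos(self.angle_z), math.sin(self.angle_z)
        x, y, z = (self.scale * v for v in p)
        ox, oy, oz = self.offset
        return (c * x - s * y + ox, s * x + c * y + oy, z + oz)

@dataclass
class Node:
    points: list[Point] = field(default_factory=list)                     # leaf geometry
    children: list[tuple[Transform, Node]] = field(default_factory=list)  # references, not copies

def instanced_count(node: Node) -> int:
    """Points that appear in the world, counted without expanding anything."""
    return len(node.points) + sum(instanced_count(child) for _, child in node.children)

def world_points(node: Node, chain: tuple[Transform, ...] = ()):
    """Lazily expand the hierarchy; the innermost transform is applied first."""
    for p in node.points:
        for xf in reversed(chain):
            p = xf.apply(p)
        yield p
    for xf, child in node.children:
        yield from world_points(child, chain + (xf,))

grain = Node(points=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)])
patch = Node(children=[(Transform(offset=(2.0 * i, 0.0, 0.0)), grain) for i in range(4)])
beach = Node(children=[(Transform(scale=0.5, angle_z=math.pi / 2 * i), patch) for i in range(4)])
print(len(grain.points), instanced_count(beach))  # 3 48
```

Only three points are ever stored; every additional level multiplies the visible count without adding geometry.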

Also: I saw animation from them over a year ago. I think it was a very badly modelled hummingbird. As they say, they’re not artists.

Cas

I fully agree. As I said above, I can only see about 10 distinct items (maybe fewer), and I think that might be key here. A couple of point cloud objects might work OK on a modest PC, but for any real game you would need hundreds.

I’m pretty sure they can fit thousands into RAM - remember they don’t need textures, so all that RAM normally wasted on diffuse textures, glossmaps, bumpmaps, etc - that’s all free to store point cloud geometry instead.

Naive view - if it’s 1 vertex3f, an RGBA colour and a float radius for each point, they could store 56 million points in a gig of RAM (or disk space or whatever). That’s a lot of objects at rather high resolution I think.
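A quick check of that arithmetic in Python, assuming one byte per RGBA channel and no padding between fields (the post doesn’t say): the layout comes to 20 bytes per point, which gives about 53.7 million points per GiB (2^30 bytes) or 50 million per 10^9 bytes, the same ballpark as the 56 million quoted.

```python
import struct

# Naive per-point layout: vertex3f position, RGBA colour (one byte per channel,
# an assumption), float radius. "<" means standard sizes with no padding.
POINT_FORMAT = "<3f4Bf"
BYTES_PER_POINT = struct.calcsize(POINT_FORMAT)  # 12 + 4 + 4 = 20

def pack_point(x, y, z, r, g, b, a, radius):
    return struct.pack(POINT_FORMAT, x, y, z, r, g, b, a, radius)

def points_per_budget(budget_bytes):
    return budget_bytes // BYTES_PER_POINT

print(points_per_budget(2**30))  # 53687091
print(points_per_budget(10**9))  # 50000000
```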

Cas

Which will be fine if the size on disc increases linearly with the number of unique objects (as it would if it’s implemented in a way similar to princec’s suggestion).

If we had a resolution of 1 point per cubic millimetre and built a perfectly smooth, cube-shaped ‘building’ 10×10×3 metres in size, that would be 3,000,000 points. By that estimate, you can fit around 18 of them in 1 GB of RAM. That excludes building details (cracks, windows, door frames, surface bumps), which would use dramatically more points, as well as being able to go inside the building, random objects, the floor, trees, stuff in the background, underground stuff (e.g. sewers), people and animals. Plenty of games today have all of that and more in one area.

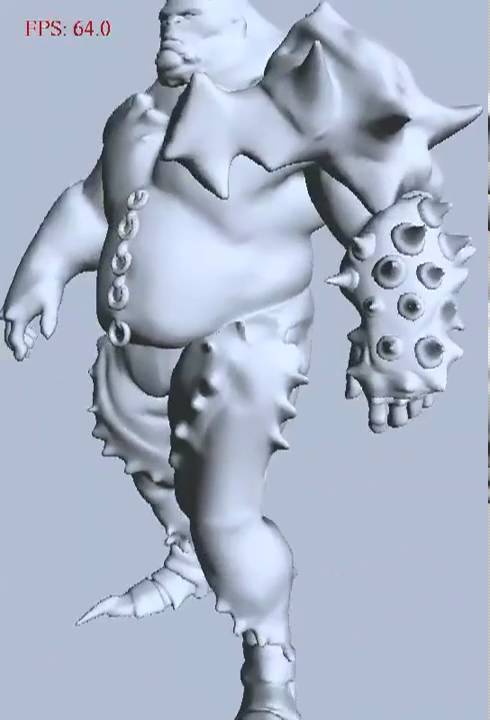

But their latest demo was at ‘64 atoms per cubic millimetre’, not 1, so you’d actually have only just over a quarter of a building. If we take compression into account and perhaps get them down to just 0.5 bits per point, you’d have around 89 entirely plain buildings in 1 GB of RAM. That’s a lot better than I had originally thought, but considering there is nothing else, it’s still not a huge amount. That is also with the very optimistic presumption of such a high compression rate for storing xyz, radius and RGBA together.
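The chain of estimates above is easy to re-run with other inputs. A short Python sketch, assuming the naive 20-byte layout from earlier in the thread and 1 GB = 2^30 bytes; with the 3,000,000-point input it reproduces the ~18, ~0.28 and ~89 figures. For comparison, `shell_points` counts only the surface of a hollow 10 × 10 × 3 m box at one point per square millimetre, which already comes to 320 million points, so the 3,000,000 figure is best read as a very optimistic lower bound.

```python
GIB_BITS = 8 * 2**30
NAIVE_BITS_PER_POINT = 20 * 8  # vertex3f (12 bytes) + RGBA8 (4) + float radius (4)

def shell_points(length_mm, width_mm, height_mm, points_per_mm2=1.0):
    """Points on the surface of a hollow box, sampled at a given areal density."""
    area = 2 * (length_mm * width_mm + length_mm * height_mm + width_mm * height_mm)
    return round(area * points_per_mm2)

def buildings_per_gib(points_per_building, bits_per_point=NAIVE_BITS_PER_POINT):
    return GIB_BITS / (points_per_building * bits_per_point)

base = 3_000_000  # the thread's figure for one plain 10 x 10 x 3 m building
dense = base * 64  # '64 atoms per cubic millimetre'
print(buildings_per_gib(base))              # about 17.9
print(buildings_per_gib(dense))             # about 0.28
print(buildings_per_gib(dense, 0.5))        # about 89.5
print(shell_points(10_000, 10_000, 3_000))  # 320000000
```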

For a whole gig of RAM, that doesn’t sound like much to me. They also said they were starting to move this onto the graphics card, so it would really need to fit into 1 GB of video RAM, and according to the Steam hardware survey, 92% to 96% of Steam users have a PC with 1 GB of video RAM or less. They also heavily implied that this will run perfectly fine on current technology, even saying they want to have it running on the Wii and on mobile phones.

I can believe the project is entirely real, with real plans to get it out, and that it’s just being heavily over-sold (the whole ‘unlimited detail’ running perfectly on today’s computers). But the numbers just don’t add up for this to be working within the next year. I’d expect the quality to drop and the hardware requirements to go up when their running demo is eventually released.

Model the bricks from which the building is constructed (215 × 102.5 × 65 mm), rather than the building as a whole.

Model small objects with point cloud data, and duplicate them to build larger constructs.

It has pleasing parallels to real world construction.

Exactly. You model 2 or 3 different bricks. Then you model 2 or 3 different sections of wall with 4x8 bricks. Then you model 4 walls out of several sections. It’s not hard to see how you’d only need a tiny fraction of that original estimate. It’s also easy to see how you could model every grain of sand on the beach, and zooming in to look at it you’d probably never know it was about 20 KB of data.
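Taking the brick idea literally, a rough storage comparison can be sketched in Python. Everything beyond the brick dimensions is an assumption: surface sampling at one point per square millimetre, the naive 20-byte point, a hypothetical 68-byte reference (a 4 × 4 float matrix plus a node index), and 10 sections per wall standing in for “several sections”.

```python
BYTES_PER_POINT = 20    # naive layout from earlier in the thread
BYTES_PER_REF = 64 + 4  # hypothetical: 4x4 float32 transform + 4-byte node index

def brick_surface_points(length_mm, width_mm, height_mm, points_per_mm2=1.0):
    """Surface points of one brick, sampled at a given areal density."""
    area = 2 * (length_mm * width_mm + length_mm * height_mm + width_mm * height_mm)
    return round(area * points_per_mm2)

def hierarchy_bytes(brick_points, brick_variants=3, section_variants=3,
                    bricks_per_section=32, sections_per_wall=10, walls=4):
    """Only the brick variants hold points; every level above stores references."""
    bricks = brick_variants * brick_points * BYTES_PER_POINT
    sections = section_variants * bricks_per_section * BYTES_PER_REF
    wall_nodes = walls * sections_per_wall * BYTES_PER_REF
    building = walls * BYTES_PER_REF
    return bricks + sections + wall_nodes + building

def flattened_bytes(brick_points, bricks_per_section=32, sections_per_wall=10, walls=4):
    """The same building with every brick stored as its own copy of the points."""
    return walls * sections_per_wall * bricks_per_section * brick_points * BYTES_PER_POINT

brick = brick_surface_points(215, 102.5, 65)  # 85350
print(hierarchy_bytes(brick))  # 5130520 (about 5.1 MB)
print(flattened_bytes(brick))  # 2184960000 (about 2.2 GB)
```

Under these assumptions the referenced version is over 400 times smaller, and almost all of its size is the three brick variants.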

Cas

Resolving searches into overlapping point clouds must be an interesting problem; I guess models further down the transformation tree take priority over their parents (so you can start out with a simple flat surface and deform it both positively and negatively using successive children).

Though it’s anybody’s guess how overlapping point clouds of two siblings in the transformation tree are handled (for instance, if the elephant statues seen in the demo were placed intersecting one another by their parent transform).

Perhaps that’s just an assets issue; i.e. don’t do it!
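The child-overrides-parent guess can be illustrated with a deliberately simplified, hypothetical 1D model in Python, where each node is an interval with a height function instead of a real point cloud. It sketches only the rule speculated above, not Unlimited Detail’s actual behaviour, and its sibling rule (first in the list wins) is an arbitrary choice that shows why overlapping siblings need some policy.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Patch:
    """A 1D stand-in for a point cloud node: a height function over [x0, x1)."""
    x0: float
    x1: float
    height: Callable[[float], float]
    children: list[Patch] = field(default_factory=list)

def surface_height(patch: Patch, x: float) -> float:
    # Children override their parent inside their own extent, so a child can raise
    # (bump) or lower (dent) the parent's surface. When siblings overlap, the first
    # one in the list wins: an arbitrary rule, which is exactly the open question.
    for child in patch.children:
        if child.x0 <= x < child.x1:
            return surface_height(child, x)
    return patch.height(x)

ground = Patch(0.0, 10.0, lambda x: 0.0, children=[
    Patch(2.0, 4.0, lambda x: 1.0, children=[Patch(2.5, 3.0, lambda x: 1.2)]),  # bump with a ridge
    Patch(6.0, 8.0, lambda x: -0.5),  # dent
    Patch(3.5, 5.0, lambda x: 9.0),   # sibling overlapping the bump
])
print([surface_height(ground, x) for x in (1.0, 2.7, 3.2, 3.7, 4.5, 7.0)])
# [0.0, 1.2, 1.0, 1.0, 9.0, -0.5]
```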

I wonder if the resolution of every object’s point cloud in a given world has to be the same, or if it can vary per object.

I have a feeling they can arbitrarily scale and rotate their point clouds.

Damn it, I really want to know how it works.

Cas

Buy a 20k system that can process billions of polys really fast, then stream that to clients over a custom internet backbone.

Is it just me, or is this not really groundbreaking? OnLive does it with current games, and VNC did it over a decade ago. It’s called video streaming with remote input.

That’s all it is, and honestly it’s not a bad idea. OnLive seems to do pretty well, but it is mainly for rich people.

You mean rich people who don’t want to pay $1200 for a decent computer but will pay $400+ a month, plus the OnLive monthly cost, for Internet good enough to play the same game in 720p with video compression and input delay? I would change that to rich, stupid people.