Hello.

I’ve been taking a look at the pathfinder we have for RF, and to my horror I realized that it has a really bad worst-case scenario for memory usage. At worst, memory usage could skyrocket to over a gigabyte! >__<

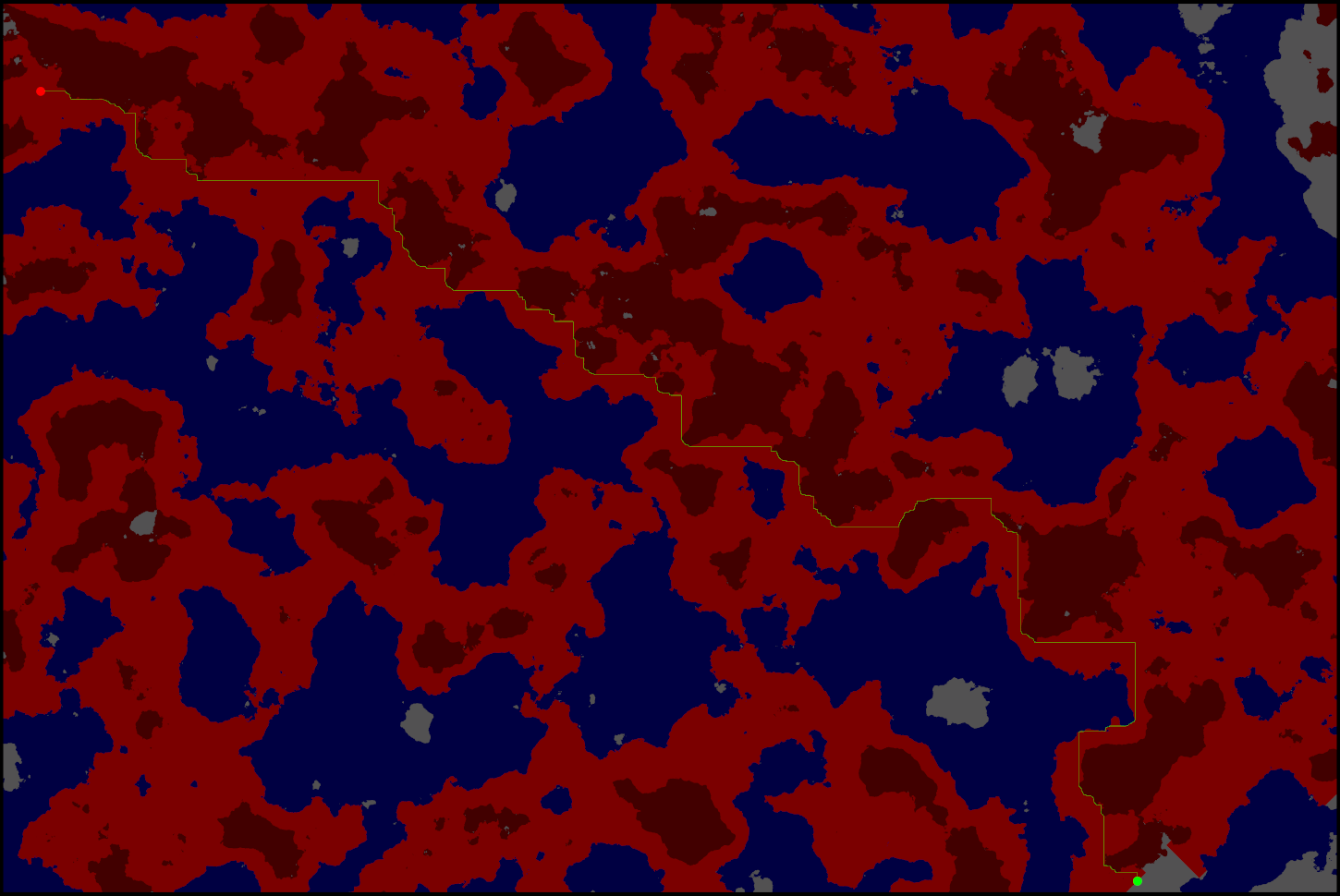

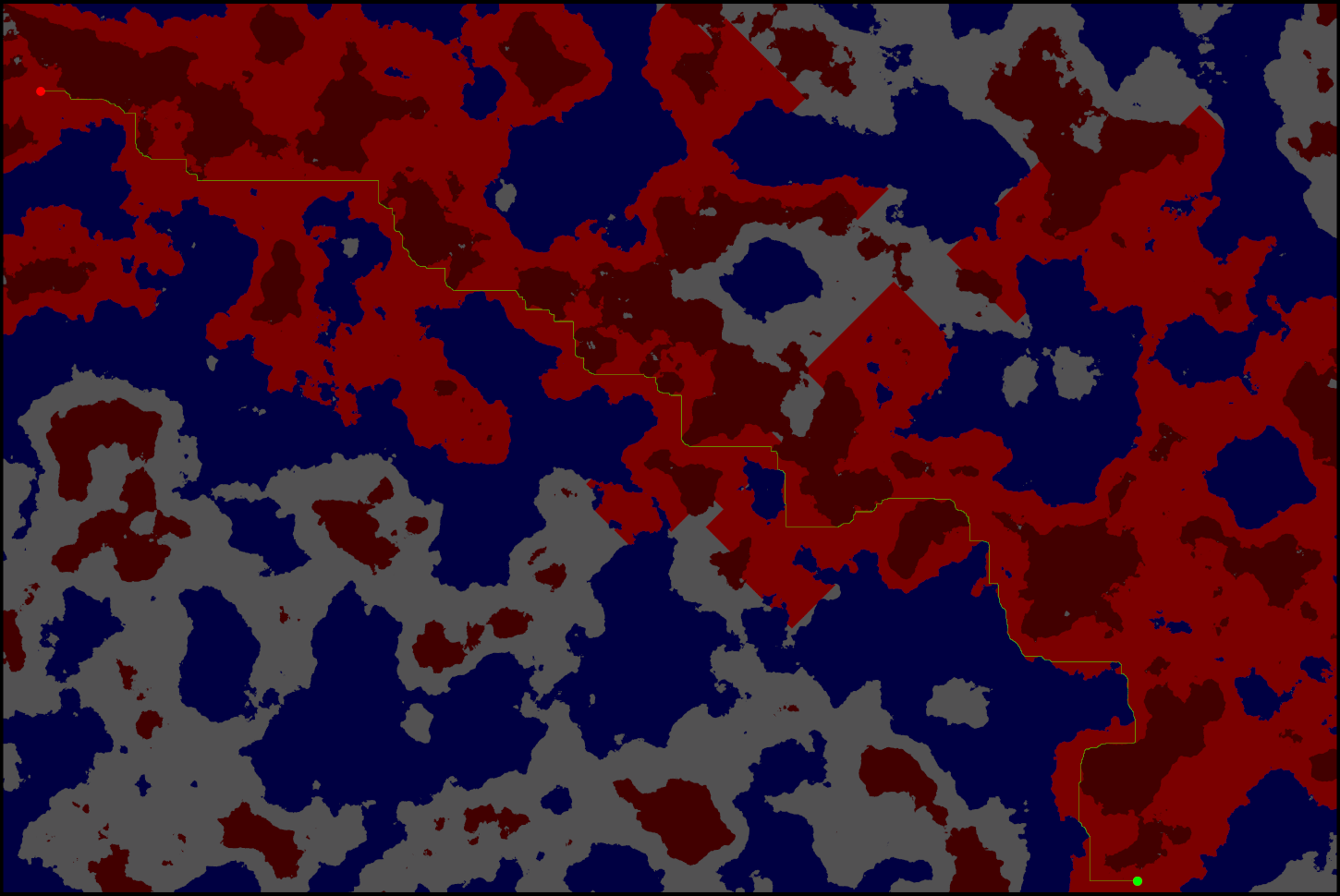

The problem is that our worlds are really big, up to 7680x5120 or so. The game world is essentially a grid, and the data is split up into chunks of 32x32 tiles that can be paged to disk when they aren’t used. The pathfinder I’m using is a bi-directional breadth-first search that searches from both the start and the goal and finds where the two searches meet, which reduces the number of tiles that get checked. For each tested tile, the pathfinder checks whether the tile is accessible, which may require loading data from disk. The last N chunks are cached in RAM until a new chunk is needed. The pathfinding may need to explore the entire map to find a path (or admit failure). This is rare, but still possible.
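For reference, here’s a minimal sketch of the kind of bi-directional breadth-first search described above. It is not the actual RF code: the class and method names are made up, the grid is a plain in-memory `boolean[][]` instead of paged chunks, and it only returns the path length rather than the path itself.

```java
import java.util.ArrayDeque;
import java.util.Arrays;

// Hypothetical sketch: bidirectional BFS over a small 4-connected grid.
class BidirectionalBfs {
    private static final int[] DX = {1, -1, 0, 0};
    private static final int[] DY = {0, 0, 1, -1};

    // Returns the number of steps on a shortest path, or -1 if none exists.
    // walkable is indexed as walkable[y][x].
    static int shortestPath(boolean[][] walkable, int sx, int sy, int gx, int gy) {
        int h = walkable.length, w = walkable[0].length;
        if (!walkable[sy][sx] || !walkable[gy][gx]) return -1;
        if (sx == gx && sy == gy) return 0;

        // Distance from each endpoint; -1 means "not visited by that search".
        int[] distStart = new int[w * h];
        int[] distGoal = new int[w * h];
        Arrays.fill(distStart, -1);
        Arrays.fill(distGoal, -1);

        ArrayDeque<Integer> startQueue = new ArrayDeque<>();
        ArrayDeque<Integer> goalQueue = new ArrayDeque<>();
        distStart[sy * w + sx] = 0;
        distGoal[gy * w + gx] = 0;
        startQueue.add(sy * w + sx);
        goalQueue.add(gy * w + gx);

        while (!startQueue.isEmpty() && !goalQueue.isEmpty()) {
            // Expand one whole level of whichever frontier is smaller.
            boolean fromStart = startQueue.size() <= goalQueue.size();
            ArrayDeque<Integer> queue = fromStart ? startQueue : goalQueue;
            int[] mine = fromStart ? distStart : distGoal;
            int[] theirs = fromStart ? distGoal : distStart;

            for (int n = queue.size(); n > 0; n--) {
                int cur = queue.poll();
                int cx = cur % w, cy = cur / w;
                for (int d = 0; d < 4; d++) {
                    int nx = cx + DX[d], ny = cy + DY[d];
                    if (nx < 0 || ny < 0 || nx >= w || ny >= h || !walkable[ny][nx]) continue;
                    int next = ny * w + nx;
                    // The two searches meet: combine both distances.
                    if (theirs[next] >= 0) return mine[cur] + 1 + theirs[next];
                    if (mine[next] < 0) {
                        mine[next] = mine[cur] + 1;
                        queue.add(next);
                    }
                }
            }
        }
        return -1; // one side ran out of tiles without meeting the other
    }
}
```

Note that the two `int[]` arrays here are exactly the kind of dense per-tile storage that becomes a problem at world scale.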

The problem I’m having is that the pathfinding requires a huge amount of data per tile. For each visited tile, I need to know whether it’s been opened by the start search and by the goal search, which I store using a job-ID system to avoid having to clear nodes between searches. Secondly, to find neighbors and to backtrack, I need to track the XY position (stored as shorts right now) and two parent references. This all sums up to 28 bytes per tile. Hell, just the array for holding the nodes of the entire world is huge, as 7680 × 5120 × 4 bytes = 150MBs. The per-tile nodes then add an additional 7680 × 5120 × 28 bytes = 1050MBs, for a total of 1200MBs of raw pathfinding data. It’s insane! The entire point of the chunk system goes out the window. In addition, I can’t track pathfinding data only for loaded chunks: if the search is wide, we’d start unloading the chunks visited at the beginning of the search (the ones around the start and the goal), which would mean that we’d be unable to backtrack. There’s no way of telling which nodes we’ll need for the final path.
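To illustrate the job-ID idea mentioned above, here’s a rough sketch. The names and the array-based layout are assumptions for illustration, not the real node class. Each node remembers the ID of the last search that opened it, so starting a new search only means bumping a counter instead of clearing every node.

```java
// Hypothetical sketch of job-ID stamping: a node counts as "opened" only if
// its stamp equals the ID of the search that is currently running.
class StampedNodes {
    private final int[] openedByStartJob;
    private final int[] openedByGoalJob;
    // Starts at 1 so the zero-initialized arrays read as "never opened".
    // Wraparound after ~2 billion searches is ignored in this sketch.
    private int currentJob = 1;

    StampedNodes(int nodeCount) {
        openedByStartJob = new int[nodeCount];
        openedByGoalJob = new int[nodeCount];
    }

    // Begins a new search; every stamp from earlier searches becomes stale
    // without touching the arrays.
    void beginSearch() {
        currentJob++;
    }

    void markOpenedByStart(int node) { openedByStartJob[node] = currentJob; }
    void markOpenedByGoal(int node)  { openedByGoalJob[node] = currentJob; }

    boolean isOpenedByStart(int node) { return openedByStartJob[node] == currentJob; }
    boolean isOpenedByGoal(int node)  { return openedByGoalJob[node] == currentJob; }
}
```

The trade-off is that the stamps themselves cost 8 bytes per node in this layout, which is part of why the per-tile footprint adds up so quickly.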

I tried limiting the search area to reduce memory usage. The idea was to preallocate a square area of nodes. This square grid of nodes would then be centered on the average of the start and goal coordinates so that the search could run with “local” nodes. This obviously limited the maximum distance between the start and the goal, and also made it impossible to find paths that need to venture outside the square area of preallocated nodes. In the end, I realized that less than 1000 tiles of search distance in all directions (2048x2048 square search area) was the minimum I could drop it to, and that’s still hundreds of megabytes of data.
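The windowing approach above boils down to translating world coordinates into a local index. A minimal sketch follows, with hypothetical names and no clamping of the window to the world bounds.

```java
// Hypothetical sketch: a square window of preallocated nodes, centered on the
// midpoint between start and goal. World coordinates outside it are rejected.
class SearchWindow {
    final int originX, originY, size;

    SearchWindow(int startX, int startY, int goalX, int goalY, int size) {
        this.size = size;
        // Top-left corner of the window in world coordinates.
        this.originX = (startX + goalX) / 2 - size / 2;
        this.originY = (startY + goalY) / 2 - size / 2;
    }

    // Returns the index into the preallocated node array, or -1 if the tile
    // lies outside the window and therefore cannot be searched.
    int localIndex(int worldX, int worldY) {
        int lx = worldX - originX;
        int ly = worldY - originY;
        if (lx < 0 || ly < 0 || lx >= size || ly >= size) return -1;
        return ly * size + lx;
    }
}
```

With a 2048x2048 window, a start at (1000, 1000) and a goal at (2000, 1000) both land inside the window, but any detour that strays more than about 1000 tiles from the midpoint returns -1, which is exactly the limitation described above.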

The thing is that the number of traversable tiles is pretty low. Most of the world is either ocean, mountains or forest, so if I could eliminate those tiles I could cut memory usage by a lot. However, the 150MBs of memory needed just for the array is a lot, and it’s so useless when a majority of the elements will be null anyway. If I could find a better way to store the pathfinding objects than in an array, I could eliminate a lot of that data. Similarly, if I can avoid allocating 70% of the nodes as they’re not traversable, that would cut memory usage from 1050MBs to ~350MBs. Finally, I could reduce memory usage of the node class from 28 bytes to 20 bytes, another 100MBs saving for a final total of 250MBs. That would be… acceptable.
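As a sanity check on the numbers, here’s the arithmetic as a small program. The class name is made up, the 30% figure is just the rough guess from above, and it counts 1MB as 1024 × 1024 bytes.

```java
// Back-of-the-envelope memory budget for the pathfinding data.
class PathfinderMemoryBudget {
    static final long WORLD_TILES = 7680L * 5120L;

    static long mib(long bytes) {
        return bytes / (1024L * 1024L);
    }

    public static void main(String[] args) {
        long traversable = WORLD_TILES * 30 / 100; // assumed: ~30% of tiles get a node

        System.out.println(mib(WORLD_TILES * 4));   // reference array: 150
        System.out.println(mib(WORLD_TILES * 28));  // all nodes at 28 bytes: 1050
        System.out.println(mib(traversable * 28));  // 30% of nodes at 28 bytes: 315
        System.out.println(mib(traversable * 20));  // 30% of nodes at 20 bytes: 225
    }
}
```

With exactly 30% of the tiles allocated, the results come out a little below my rounded ~350MBs and 250MBs figures, so the real saving depends on how many tiles actually turn out to be traversable.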

So I guess my real question is: What’s a good data structure for storing a very sparse/lazily allocated grid? It needs to be able to quickly retrieve a node given its coordinates (or null if none exists). Maybe a custom hashmap that doesn’t use linked lists to resolve collisions? Any other ideas?
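To make the hashmap idea concrete, here’s a rough sketch of what I have in mind: an open-addressing map with linear probing, keyed by the packed XY coordinates. Everything here is hypothetical (class name, hash mixing, a load factor of at most 0.5), and it assumes coordinates fit in 0..32767 so that a packed key is never negative. There’s no per-node removal, since a search only ever adds nodes.

```java
import java.util.Arrays;

// Hypothetical sketch: sparse grid backed by an open-addressing hash table
// (linear probing, no linked lists). Keys are x and y packed into one int.
class SparseNodeGrid<T> {
    private static final int EMPTY = -1; // packed keys are always >= 0
    private int[] keys;
    private Object[] values;
    private int mask;
    private int size;

    SparseNodeGrid(int expectedNodes) {
        // Round up to a power of two with room for a load factor of <= 0.5.
        allocate(Integer.highestOneBit(Math.max(2, expectedNodes * 2) - 1) << 1);
    }

    private void allocate(int capacity) {
        keys = new int[capacity];
        Arrays.fill(keys, EMPTY);
        values = new Object[capacity];
        mask = capacity - 1;
        size = 0;
    }

    private static int pack(int x, int y) {
        return (x << 16) | y;
    }

    private int slotFor(int key) {
        int h = key * 0x9E3779B9; // multiplicative mixing to spread neighbors
        return (h ^ (h >>> 16)) & mask;
    }

    // Returns the node stored at (x, y), or null if none exists.
    @SuppressWarnings("unchecked")
    T get(int x, int y) {
        int key = pack(x, y);
        for (int i = slotFor(key); keys[i] != EMPTY; i = (i + 1) & mask) {
            if (keys[i] == key) return (T) values[i];
        }
        return null;
    }

    void put(int x, int y, T node) {
        if ((size + 1) * 2 > keys.length) grow();
        insert(pack(x, y), node);
    }

    private void insert(int key, Object node) {
        int i = slotFor(key);
        while (keys[i] != EMPTY && keys[i] != key) i = (i + 1) & mask;
        if (keys[i] == EMPTY) {
            keys[i] = key;
            size++;
        }
        values[i] = node;
    }

    private void grow() {
        int[] oldKeys = keys;
        Object[] oldValues = values;
        allocate(oldKeys.length * 2);
        for (int i = 0; i < oldKeys.length; i++) {
            if (oldKeys[i] != EMPTY) insert(oldKeys[i], oldValues[i]);
        }
    }

    int size() {
        return size;
    }
}
```

The table only grows with the number of nodes actually created, but each entry still costs a key plus a reference at half occupancy, so I’m not sure it beats a two-level layout with one lazily allocated node array per 32x32 chunk.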