
Introduction

When Riven posted his cool new buffer mapping approach a year ago, I realized how important data upload to the GPU is for performance, and I’ve been using his approach ever since. Recently Nvidia published some slides on reducing OpenGL driver overhead using the latest OpenGL extensions, and one of the things they cover is how to improve buffer mapping performance. While implementing this I ran into some funny interactions with driver multithreading and instancing, but I believe I have finally come out on top! Sadly, so far only Nvidia has implemented the required functionality.

What’s wrong with unsynchronized mapped buffers?

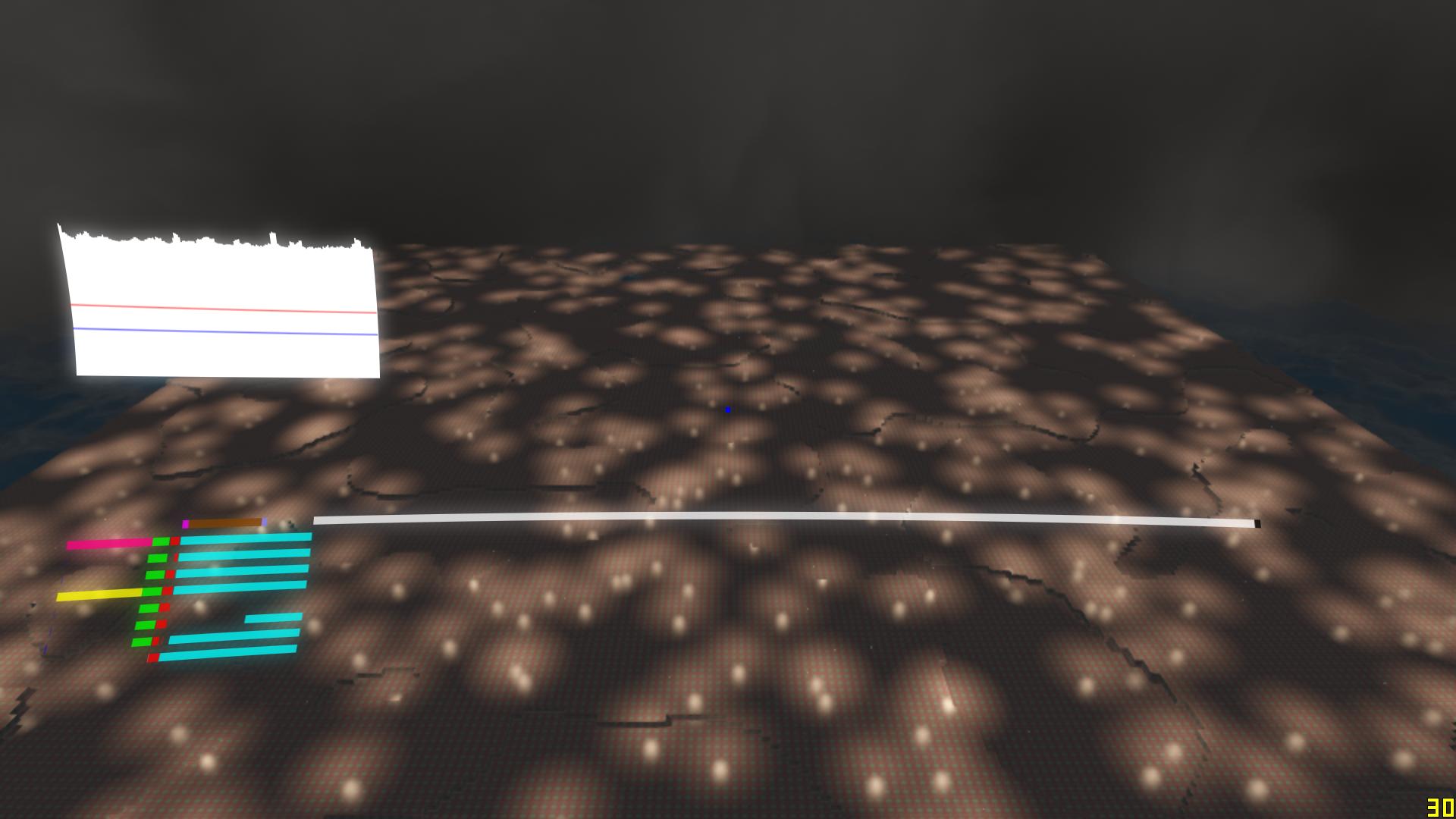

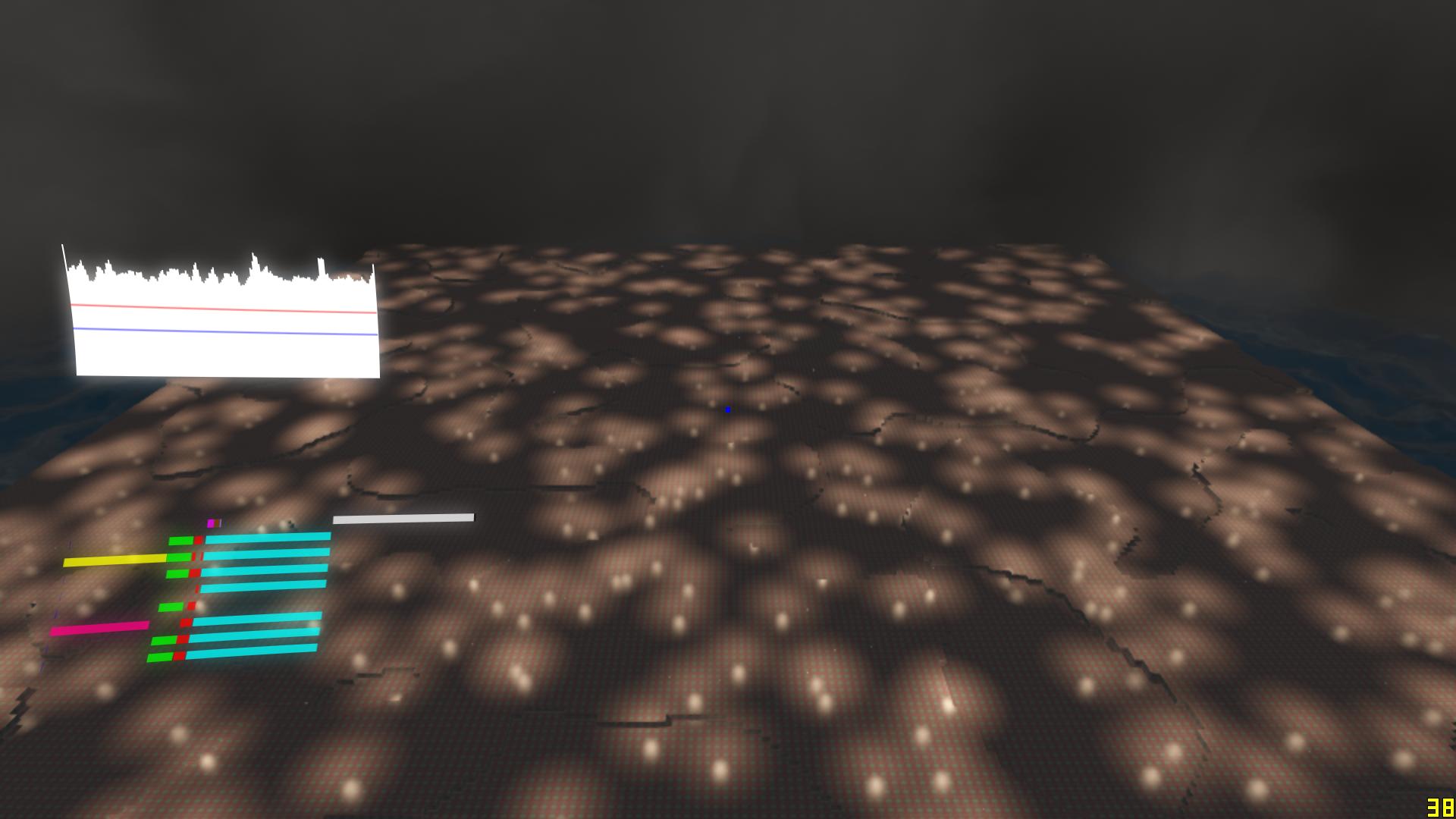

When I first looked through the slides I was genuinely surprised to see them claim that mapping buffers is slow, so I decided to do some heavy profiling of the graphics engine I’m working on. I created a scene with a massive number of shadow-casting point lights. For each light, the scene has to be culled and rendered 6 times (once per cube map face) to generate its shadow map, which is an extremely CPU-intensive task. The most expensive OpenGL calls were glDrawElementsInstanced() at 27.7% (!) and glMapBufferRange() at 11.1% of the CPU time. So buffer mapping is certainly expensive, but it’s not much compared to frustum culling at around 25-30%. The test was rendered at an extremely low resolution, and GPU load was only around 70%, indicating a CPU bottleneck.

What got me thinking was Nvidia’s claim that mapping a buffer forces the driver’s server and client threads to synchronize. What server thread? The Nvidia control panel has a setting called “Threaded optimization” which controls driver multithreading. I have kept it at “Off”, since the default setting “Auto” can completely ruin performance in some of my programs. Forcing it to “On” made performance drop by 40%, just as I remembered, but the profiling results were completely different: glMapBufferRange() and glUnmapBuffer() now account for 51.1% and 10.5% of the CPU time respectively. Holy shit! Another surprising entry is glGetError(), which I call only twice per frame yet takes 9.4% of the CPU time. These 3 OpenGL functions take up 71.0% of my CPU time! glDrawElementsInstanced(), on the other hand, is now down to 0.2%. What the hell is going on here?!

From what I can see, Threaded optimization adds an extra driver thread to which all OpenGL commands are offloaded. This extra thread complicates the OpenGL pipeline even further:

Before:

Game thread owning the OpenGL context --> OpenGL command buffer --> GPU

Now:

Game thread owning the OpenGL context --> Driver server thread --> OpenGL command buffer --> GPU

Something you learn very quickly when working with OpenGL is to avoid functions like glReadPixels() (without a PBO, of course) that make the CPU wait for the GPU to finish its work, forcing the two to synchronize. Similarly, mapping a buffer forces the game thread to wait for the server thread to finish all pending operations, stalling the game thread until the buffer can be mapped. What we’re seeing is not glMapBufferRange() becoming more expensive; we’re seeing a driver stall! Unsynchronized VBOs eliminate the synchronization with the GPU (the wait to ensure the data is no longer in use), but they cannot avoid this internal synchronization between the driver threads.
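To make the stall concrete, here is a toy model in plain Java (no OpenGL involved) of a game thread handing commands to a driver “server” thread. The class `ToyThreadedDriver` and its methods are hypothetical, and a real driver is far more complex, but the shape of the problem is the same: fire-and-forget commands return immediately, while a call that needs a result back (like a mapped pointer) has to wait until every command queued before it has been executed.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical toy model of a threaded driver: every command runs on one "server" thread.
class ToyThreadedDriver implements AutoCloseable {
    // A single-thread executor runs tasks in submission order, much like a command queue.
    private final ExecutorService server = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "driver-server");
        t.setDaemon(true);
        return t;
    });
    private final AtomicInteger executed = new AtomicInteger();

    /** Fire-and-forget command (think glDrawElementsInstanced()): returns immediately. */
    void draw(long workMillis) {
        server.execute(() -> {
            try {
                Thread.sleep(workMillis); // pretend the driver is busy processing the command
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            executed.incrementAndGet();
        });
    }

    /** Command that must return a result (think glMapBufferRange()): the caller has to wait. */
    int map() {
        Callable<Integer> query = executed::get;
        Future<Integer> result = server.submit(query);
        try {
            // Blocks until every command queued before this one has run: the thread sync.
            return result.get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    @Override
    public void close() {
        server.shutdown();
    }

    public static void main(String[] args) {
        try (ToyThreadedDriver driver = new ToyThreadedDriver()) {
            long start = System.nanoTime();
            for (int i = 0; i < 20; i++) driver.draw(5); // queued, returns almost instantly
            long queued = System.nanoTime();
            int done = driver.map();                      // stalls until all 20 draws have run
            long mapped = System.nanoTime();
            System.out.printf("queueing: %.1f ms, map(): %.1f ms, commands done at map: %d%n",
                    (queued - start) / 1e6, (mapped - queued) / 1e6, done);
        }
    }
}
```

Queueing the 20 draws takes almost no time, while map() takes roughly the sum of the queued work (about 100 ms here), even though map() itself does nothing expensive. That is the same pattern as the profile above, where the cost moved from glDrawElementsInstanced() to glMapBufferRange().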

The solution

The solution is actually ridiculously simple: don’t map buffers, or rather, don’t map buffers every frame. The OpenGL extension ARB_buffer_storage adds two new buffer mapping bits, GL_MAP_PERSISTENT_BIT and GL_MAP_COHERENT_BIT. Before ARB_buffer_storage it was impossible to use a buffer on the GPU while it was mapped. GL_MAP_PERSISTENT_BIT lifts this restriction, allowing us to simply keep the buffer mapped indefinitely. GL_MAP_COHERENT_BIT ensures that the data we write through the mapped pointer becomes visible to subsequent GPU commands without us having to flush anything manually. Used together in the same unsynchronized, rotating manner as Riven’s approach, they eliminate buffer mapping completely, except once when the game starts (and whenever a buffer has to grow). Since the new method is so similar to Riven’s approach, it’s easy to create a generic interface that can be implemented with both unsynchronized VBOs and persistent VBOs. In the following code, map() is supposed to expand the VBO in case it is not large enough to satisfy the requested capacity. Buffer rotation is handled elsewhere.

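```java
import java.nio.ByteBuffer;

public interface MappedVBO {

    /** Returns a buffer with room for at least requestedCapacity bytes, growing the VBO if needed. */
    public ByteBuffer map(int requestedCapacity);

    /** Finishes writing; called before the VBO is used for drawing. */
    public void unmap();

    public void bind();

    public void dispose();
}
```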
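Since rotation is left to the caller, here is one possible way to do it, as a sketch: keep N buffers and advance to the next one every frame, so the GPU can still be reading frame k’s data while the CPU writes frame k+1’s. The `BufferRing` class, the choice of 3 buffers, and the heap-backed `FakeMappedVBO` (used only so the example runs without an OpenGL context) are assumptions, not part of the original engine. Plain rotation assumes the GPU never falls more than N-1 frames behind; a fence (glFenceSync()) would be the more robust guarantee.

```java
import java.nio.ByteBuffer;
import java.util.function.Supplier;

// Same interface as above, repeated so this sketch compiles on its own.
interface MappedVBO {
    ByteBuffer map(int requestedCapacity);
    void unmap();
    void bind();
    void dispose();
}

// Hypothetical stand-in that uses heap memory instead of a real VBO (no GL context needed).
class FakeMappedVBO implements MappedVBO {
    private ByteBuffer data = ByteBuffer.allocate(0);

    public ByteBuffer map(int requestedCapacity) {
        if (data.capacity() < requestedCapacity) data = ByteBuffer.allocate(requestedCapacity * 2);
        data.clear();
        return data;
    }
    public void unmap() {}
    public void bind() {}
    public void dispose() {}
}

// Hypothetical ring that hands out a different buffer each frame.
class BufferRing {
    private final MappedVBO[] buffers;
    private int current = -1;

    BufferRing(int count, Supplier<MappedVBO> factory) {
        buffers = new MappedVBO[count];
        for (int i = 0; i < count; i++) buffers[i] = factory.get();
    }

    /** Call once per frame before writing; returns the buffer this frame may overwrite. */
    MappedVBO next() {
        current = (current + 1) % buffers.length;
        return buffers[current];
    }

    int currentIndex() { return current; }

    void dispose() { for (MappedVBO b : buffers) b.dispose(); }

    public static void main(String[] args) {
        BufferRing ring = new BufferRing(3, FakeMappedVBO::new);
        for (int frame = 0; frame < 5; frame++) {
            MappedVBO vbo = ring.next();
            ByteBuffer data = vbo.map(64); // write this frame's instance data
            data.putFloat(frame);
            vbo.unmap();                   // a no-op for persistent VBOs
            vbo.bind();                    // ...then issue the draw call
            System.out.println("frame " + frame + " uses buffer " + ring.currentIndex());
        }
        ring.dispose();
    }
}
```

Because the caller only sees the MappedVBO interface, swapping FakeMappedVBO for either real implementation would not change the per-frame loop.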

Here’s an implementation using unsynchronized VBOs. The buffer is simply mapped each frame using GL_MAP_UNSYNCHRONIZED_BIT.

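```java
// LWJGL 2 static imports (GL15: buffer objects, GL30: glMapBufferRange and its access bits)
import static org.lwjgl.opengl.GL15.*;
import static org.lwjgl.opengl.GL30.*;

import java.nio.ByteBuffer;

public class UnsynchronizedMappedVBO implements MappedVBO {

    protected int target;
    protected int vbo;
    protected int capacity;
    protected ByteBuffer mappedBuffer;

    public UnsynchronizedMappedVBO(int target) {
        this.target = target;
        vbo = glGenBuffers();
        // No storage yet: the first map() call allocates it through setCapacity().
        capacity = 0;
    }

    @Override
    public ByteBuffer map(int requestedCapacity) {
        glBindBuffer(target, vbo);
        if (capacity < requestedCapacity) {
            setCapacity(requestedCapacity);
        }
        // GL_MAP_UNSYNCHRONIZED_BIT: the driver does not wait for the GPU to finish with this range,
        // so the buffer rotation must guarantee the GPU is no longer reading it.
        mappedBuffer = glMapBufferRange(target, 0, requestedCapacity,
                GL_MAP_WRITE_BIT | GL_MAP_UNSYNCHRONIZED_BIT, mappedBuffer);
        mappedBuffer.clear();
        return mappedBuffer;
    }

    protected void setCapacity(int requestedCapacity) {
        // Over-allocate so slowly growing data does not cause a reallocation every frame.
        capacity = requestedCapacity * 2;
        glBufferData(target, capacity, GL_STREAM_DRAW);
    }

    @Override
    public void unmap() {
        // Rebind in case another buffer was bound to the same target since map().
        glBindBuffer(target, vbo);
        glUnmapBuffer(target);
    }

    @Override
    public void bind() {
        glBindBuffer(target, vbo);
    }

    @Override
    public void dispose() {
        glDeleteBuffers(vbo);
    }
}
```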
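Both implementations grow the buffer to twice the requested size when it is too small. The plain-Java sketch below (the `GrowthPolicy` class is hypothetical and touches no OpenGL) mirrors that rule and counts how many reallocations (glBufferData() or glBufferStorage() calls in the real classes) a steadily growing demand would trigger.

```java
// Hypothetical helper mirroring the "capacity = requestedCapacity * 2" rule of setCapacity().
class GrowthPolicy {
    private int capacity = 0;
    private int reallocations = 0;

    /** Returns true if this request forced a reallocation. */
    boolean request(int requestedCapacity) {
        if (capacity >= requestedCapacity) return false;
        // Math.multiplyExact throws instead of silently overflowing for requests of 1 GiB or more.
        capacity = Math.multiplyExact(requestedCapacity, 2);
        reallocations++;
        return true;
    }

    int capacity() { return capacity; }

    int reallocations() { return reallocations; }

    public static void main(String[] args) {
        GrowthPolicy policy = new GrowthPolicy();
        // Demand grows by 1 KiB per frame for 1000 frames (about 1 MB at the end).
        for (int frame = 1; frame <= 1000; frame++) policy.request(frame * 1024);
        System.out.println("reallocations: " + policy.reallocations()
                + ", final capacity: " + policy.capacity() + " bytes");
    }
}
```

For the loop in main() this works out to 9 reallocations over 1000 frames, which is why doubling keeps the occasional reallocation (and, for persistent VBOs, the remap) rare.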

With persistent VBOs, map() no longer needs to actually map anything! We map the buffer once when it’s created and then simply return the same ByteBuffer instance every time map() is called. Only when the buffer is too small do we need to reallocate it and map it again.

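```java
// LWJGL 2 static imports (GL44 provides glBufferStorage and the persistent/coherent bits)
import static org.lwjgl.opengl.GL15.*;
import static org.lwjgl.opengl.GL30.*;
import static org.lwjgl.opengl.GL44.*;

import java.nio.ByteBuffer;

public class PersistentMappedVBO implements MappedVBO {

    // Persistent mapping requires the same bits in both glBufferStorage() and glMapBufferRange().
    private static final int FLAGS = GL_MAP_WRITE_BIT | GL_MAP_PERSISTENT_BIT | GL_MAP_COHERENT_BIT;

    protected int target;
    protected int vbo;
    protected int capacity;
    protected ByteBuffer mappedBuffer;

    public PersistentMappedVBO(int target) {
        this.target = target;
        // No buffer yet: the first map() call creates and maps one through setCapacity().
        capacity = 0;
    }

    @Override
    public ByteBuffer map(int requestedCapacity) {
        glBindBuffer(target, vbo);
        if (requestedCapacity > capacity) {
            setCapacity(requestedCapacity);
        }
        // Already mapped: just reset position/limit for this frame's writes.
        mappedBuffer.clear();
        return mappedBuffer;
    }

    protected void setCapacity(int requestedCapacity) {
        capacity = requestedCapacity * 2;
        if (mappedBuffer != null) {
            // glBufferStorage() creates immutable storage, so growing means a brand-new buffer.
            glUnmapBuffer(target);
            glDeleteBuffers(vbo);
        }
        vbo = glGenBuffers();
        glBindBuffer(target, vbo);
        glBufferStorage(target, capacity, FLAGS);
        mappedBuffer = glMapBufferRange(target, 0, capacity, FLAGS, mappedBuffer);
    }

    @Override
    public void unmap() {
        // Nothing to do: the buffer stays mapped for its whole lifetime (GL_MAP_PERSISTENT_BIT),
        // and GL_MAP_COHERENT_BIT makes the writes visible to the GPU without explicit flushes.
    }

    @Override
    public void bind() {
        glBindBuffer(target, vbo);
    }

    @Override
    public void dispose() {
        if (mappedBuffer != null) {
            glBindBuffer(target, vbo);
            glUnmapBuffer(target);
            mappedBuffer = null;
        }
        glDeleteBuffers(vbo);
    }
}
```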
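The persistent version relies on handing out the same ByteBuffer every frame and calling clear() on it. The small sketch below uses a direct ByteBuffer as a stand-in for the mapped memory (no GL involved; the `MappedBufferReuse` class is hypothetical) to show what clear() does and does not do: it resets position and limit so writing starts at offset 0 again, but it does not erase anything. With a persistent mapping, that memory is what the GPU reads, which is why the rotation has to ensure the GPU is done with a buffer before it is written again.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

class MappedBufferReuse {
    /** Hypothetical helper: two "frames" writing into one reused buffer, like PersistentMappedVBO.map(). */
    static ByteBuffer simulateTwoFrames() {
        // Direct buffer standing in for the persistently mapped memory (allocated once).
        ByteBuffer mapped = ByteBuffer.allocateDirect(16).order(ByteOrder.nativeOrder());

        mapped.clear();
        mapped.putFloat(1f).putFloat(2f); // frame 1 writes two floats

        mapped.clear();                   // frame 2: same instance, position back to 0, limit to 16
        mapped.putFloat(3f);              // frame 2 writes only one float
        return mapped;
    }

    public static void main(String[] args) {
        ByteBuffer mapped = simulateTwoFrames();
        // clear() only rewinds; frame 1's second float is still in memory.
        System.out.println("position=" + mapped.position() + ", limit=" + mapped.limit()
                + ", float0=" + mapped.getFloat(0) + ", float1=" + mapped.getFloat(4));
        // prints position=4, limit=16, float0=3.0, float1=2.0
    }
}
```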

Instancing?!

For a long time I’ve been trying to get Nvidia to fix their broken instancing performance. The whole point of instancing is to reduce the CPU overhead of drawing many identical objects in different locations, but instancing is currently much slower than simply batching a few “instances” together into a single VBO and drawing 64 of them at a time with a normal glDrawElements() call. Instancing is so slow that I actually implemented an Nvidia-specific renderer for parts of my engine to avoid the problem. I’m happy to report that, for whatever reason, instancing performance is through the roof when using persistent mapped buffers, matching or surpassing that of my Nvidia-specific renderer!
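For comparison, the batching workaround described above amounts to copying the mesh several times into one VBO and offsetting each copy’s indices, so that a single glDrawElements() call draws all of them. The sketch below only builds the CPU-side arrays; the `MeshBatcher` name, the position-only vertex layout, and baking a per-copy translation into the vertices are illustrative assumptions, not a description of the author’s Nvidia-specific renderer.

```java
import java.util.Arrays;

// Hypothetical helper: builds one vertex/index array holding offsets.length copies of a mesh.
class MeshBatcher {
    final float[] vertices; // x, y, z per vertex
    final int[] indices;

    MeshBatcher(float[] meshVertices, int[] meshIndices, float[][] offsets) {
        int vertexCount = meshVertices.length / 3;
        vertices = new float[meshVertices.length * offsets.length];
        indices = new int[meshIndices.length * offsets.length];
        for (int copy = 0; copy < offsets.length; copy++) {
            // Bake this copy's translation into its vertices (instancing would apply it on the GPU).
            for (int v = 0; v < vertexCount; v++) {
                for (int axis = 0; axis < 3; axis++) {
                    vertices[(copy * vertexCount + v) * 3 + axis] =
                            meshVertices[v * 3 + axis] + offsets[copy][axis];
                }
            }
            // Shift the indices so they point at this copy's vertices.
            for (int i = 0; i < meshIndices.length; i++) {
                indices[copy * meshIndices.length + i] = meshIndices[i] + copy * vertexCount;
            }
        }
    }

    public static void main(String[] args) {
        float[] triangle = {0, 0, 0, 1, 0, 0, 0, 1, 0};
        int[] triangleIndices = {0, 1, 2};
        float[][] offsets = {{0, 0, 0}, {5, 0, 0}};
        MeshBatcher batch = new MeshBatcher(triangle, triangleIndices, offsets);
        System.out.println(Arrays.toString(batch.indices)); // [0, 1, 2, 3, 4, 5]
        System.out.println(Arrays.toString(batch.vertices));
    }
}
```

The trade-off is memory and CPU work proportional to the number of copies, which is exactly the overhead instancing is meant to remove when the driver handles it efficiently.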

Aaaaand finally some numbers

Threaded optimization off, using unsynchronized VBOs

45 FPS, 22.222 ms/frame

Threaded optimization off, using unsynchronized VBOs, using Nvidia specific renderer (no instancing, avoids most buffer mapping)

75 FPS, 13.333 ms/frame

Threaded optimization on, using persistent VBOs

88 FPS, 11.364 ms/frame
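The ms/frame figures are simply 1000 / FPS. A short sketch (the `FrameTimes` class is purely illustrative) reproduces them and expresses each configuration relative to the first one:

```java
class FrameTimes {
    static double msPerFrame(double fps) { return 1000.0 / fps; }

    /** How many times higher fps is than baselineFps. */
    static double speedup(double fps, double baselineFps) { return fps / baselineFps; }

    public static void main(String[] args) {
        String[] configs = {"unsynchronized VBOs", "Nvidia-specific renderer", "persistent VBOs"};
        double[] fps = {45, 75, 88};
        for (int i = 0; i < fps.length; i++) {
            System.out.printf("%-26s %5.1f FPS  %7.3f ms/frame  %.2fx%n",
                    configs[i], fps[i], msPerFrame(fps[i]), speedup(fps[i], fps[0]));
        }
    }
}
```

By this measure, persistent VBOs with threaded optimization run at about 1.96× the frame rate of the first configuration and about 1.17× that of the Nvidia-specific renderer (88 / 75).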

Conclusion

Although persistent buffers are a bit faster in terms of raw speed, their other advantages are much more interesting. Because they make efficient use of threaded optimization possible (it gets enabled automatically at the default “Auto” setting), the game’s thread is freed up to do other things. In fact, with persistent VBOs around 25% of the frame time is spent in Display.update() waiting for the still-busy driver thread, which means I could add quite a bit of game logic to the main thread without affecting my game’s frame rate. The massive improvement to instancing is also great, and it is responsible for most of the performance gain. It also means that I no longer have to maintain two separate renderers.
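As a back-of-the-envelope illustration of that headroom: if about 25% of an 88 FPS frame is spent waiting in Display.update(), the main thread has roughly 2.8 ms per frame to spare. The `FrameHeadroom` helper is hypothetical, and the estimate assumes the extra game logic does not itself compete with the driver thread for CPU time, which depends on the machine.

```java
class FrameHeadroom {
    /** Milliseconds per frame the main thread spends waiting, i.e. potentially free for other work. */
    static double idleMs(double fps, double waitingFraction) {
        return 1000.0 / fps * waitingFraction;
    }

    public static void main(String[] args) {
        // 88 FPS and ~25% of the frame spent waiting in Display.update(), as measured above.
        System.out.printf("~%.1f ms of main-thread time per frame%n", idleMs(88, 0.25));
    }
}
```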

(nvidia gtx280)
