Hello everyone, I’ve been having trouble improving the framerate when rendering my scene, so any help would be fantastic. I’ve also posted this question on StackOverflow (http://stackoverflow.com/questions/31361673/how-do-i-improve-libgdx-3d-rendering-performance) if anyone would rather answer there. Here’s the question:

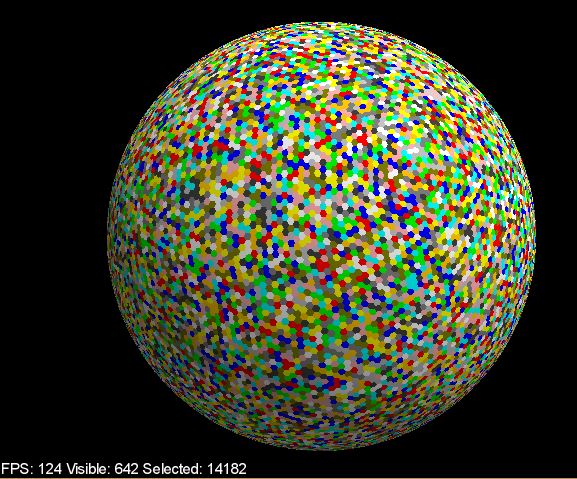

I’m working on rendering a tiled sphere with LibGDX for a desktop game. Here are some images of what I’ve got so far: http://imgur.com/GoYvEYZ,xf52D6I#0. I’m rendering roughly 10,000 ModelInstances, all generated in code, each with its own ModelBuilder. Each one contains 3 or 4 triangular parts, and every ModelInstance has its own unique Model. Here’s the exact rendering code I’m using:

```java
modelBatch.begin(cam);

// Render all visible tiles
visibleCount = 0;
for (Tile t : tiles) {
    if (isVisible(cam, t)) {
        // t.rendered is a ModelInstance produced earlier by code.
        // The Model corresponding to the instance is unique to this tile.
        modelBatch.render(t.rendered, environment);
        visibleCount++;
    }
}

modelBatch.end();
```

The ModelInstances are not regenerated every frame, only drawn; I rebuild them only when I need to. The `isVisible` check is just very simple frustum culling, which I followed from this tutorial: https://xoppa.github.io/blog/3d-frustum-culling-with-libgdx/. As you can tell from my diagnostic information, my FPS is terrible. I’m aiming for at least 60 FPS on what I hope is a fairly simple scene made of lots of polygons. I just know I’m doing this very inefficiently.
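For reference, the bounding-sphere test behind this kind of frustum culling boils down to something like the plain-Java sketch below. It only illustrates the idea and is not LibGDX’s `Frustum` class: the `FrustumSketch` class, the method name, and the plane layout `{nx, ny, nz, d}` with unit normals pointing into the frustum are all hypothetical.

```java
/** Hypothetical illustration of a bounding-sphere frustum test (not the LibGDX API). */
final class FrustumSketch {
    private FrustumSketch() {}

    /**
     * Each plane is {nx, ny, nz, d} with a unit normal pointing into the frustum,
     * so a point p is on the inner side when nx*px + ny*py + nz*pz + d >= 0.
     */
    static boolean sphereInFrustum(double[][] planes, double cx, double cy, double cz, double radius) {
        for (double[] plane : planes) {
            // Signed distance from the sphere center to this plane.
            double dist = plane[0] * cx + plane[1] * cy + plane[2] * cz + plane[3];
            // Entirely on the outer side of any one plane: the sphere cannot be visible.
            if (dist < -radius) {
                return false;
            }
        }
        // Inside or intersecting every plane: treat as potentially visible.
        return true;
    }
}
```

The test is conservative: near the frustum’s corners it can keep a few tiles that are actually just outside, but it never rejects a sphere that overlaps the frustum. With around 10,000 tiles it still runs once per tile per frame, so it reduces what gets drawn without reducing the per-tile loop itself.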

I’ve researched how people typically solve this, but I’m stuck applying those solutions to my project. For example, dividing the scene into chunks is often recommended, but I don’t see how to make use of that when the player can rotate the sphere and view all sides. I’ve also read about occlusion culling, which would let me render only the ModelInstances on the side of the sphere facing the camera, but I’m at a loss as to how to implement that in LibGDX.
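Rendering only the side of the sphere that faces the camera can be expressed as a per-tile test (strictly speaking this is closer to horizon or back-face culling than to general occlusion culling): a tile center on the sphere’s surface faces the camera when the camera lies in front of the tangent plane at that point. Below is a minimal plain-Java sketch, assuming tile centers and the camera position are already in world space. `HorizonSketch`, `facesCamera`, and the `margin` parameter are hypothetical names; the margin is an assumed tolerance so that large tiles straddling the horizon are not dropped too early.

```java
/** Hypothetical horizon test for tiles on a sphere (plain Java, not a LibGDX API). */
final class HorizonSketch {
    private HorizonSketch() {}

    /**
     * Returns true when the camera is in front of the tangent plane at tileCenter,
     * i.e. the tile lies on the part of the sphere visible from the camera.
     * All vectors are {x, y, z} in world space; margin (same units) keeps tiles
     * slightly past the horizon so their edges do not disappear early.
     */
    static boolean facesCamera(double[] tileCenter, double[] sphereCenter, double[] camPos, double margin) {
        // Outward surface normal at the tile (not yet normalized).
        double nx = tileCenter[0] - sphereCenter[0];
        double ny = tileCenter[1] - sphereCenter[1];
        double nz = tileCenter[2] - sphereCenter[2];
        double len = Math.sqrt(nx * nx + ny * ny + nz * nz);

        // Vector from the tile to the camera.
        double vx = camPos[0] - tileCenter[0];
        double vy = camPos[1] - tileCenter[1];
        double vz = camPos[2] - tileCenter[2];

        // Signed distance of the camera from the tile's tangent plane.
        double signedDistance = (nx * vx + ny * vy + nz * vz) / len;
        return signedDistance > -margin;
    }
}
```

In the existing render loop this would be one more condition next to `isVisible(cam, t)`, using `cam.position` and the tile’s world-space center (or porting the arithmetic to LibGDX’s `Vector3`). If the player rotates the sphere by changing model transforms rather than moving the camera, the tile centers would have to be transformed into world space before the test.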

Additionally, how bad is it that every ModelInstance uses its own Model? Would performance improve if they all shared a single Model? If anyone can point me to more resources or has good recommendations for improving performance here, I’d be grateful.
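On the shared-Model question: roughly speaking, every separate mesh part costs its own draw call, so the usual direction is to pack many tiles’ triangles into a few large vertex buffers. The plain-Java sketch below shows only the data side of that idea. It assumes each tile’s triangles are a flat `float[]` of x, y, z positions and concatenates tiles into chunk arrays, starting a new chunk whenever a per-chunk vertex cap would be exceeded (the cap might be chosen, for instance, so that 16-bit indices stay in range). Uploading each chunk as a mesh is left out; `ChunkMergeSketch`, `merge`, and the cap are assumptions, not LibGDX API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch: pack many small tiles into a few large vertex arrays ("chunks"). */
final class ChunkMergeSketch {
    /** Floats per vertex: position only (x, y, z) in this sketch. */
    static final int FLOATS_PER_VERTEX = 3;

    private ChunkMergeSketch() {}

    /**
     * Concatenates tile vertex data into chunks of at most maxVerticesPerChunk vertices.
     * Tiles are never split across chunks, so each chunk could be drawn as one mesh.
     */
    static List<float[]> merge(List<float[]> tiles, int maxVerticesPerChunk) {
        int capacity = maxVerticesPerChunk * FLOATS_PER_VERTEX;
        List<float[]> chunks = new ArrayList<>();
        float[] current = new float[capacity]; // scratch buffer reused for every chunk
        int used = 0;                          // floats written into current so far

        for (float[] tile : tiles) {
            if (tile.length > capacity) {
                throw new IllegalArgumentException("tile has more vertices than a chunk can hold");
            }
            if (used + tile.length > capacity) {
                // Close the current chunk (copied out) and start refilling the buffer.
                chunks.add(Arrays.copyOf(current, used));
                used = 0;
            }
            System.arraycopy(tile, 0, current, used, tile.length);
            used += tile.length;
        }
        if (used > 0) {
            chunks.add(Arrays.copyOf(current, used));
        }
        return chunks;
    }
}
```

With 10,000 tiles of 3 or 4 triangles each, this would turn roughly 10,000 separate models into a handful of chunks. The trade-off is that updating a single tile would mean rebuilding (or patching) the chunk that contains it, and the chunks would also become the natural units for the frustum and facing tests.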