Glad to see things clearing up!

[quote]So here we have an example of a tone mapper that doesn’t quite map everything to 0,1; assuming that they are left above 1 (as can clearly be seen in the picture). So is the point not to necessarily get everything to [0,1], but to apply a scaling function to the colors on the screen to ensure the best possible display on an LDR screen? Does this mean that the graphics card/OpenGL does “something” to the colors that are still higher than 1 at render time, to make them displayable? This is the only way for me to rationalize what is going on, because obviously they’re being displayed, and obviously the vibrance is being retained.

[/quote]

No, nothing fancy happens. When you finally write to the LDR backbuffer, the values are simply clamped to [0, 1]. This isn’t as bad as it might seem, though.
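To make the clamping concrete, here is a rough CPU-side sketch in C of what effectively happens to one color channel when it lands in the backbuffer. It assumes an 8-bit-per-channel backbuffer and round-to-nearest conversion; the function names clamp01 and to_ldr_byte are made up for illustration and are not OpenGL calls.

```c
#include <stdint.h>

/* Clamp one channel to the displayable range [0, 1]. */
static float clamp01(float c) {
    if (c < 0.0f) return 0.0f;
    if (c > 1.0f) return 1.0f;
    return c;
}

/* Assumption: 8 bits per channel, rounded to the nearest step.
   Anything above 1.0 ends up as 255, anything below 0.0 as 0. */
static uint8_t to_ldr_byte(float c) {
    return (uint8_t)(clamp01(c) * 255.0f + 0.5f);
}
```

So an HDR value of 2.5 and a value of 1.0 both come out as full white on the screen, which is why you want bloom and exposure to do something useful with the range above 1 before that final write.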

[quote]Is the exposure in this case just a flat multiplier to the colors in the backbuffer? i.e. a camera’s exposure is just letting more light come in before closing the shutter. The bright spots get brighter by a larger amount than the dark spots because more light is coming into the lens every second.

[/quote]

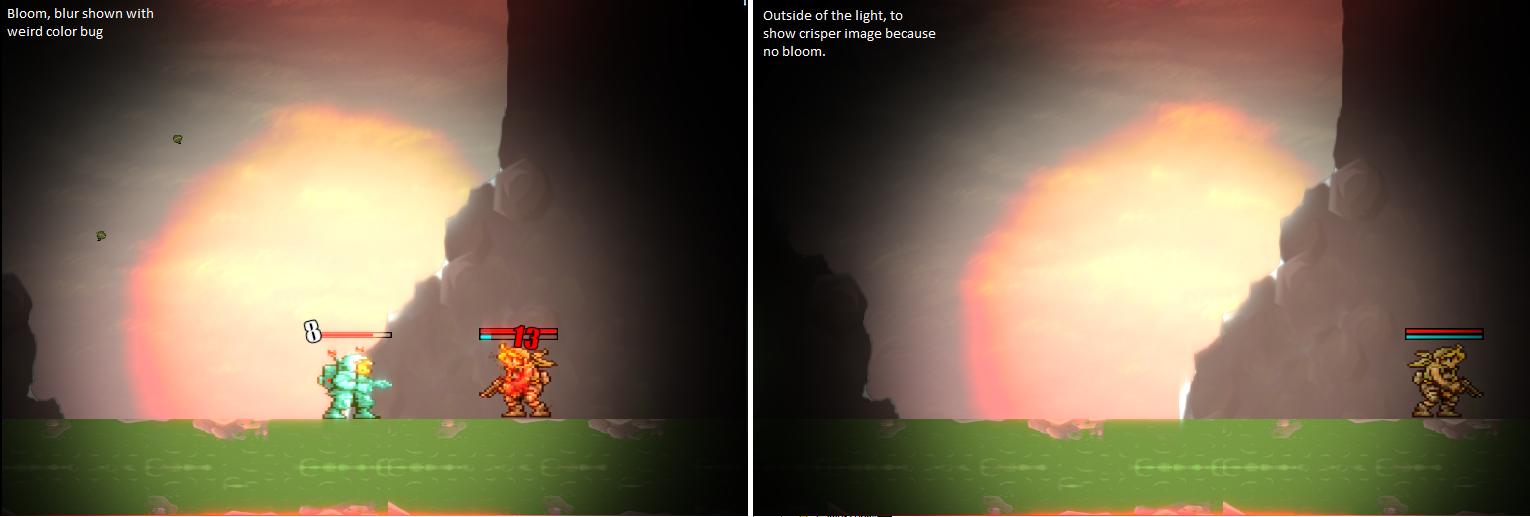

Yes, exposure is just a multiplier. The combination of exposure and bloom gives quite nice results. If you want to see the dark details in a scene, you have to increase the shutter time (i.e. the exposure), but if there is something bright in view, you’ll just get a very bright image as the bright part blooms over the whole screen. It’s just like a real camera! =D
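As a small sketch of the "flat multiplier" idea, again as plain C on one channel: expose and display are hypothetical helper names, and the exposure of 8 is just an arbitrary example value.

```c
/* Exposure is a plain multiplier applied to the HDR value. */
static float expose(float hdr, float exposure) {
    return hdr * exposure;
}

/* What the LDR screen can actually show: the value clamped to [0, 1]. */
static float display(float c) {
    if (c < 0.0f) return 0.0f;
    if (c > 1.0f) return 1.0f;
    return c;
}
```

With an exposure of 8, a dark detail at 0.02 becomes a visible 0.16, while a bright spot at 2.0 becomes 16.0 and is displayed as pure white. The bright spot gained far more in absolute terms, which is exactly the "bright spots get brighter by a larger amount" effect from the quote.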

[quote]This should solve the problem of having to change to additive blending when I am rendering a sprite that I want to flash white.

[/quote]

Indeed, just draw it with glColor3f set to a high value, maybe (10, 10, 10) or so. You could also draw it with an alpha of 10, which multiplies each color by 10 and gives the same result as long as your blend function only uses alpha as the source factor. xd

[quote]I am still sort of stuck in the mentality that anything related to glColor must be locked into [0,1] but I guess I’ll just have to slowly get over this.

[/quote]

Get over it, man! You’re too good for LDR!

[quote]Status update on the lighting project: We have everything working correctly with multiple HDR textures, shadow geometry is being drawn (albeit in immediate mode, we still don’t quite get how to do otherwise), and all is fine up until bloom. I’m applying a darkening shader (to remove dark spots) by setting some arbitrary color threshold (say, 1.5 pre-tonemapping), and just doing fragColor = color-threshold, and colors that are below the threshold will simply go to zero (do they actually go negative?). Unfortunately, when I do this for the bloom filter and enable additive blending, the rest of the scene still ends up black somehow (where the non-bright spots are). I think the problem might be my function, because if I can have negative colors stored on my GPU, and I’m using additive blending, I’m actually subtracting color from the dark areas when I add the bloom map to the scene.

[/quote]

Bwahahahaha! Yes, floats can be negative… xD Ahahahahaha…!

You need to clamp each color channel to a minimum of 0, but doing it manually with branches would be silly. Just use the built-in max() function, which clamps each channel individually and fast!

gl_FragColor = max(texture2D(sampler, gl_TexCoord[0].st) - threshold, vec4(0.0));
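To see why the missing max() blacks out the scene, here is a one-channel C sketch of the bright pass followed by additive blending. It assumes the bloom map is a floating-point render target (so negative values survive), and the helper names are invented for this example.

```c
/* Bright pass without the clamp: values below the threshold go negative. */
static float bright_pass_unclamped(float color, float threshold) {
    return color - threshold;
}

/* Bright pass with the clamp, same idea as max(color - threshold, 0.0) in GLSL. */
static float bright_pass(float color, float threshold) {
    float v = color - threshold;
    return v > 0.0f ? v : 0.0f;
}

/* Additive blending: the bloom value is simply added to the scene value. */
static float additive_blend(float scene, float bloom) {
    return scene + bloom;
}
```

For a scene pixel of 0.4 and a threshold of 1.5, the unclamped pass yields about -1.1, so adding it to the scene pushes the pixel below zero and it shows up black. With the clamp the bloom contribution is 0 and the pixel stays at 0.4, while a bright pixel of 2.0 still contributes 0.5 of bloom.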

[quote]Multitexturing is also causing me a bit of a headache. I don’t really know what GLSL version we’re using, but instead of texCoord2D[0], I have to do this weird somewhat hacky way of making a vec2 called tex_coord0, 1, whatever, in my vertex shader, and use that in my Texture2D call along with the sampler. I also have no idea what a uniform sampler2D is, and why it feels like sometimes I need to pass it in from the application and other times openGL just magically knows what that variable is.

[/quote]

Yes, in the latest GLSL versions all non-essential built-in variables have been removed. The only ones that are left are the really necessary ones, like gl_Position and gl_PointSize in vertex shaders. Even gl_FragColor and gl_FragData have been removed, so you have to declare your own output variables instead! I think this is good, as you’ll just have the ones you need.

It is pretty rare to need more than one set of texture coordinates. Mostly you’re sampling the same place on 2+ textures (texture mapping + normal mapping + specular mapping, for example), so there is no need for 2+ sets of texture coordinates as they would be identical. If you’re sampling from two very different textures, like a color texture and a light map, you will however need two sets of texture coordinates.

[quote]I’ve tried glActiveTexture(GL_TEXTURE0), glActiveTexture(GL_TEXTURE1) before each call to bindTexture, so that my shader sees all three textures. So far no luck. I’m not even sure what the final gl_FragColor would be, would it just be the three bloom maps added together? Even though all three textures are a different size, I’m drawing them on top of the same quad (the scene) so it shouldn’t matter.

[/quote]

You might want to call glEnable(GL_TEXTURE_2D); on each active texture unit, but I’m 99.9% sure you don’t have to when you use shaders.

The reason why single texturing works is that sampler uniforms default to 0 (obviously), so all samplers automatically point at the first texture unit when the program is linked. If you want to sample from multiple textures, you need to set the samplers manually with glUniform1i() to different texture units (that’s 0, 1, 2, 3, etc., NOT texture IDs!!!), and bind each texture to its unit with glActiveTexture(GL_TEXTURE0 + unit) followed by glBindTexture().

Really, all the twisted fixed functionality was driving me crazy, so I just started working solely with OpenGL 3.0. Sure, it’s a lot more code to get a single triangle on the screen, but it’s much faster and more elegant for anything more advanced than that. No more glEnable crap exploding all the time! Okay, glEnable(GL_BLEND) but that doesn’t count! T_T

[quote]+100 internets for your post. Thanks again.

[/quote]

Jeez, thanks! I’m gonna hang them on my wall! ;D

EDIT: I forgot one thing. You were complaining about your colors not being vibrant. In this awesome article on tone mapping, one of the commenters complained about exactly the same thing. He also linked(/made?) this article: http://imdoingitwrong.wordpress.com/2010/08/19/why-reinhard-desaturates-my-blacks-3/ It seems very interesting and relevant. Just look at the screenshots!