Yeah… that clears it up a bit. Implementation is a whole other matter for me, though: right now I’m having trouble just converting our scene to use an FBO. Currently we draw a lightmap using copytex2d and save it as an OpenGL texture object; I haven’t gotten to converting this yet. I then create the framebuffer as follows:

ibuf = GLBuffers.newDirectIntBuffer(1);

gl.glGenFramebuffers(1, ibuf);

screenFBO = ibuf.get(0);

tbuf = GLBuffers.newDirectIntBuffer(1);

gl.glGenTextures(1, tbuf);

backbuffer = tbuf.get(0);

gl.glBindTexture(GL2.GL_TEXTURE_2D, backbuffer);

gl.glTexImage2D(GL2.GL_TEXTURE_2D, 0, GL2.GL_RGBA8, 1440, 900, 0, GL2.GL_RGBA, GL2.GL_UNSIGNED_BYTE, null);

gl.glFramebufferTexture2D(GL2.GL_FRAMEBUFFER, GL2.GL_COLOR_ATTACHMENT0, GL2.GL_TEXTURE_2D, backbuffer, 0);

int status = gl.glCheckFramebufferStatus(GL2.GL_FRAMEBUFFER);

System.out.println(status == GL2.GL_FRAMEBUFFER_COMPLETE);

gl.glBindFramebuffer(GL2.GL_FRAMEBUFFER, screenFBO);

This is in our scene’s render method. Later we’ll encapsulate all of the framebuffer references as static instances in our GameWindow, so that we set them up once when the window is initialized and only bind them from the scene’s render method, which should avoid recreating them every frame. This is just for testing, however. The framebuffer checks out as complete, and then I simply render my scene as normal. We use a viewport and gluLookAt to get things to draw in the right place, and render the scene’s images this way. At the end we draw a quad over the scene with the lightmap texture and the blend mode (DST_COLOR, ZERO). Then I execute the following:

gl.glBindFramebuffer(GL2.GL_FRAMEBUFFER, 0);

gl.glBindTexture(GL2.GL_TEXTURE_2D, backbuffer);

gl.glGenerateMipmap(GL2.GL_TEXTURE_2D);

gl.glGenTextures(1, tbuf);

gl.glBindTexture(GL2.GL_TEXTURE_2D, backbuffer);

gl.glTexImage2D(GL2.GL_TEXTURE_2D, 0, GL2.GL_RGBA8, 1440, 9, 0, GL2.GL_RGBA, GL2.GL_UNSIGNED_BYTE, null);

gl.glTexParameterf(GL2.GL_TEXTURE_2D, GL2.GL_TEXTURE_WRAP_S, GL2.GL_CLAMP_TO_EDGE);

gl.glTexParameterf(GL2.GL_TEXTURE_2D, GL2.GL_TEXTURE_WRAP_T, GL2.GL_CLAMP_TO_EDGE);

gl.glTexParameteri(GL2.GL_TEXTURE_2D, GL2.GL_TEXTURE_MAG_FILTER, GL2.GL_LINEAR);

gl.glTexParameteri(GL2.GL_TEXTURE_2D, GL2.GL_TEXTURE_MIN_FILTER, GL2.GL_LINEAR_MIPMAP_LINEAR);

gl.glGenerateMipmap(GL2.GL_TEXTURE_2D);

gl.glBegin(GL2.GL_QUADS);

gl.glTexCoord2d(0.0, 0.0);

gl.glVertex2f(0,0); //bottom left

gl.glTexCoord2d(1.0, 0.0);

gl.glVertex2f(0,1440); //bottom right

gl.glTexCoord2d(1.0, 1.0);

gl.glVertex2f(1440,900 ); //top right

gl.glTexCoord2d(0.0, 1.0);

gl.glVertex2f(0,900); //top left

gl.glEnd();

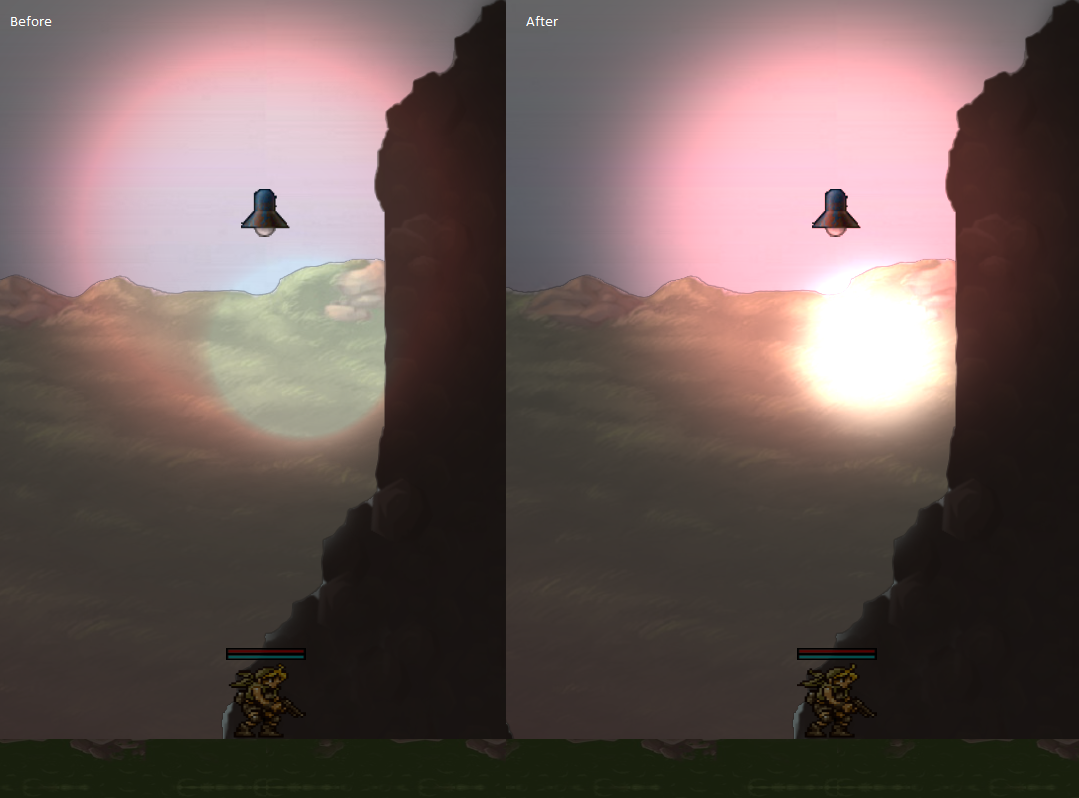

This, I think, draws the contents of the framebuffer to the screen. It’s obviously doing something right, but something about how we’re rendering our scene is preventing it from being displayed along with the lightmap. It’s as though we had GL_BLEND disabled, but we don’t. The lightmap is all that’s displayed at the end.
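For reference, with the blend function set to (DST_COLOR, ZERO) the blend equation reduces to source times destination per channel, so the lightmap quad should darken whatever is already in the color buffer rather than replace it. A minimal sketch of that arithmetic in plain Java, with no GL calls (the class and method names are made up for illustration, and colors are assumed to be normalized floats in [0, 1]):

```java
// Hypothetical helper illustrating the GL_DST_COLOR / GL_ZERO blend equation.
final class MultiplyBlend {
    // result = src * srcFactor + dst * dstFactor, evaluated per RGBA channel
    static float[] blend(float[] src, float[] dst) {
        float[] out = new float[4];
        for (int i = 0; i < 4; i++) {
            float srcFactor = dst[i]; // GL_DST_COLOR: source is scaled by the destination color
            float dstFactor = 0.0f;   // GL_ZERO: destination contributes nothing on its own
            out[i] = Math.min(1.0f, src[i] * srcFactor + dst[i] * dstFactor);
        }
        return out;
    }

    public static void main(String[] args) {
        float[] lightmap = {0.5f, 0.5f, 0.5f, 1.0f}; // incoming quad (source)
        float[] scene = {0.8f, 0.4f, 0.2f, 1.0f};    // already in the buffer (destination)
        System.out.println(java.util.Arrays.toString(blend(lightmap, scene)));
    }
}
```

With blending disabled the source would simply overwrite the destination, which is what the screenshot resembles.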

Your gluLookAt would (probably) just be equivalent to glTranslate(playerX - screenWidth/2, playerY - screenHeight/2). Also, when doing fullscreen passes, it’s easier not to use the matrices at all: load identity on all of them and pass the vertex coordinates as -1 to 1. That will minimize these kinds of errors.
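To illustrate the fullscreen-pass point: with identity modelview and projection matrices, the vertex positions are already normalized device coordinates, and the viewport transform alone maps -1..1 onto the window. A rough sketch of that mapping in plain Java (the class and method names are hypothetical, and the 1440x900 viewport is assumed from the code posted above):

```java
// Hypothetical illustration of a fullscreen quad specified directly in NDC.
final class FullscreenQuad {
    // Each corner is {x, y, s, t}: NDC position followed by texture coordinate.
    static final float[][] CORNERS = {
        {-1f, -1f, 0f, 0f}, // bottom left
        { 1f, -1f, 1f, 0f}, // bottom right
        { 1f,  1f, 1f, 1f}, // top right
        {-1f,  1f, 0f, 1f}, // top left
    };

    // Standard OpenGL viewport transform: NDC in [-1, 1] to window coordinates.
    static float[] toWindow(float xNdc, float yNdc, int vx, int vy, int width, int height) {
        return new float[] {
            (xNdc + 1f) * 0.5f * width + vx,
            (yNdc + 1f) * 0.5f * height + vy
        };
    }

    public static void main(String[] args) {
        for (float[] c : CORNERS) {
            float[] w = toWindow(c[0], c[1], 0, 0, 1440, 900);
            System.out.println("ndc(" + c[0] + ", " + c[1] + ") -> window(" + w[0] + ", " + w[1] + ")");
        }
    }
}
```

In the real draw code each corner would go to glTexCoord2f and glVertex2f after glLoadIdentity has been called on both the projection and modelview matrices, so no window dimensions appear in the quad itself.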
