Been doing a lot of studying. I crossed the one-third point on a Udemy course on using Hibernate to build an eCommerce site with Java. The course has 253 videos, and I just got through number 85. (Maybe I can complete the course by the end of October?)

The Android tutorial course I want to finish has been languishing. In my late teens and early college years, studying multiple subjects at the same time was par for the course. Now, in my dotage, it’s not quite so easy to keep multiple balls in the air.

Am also learning about this forum admin stuff on the fly, e.g., figured out how to move topics a couple days ago, putting some “newbie resources” questions onto the newbie thread.

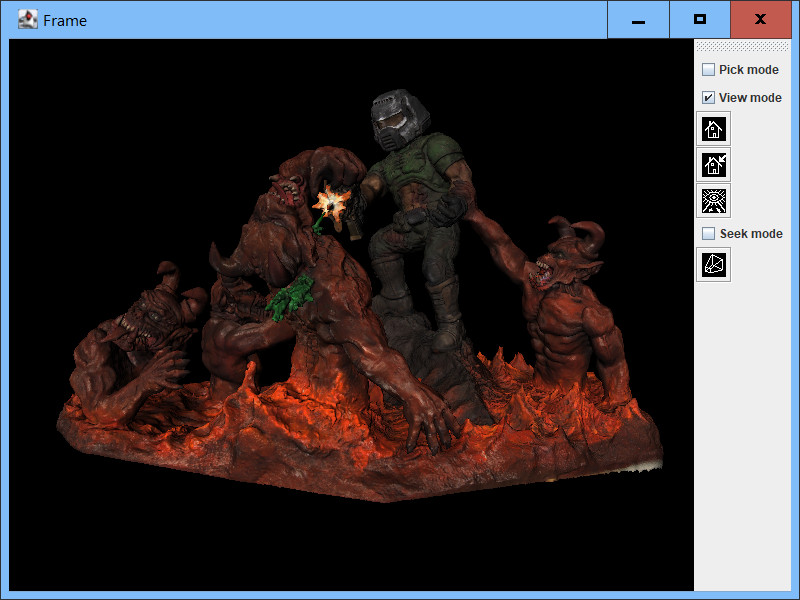

And I’m making progress on a JavaFX project. Just got through a tutorial and chapter on FXML, and feel like I have a pretty solid grip on it now, conceptually. Need to do a bit more hands-on work. This morning I got through a chapter discussing JavaFX Properties. Part of my goal here is to make some custom controls for an application. I want this application to have something of a steampunk look and feel, designed down to its widgets. Maybe not as elaborate as this, but out in that general direction.

This fellow, Yereverluvinunclebert, is some kind of mad genius of design, yes?