Today I met a celebrity by chance. Stumbled across @badlogicgames in a little restaurant.

Today I resumed work on my real-time path tracing implementations, which I started a while ago, and added some demos to the LWJGL/lwjgl3-demos repository. After implementing the first bits of Edge-Avoiding À-Trous Filtering, I found a much more promising technique. No, I am not yet talking about Nvidia’s neural network approach to image reconstruction, but about temporal accumulation of previous samples via screen-space reprojection, which effectively increases the sample count in animations. Previously, whenever the camera moved, all accumulated samples were discarded, which made the image look very noisy during movement.

Reprojection has been used earlier to cache fragment shader calculations in order to reduce run time:

- http://w3.impa.br/~diego/publications/TR-749-06.pdf

- http://cheetah.cs.virginia.edu/~jdl/papers/reproj/amorn_gh08.pdf

Reprojection has also been implemented in https://research.nvidia.com/publication/2017-07_Spatiotemporal-Variance-Guided-Filtering%3A as well as the more recent master's thesis https://dspace.cvut.cz/bitstream/handle/10467/76944/F3-DP-2018-Dundr-Jan-ctutest.pdf submitted to CESCG 2018.

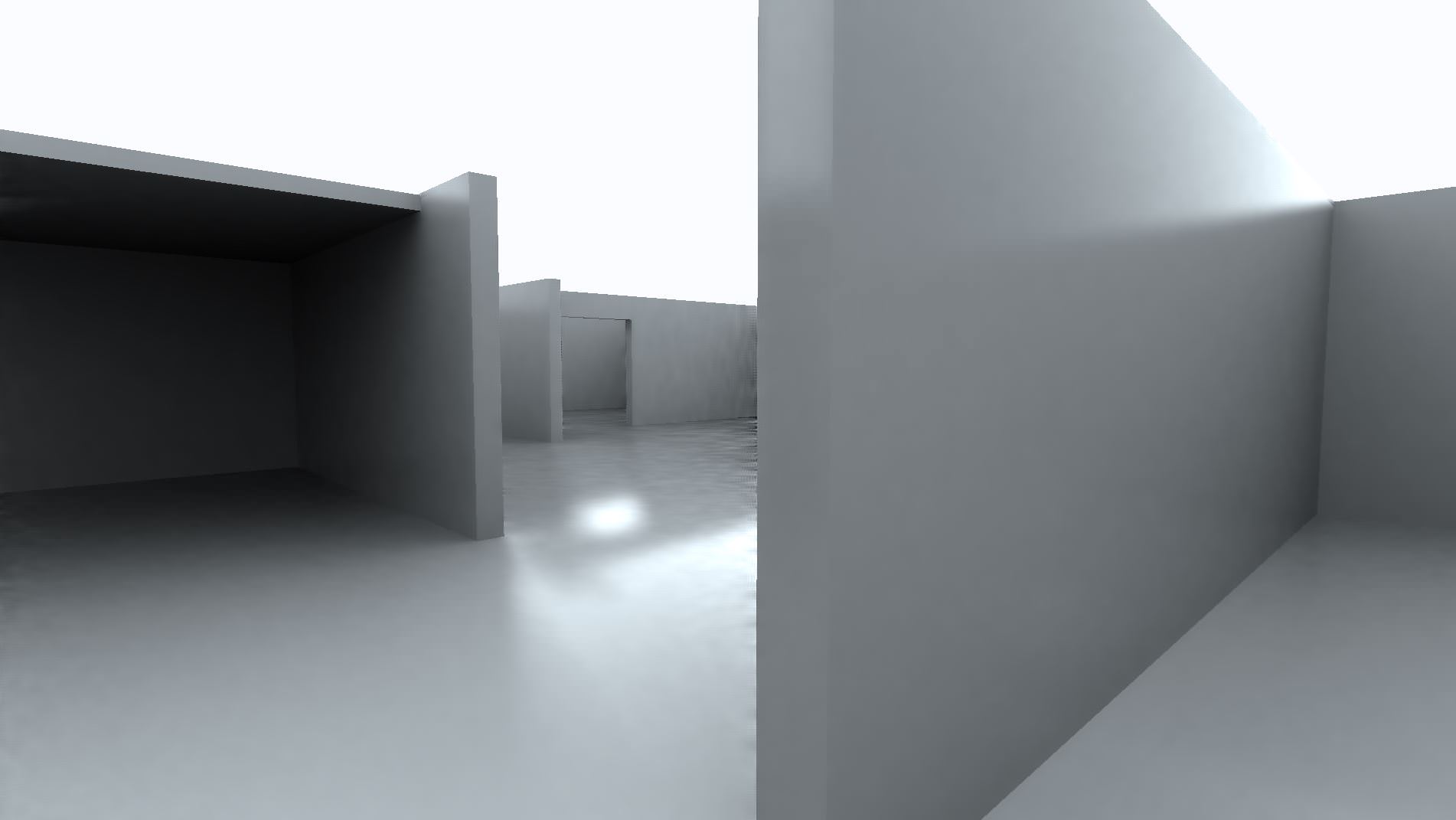

This is a first impression without any sort of spatial filtering (such as variance-guided filtering for the spots of disocclusion where variance is still very high). It just shows accumulation of previous reprojected frames based on the lifetime of that particular sample (time since first disocclusion):

6OpfYU1Mv3E

Bt3XDKrXJY0

-ZiwVIEimRA

v6uqub4IYag

(sorry for the jaggy movements; haven’t implemented smooth camera controls yet)
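The lifetime-based accumulation mentioned above can be sketched roughly like this. It is a minimal CPU-side illustration in Java, not the shader code from the demo: the names `TemporalAccumulation`, `accumulate` and `maxLifetime` are made up, and it assumes the reprojected history color and the sample's lifetime (frames since disocclusion) are already known for the pixel.

```java
/** Hypothetical sketch: per-pixel running average driven by sample lifetime. */
final class TemporalAccumulation {

    /**
     * Blends a newly traced sample into the reprojected history.
     *
     * @param history     reprojected RGB color from the previous frame
     * @param current     newly traced RGB color for this frame
     * @param lifetime    frames this sample has been accumulated so far
     *                    (0 = just disoccluded, history is unusable)
     * @param maxLifetime assumed cap so old history never dominates forever
     * @return the new accumulated RGB color
     */
    static float[] accumulate(float[] history, float[] current, int lifetime, int maxLifetime) {
        int n = Math.min(Math.max(lifetime, 0), maxLifetime);
        // Weight of the new sample: 1 for a fresh sample, 1/(n+1) afterwards.
        // Below the cap this is a running mean of all samples seen so far;
        // at the cap it turns into an exponential moving average.
        float alpha = 1.0f / (n + 1);
        float[] out = new float[3];
        for (int i = 0; i < 3; i++) {
            out[i] = history[i] + alpha * (current[i] - history[i]);
        }
        return out;
    }
}
```

A freshly disoccluded pixel (lifetime 0) simply takes the new sample, which is why those regions stay noisy until they have lived for a few frames.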

Here are some images of specularity based on the Fresnel factor:

The thing with path tracing is that graphics cards would still need to become ~1000 times faster before we could drop all the tricks and simply use 1000 samples per pixel. That is not going to become a reality, so we end up with lots of noise in the traced image, and so far there have only been two promising techniques to get rid of it:

- various image filtering techniques

- image reconstruction (Nvidia proposes to use convolutional neural networks for this)

Since image filtering is basically a solved problem and is absolutely real-time capable, the only thing Nvidia can dramatically improve on is to accelerate image reconstruction using dedicated hardware for neural networks, which is exactly what they are doing with the Tensor Cores.

So I think we will see great improvements in real-time path tracing based global illumination and new rendering engines facilitating this in the near future, such as EA’s SEED project which seems to be the most state-of-the-art right now: https://www.ea.com/seed/news/hpg-2018-keynote

Made a little test showing everything being integrated together.

- Model loading

- Game engine

- Physics engine

- Script engine

- Rendering engine

The idea is that the user can import models via the Assets folder. The final asset object is called a Prefab (created from meshes/materials). You can create a game object in the world, which is backed by a prefab. The prefab is just used for drawing. Then you can add a physics object into the game object. When a physics object is inside a game object it “links” to it, which is where it creates a rigid body representation of that game object in the physics engine. The player game object (in the GIF) contains a controlling script (shown below). That captures user-input, and updates the camera to stay with the player.
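To make the relationship between those pieces a bit more concrete, here is a rough sketch of how such a structure could look. Every class and method name in it (`Prefab`, `GameObject`, `PhysicsObject`, `PhysicsWorld`, `linkTo`, `createRigidBody`) is invented for illustration; it is not the engine's actual API, and the physics engine is replaced by a stub that only records which bodies were requested.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical drawable asset built from meshes/materials; only used for drawing. */
final class Prefab {
    final String name;
    Prefab(String name) { this.name = name; }
}

/** Stand-in for the physics engine: records the rigid bodies it was asked to create. */
final class PhysicsWorld {
    final List<GameObject> rigidBodies = new ArrayList<>();
    void createRigidBody(GameObject owner) { rigidBodies.add(owner); }
}

/** Component that "links" to its game object by creating a rigid body for it. */
final class PhysicsObject {
    private final PhysicsWorld world;
    PhysicsObject(PhysicsWorld world) { this.world = world; }
    void linkTo(GameObject owner) { world.createRigidBody(owner); }
}

/** World object backed by a prefab; physics is attached as a component. */
final class GameObject {
    final Prefab prefab;
    final List<PhysicsObject> components = new ArrayList<>();
    GameObject(Prefab prefab) { this.prefab = prefab; }

    void add(PhysicsObject physics) {
        components.add(physics);
        physics.linkTo(this); // linking happens as soon as the component is added
    }
}
```

The point of the sketch is only the direction of the dependencies: the prefab knows nothing about physics, and the rigid body exists only once a physics object has been placed inside a game object.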

Here’s the controller for the player:

Still brushing up on what’s hot and what’s not in current ray/path tracing computer graphics. There have been some great papers at the High-Performance Graphics 2018 conference. The second-best voted paper “Gradient Estimation for Real-Time Adaptive Temporal Filtering” discusses how the weights for blending between previous frame and current frame in temporal antialiasing can be adapted based on the change in scene color/light information (light bulb going on and off) or through specular reflections in order to avoid the “ghosting” effect which is inherent in temporal antialiasing techniques.

They do this by re-evaluating a path traced sample from a previous frame using the same random number sequence/seed and checking whether the sample still evaluates to the same color/intensity. If not, then obviously something has changed and the previous frame information should be discarded or at least weighted less based on how much the color/light changed (the gradient) and based on material properties (specular reflections should be weighted even less). Quite a clever solution.
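As a rough illustration of the idea (not the paper's exact formulation), the blend weight could be derived from the re-evaluated sample like this. The names `AdaptiveTemporalBlend` and `blendWeight`, the `baseAlpha` parameter and the way `specularity` scales the gradient are all assumptions made for this sketch.

```java
/** Hypothetical sketch: adapt the temporal blend weight from a re-evaluated sample. */
final class AdaptiveTemporalBlend {

    /**
     * @param previousLuminance    luminance the sample had in the previous frame
     * @param reevaluatedLuminance luminance of the same sample re-traced this frame
     *                             with the same random seed
     * @param baseAlpha            weight of the current frame when nothing changed
     * @param specularity          0 = diffuse, 1 = mirror-like; assumed here to make
     *                             the history be trusted even less
     * @return weight of the current frame in [baseAlpha, 1]
     */
    static float blendWeight(float previousLuminance, float reevaluatedLuminance,
                             float baseAlpha, float specularity) {
        float diff = Math.abs(reevaluatedLuminance - previousLuminance);
        // Normalize by the brighter of the two values so the gradient is relative.
        float norm = Math.max(Math.max(previousLuminance, reevaluatedLuminance), 1e-4f);
        float lambda = Math.min(1.0f, (diff / norm) * (1.0f + specularity));
        // lambda = 0 keeps the usual accumulation, lambda = 1 discards the history.
        return baseAlpha + lambda * (1.0f - baseAlpha);
    }
}
```

If the re-traced sample matches the old one, the weight stays at `baseAlpha`; a light switching on or off drives it towards 1, so the stale history is dropped instead of ghosting.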

But all papers are really good and should be checked out: https://www.highperformancegraphics.org/2018/best-paper/

Finished everything on vulkan-tutorial.com - after a couple of weeks. I’m going to need to spend a lot more time thinking about how everything in Vulkan even comes together.

I find that Sascha Willems’ Vulkan examples on GitHub are just pure gold: https://github.com/SaschaWillems/Vulkan/tree/master/examples

This is definitely pure gold. Thank you!

There is an absolute ton of content on here, enough to keep me going for months.

I came across this tutorial just now. Wondering what the best place to post a link to it might be. Should it be on the new Deployment wiki?

It’s for making a cross-platform mobile app with Java/JavaFX. It’s not clear to me yet how much of Java is supported. I think they indicated some of Java 8 won’t work.

How to make a Cross-Platform Mobile App in Java

[quote]Did you know that you can use Java to make cross platform mobile apps? Yes, pinch yourself, you read that right the first time! I’ll teach you the basics of how to use your existing Java knowledge to create performant apps on Android and iOS in 12 easy steps. We will do this all using JavaFX as the GUI toolkit.

[/quote]

Working on the deployment issues has me quite bottled up. I try to push forward with it, but decided not to make that my only Java activity. Since so much time is spent resisting unpleasant tasks, I decided to start giving myself an hour or two of actual coding on other projects instead of allocating all available programming time to deployment issues. Working on the PTheremin today, to set up an experiment for an alternate sound source that makes use of the phase modulation feedback loops (or rather, simulates them). It would be neat, also, to try an experiment I’ve been wanting to do with coding vowel formants. Better that than simply blocking on the unpleasant tasks of setting up a sales site and dealing with certificates and other stuff. Yes?

Still working hard at the next Tutorial8 Path Tracing Demo with temporal and spatial antialiasing. Here are some images of real-time footage at different implementation stages:

1:

2:

3:

4:

5:

1. Importance Sampling (1 sample per pixel, 3 bounces) without temporal or spatial filtering (still camera)

2. (1.) + temporal filtering (still camera)

3. (2.) + edge-avoiding spatial filtering (still camera)

4. (2.) + animation (fast strafe to the left)

5. (3.) + animation (fast strafe to the left)

The newly disoccluded samples in an animation (5.) are faaar less noticeable than the ones in (4.), because of the spatial filtering (edge-avoiding à-trous filter), which in my implementation also takes into account the lifetime of a sample (samples that live longer will be filtered less than newly disoccluded samples to avoid unnecessarily smearing/blurring samples with low variance).
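One simple way to express "filter long-lived samples less" is to blend between the unfiltered and the spatially filtered color based on lifetime. This is only a guess at the general shape, not my actual shader: the names `LifetimeAwareFilter`, `filterStrength` and `fullLifetime` are invented here, and the linear falloff is an assumption.

```java
/** Hypothetical sketch: reduce spatial filtering as a sample's lifetime grows. */
final class LifetimeAwareFilter {

    /**
     * @param lifetime     frames since the sample was disoccluded
     * @param fullLifetime assumed lifetime (> 0) after which no filtering is applied
     * @return 1 for a newly disoccluded sample, falling linearly to 0
     */
    static float filterStrength(int lifetime, int fullLifetime) {
        float t = Math.min(Math.max(lifetime, 0), fullLifetime) / (float) fullLifetime;
        return 1.0f - t;
    }

    /** Mixes the unfiltered color with the output of the edge-avoiding filter. */
    static float[] apply(float[] unfiltered, float[] filtered, int lifetime, int fullLifetime) {
        float s = filterStrength(lifetime, fullLifetime);
        float[] out = new float[3];
        for (int i = 0; i < 3; i++) {
            out[i] = unfiltered[i] + s * (filtered[i] - unfiltered[i]);
        }
        return out;
    }
}
```

Samples that have accumulated for a long time already have low variance, so passing them through mostly untouched avoids blurring detail that temporal accumulation has already resolved.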

Next: Add Nuklear UI to tweak parameters without having to recompile and restart the application and losing previous viewpoints (as is visible in the images - the difference in specular reflection at the top right is not due to filtering but because of different viewpoints causing different Fresnel factors).

Hi guys,

I am looking for a game that was posted here some years ago (at least one).

I can’t remember its name, but some of its story and the game concept:

I think it was top down and had pixel-art.

The game involved a lot of reading and featured many books.

It takes place in your deceased father’s house (he was a writer I think) and you had to find some secrets by reading and combining a lot.

I haven’t played it much though, so I don’t know if the place changes, but the main part took place in only a few rooms with many bookshelves and your father’s desk.

I hope someone can point me to it

EDIT:

Nevermind, I already found it! It’s here if you’re interested

P.S. I did search the featured, showcase, and WIP section plus searching for “book/s” with the search function before posting. No hate please. ;D

Thanks! All primitives are AABBs. I used Blender to model and preview the scene (all primitives are boxes there, too) and exported as Wavefront OBJ. Fortunately, Blender’s OBJ exporter has the option to declare each mesh/object as a separate ‘o’ object and Assimp uses those ‘o’ declarations to mark individual meshes, so I simply used Assimp to load the OBJ file with all separate meshes/objects, computed each individual min,max coordinates and voilà, those were my AABBs for the raytracer (and also the rasterizer - it’s a hybrid implementation).
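Computing the min/max coordinates per mesh is just a pass over its vertices. A minimal sketch, assuming the positions of one mesh have already been copied into a flat `float[]` of x, y, z triples (the class name `Aabb` and the method `fromVertices` are made up for this example and are not part of Assimp or LWJGL):

```java
/** Hypothetical axis-aligned bounding box built from one mesh's vertex positions. */
final class Aabb {
    final float[] min = { Float.POSITIVE_INFINITY, Float.POSITIVE_INFINITY, Float.POSITIVE_INFINITY };
    final float[] max = { Float.NEGATIVE_INFINITY, Float.NEGATIVE_INFINITY, Float.NEGATIVE_INFINITY };

    /**
     * @param xyz vertex positions laid out as x0, y0, z0, x1, y1, z1, ...
     * @return the smallest box containing all given vertices
     */
    static Aabb fromVertices(float[] xyz) {
        if (xyz.length == 0 || xyz.length % 3 != 0) {
            throw new IllegalArgumentException("expected a non-empty array of xyz triples");
        }
        Aabb box = new Aabb();
        for (int i = 0; i < xyz.length; i += 3) {
            for (int axis = 0; axis < 3; axis++) {
                float v = xyz[i + axis];
                box.min[axis] = Math.min(box.min[axis], v);
                box.max[axis] = Math.max(box.max[axis], v);
            }
        }
        return box;
    }
}
```

Since every object in the scene is a box to begin with, the resulting AABB is an exact representation of the mesh rather than just a conservative bound.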

Very nice, do you plan to expand your tracer to trace against triangles with acceleration structures?

I have AABBs for every instance in my engine for occlusion culling, maybe you can give me your tracing code, so that I can test it with my engine O:-)

Sure, I plan to release the code to the LWJGL/lwjgl3-demos repository as with all other tutorials/demos there.

As for acceleration structures, not for this demo. For this one, I wanted to keep everything as simple and accessible as reasonably possible (the current implementation is GL 3.3), concentrating on the filtering aspect, while still being somewhat interesting and extensible (custom scenes). And even without an acceleration structure the implementation is still real-time at 4K.

But I definitely want to explore BVH/LBVH building on the GPU and maybe switching to triangle meshes in later demos.

But you can see an already working demo with triangles and stack-less kd-tree traversal here which renders the Sponza model (although this is definitely not 4K real-time-capable):

- https://github.com/LWJGL/lwjgl3-demos/blob/master/src/org/lwjgl/demo/opengl/raytracing/tutorial/Tutorial7.java

- https://github.com/LWJGL/lwjgl3-demos/blob/master/src/org/lwjgl/demo/opengl/raytracing/tutorial/KDTreeForTutorial7.java

- https://github.com/LWJGL/lwjgl3-demos/blob/master/res/org/lwjgl/demo/opengl/raytracing/tutorial7/raytracing.glsl

Check it out. An animation DSL! Sorry, Cas.

Now you can say Animation.ROBOT.play("walk_then_wait") or ("waiting") or whatever you want without switching between textures. I also released a cool play button animation:

OZ1AYd6OJ5Y

Very nice! If I had one bit of feedback, I’d turn the ticks you use into something that’s more easily modifiable in case you need to change your Updates per Second in the future. What I tend to do on my projects is something like this:

In the game core:

public static final int UPDATES_PER_SECOND = 30;

In animation code:

animationDelay = UPDATES_PER_SECOND/10; //So a tenth of a second, no matter what the tick-rate is

Of course, your system may already handle that, but I just wanted to write this feedback just in case. Having constants for important aspects of any engine is pretty important, in my opinion.

There’s also a more complex version of this where you can use the timestep to determine how long something should take, but the above is easier to remember and more explicit in my opinion.
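For completeness, the timestep-based variant could look roughly like this. It is a sketch with made-up names (`TimedAnimation`, `secondsPerFrame`), assuming the game loop passes the elapsed time of each update in seconds:

```java
/** Hypothetical animation that advances by elapsed time instead of by tick count. */
final class TimedAnimation {
    private final int frameCount;
    private final float secondsPerFrame;
    private float elapsed; // time accumulated since the last frame switch
    private int frame;

    TimedAnimation(int frameCount, float secondsPerFrame) {
        if (frameCount <= 0 || secondsPerFrame <= 0f) {
            throw new IllegalArgumentException("frameCount and secondsPerFrame must be positive");
        }
        this.frameCount = frameCount;
        this.secondsPerFrame = secondsPerFrame;
    }

    /** Call once per update with the time that update represents, in seconds. */
    void update(float deltaSeconds) {
        elapsed += deltaSeconds;
        // A long update may span several animation frames, hence the loop.
        while (elapsed >= secondsPerFrame) {
            elapsed -= secondsPerFrame;
            frame = (frame + 1) % frameCount;
        }
    }

    int currentFrame() {
        return frame;
    }
}
```

With this, the animation speed is stated in seconds, so it stays the same whether the loop runs at 30 or 60 updates per second.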

I do have a constant in the main class that defines the UPS. I don’t plan on changing that, because any lower would mean the game would look choppy and any higher would be too performance intensive. I really appreciate the suggestion though, and that would certainly be the best solution if I were less confident the UPS wasn’t going to be changed.