jar: javavosim.jar

Example audio file: vosimexample.ogg (two jars playing at the same time, same pitch, different numbers of pulses, etc.)

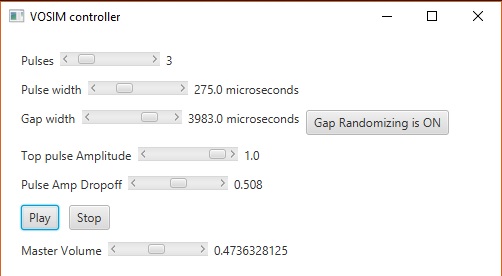

I just programmed a basic working VOSIM synthesizer, or at least the bare minimum of one. I wanted to give it a try because it might prove to be a “cheap” way to get interesting sounds, especially procedural sound effects. Code for the main unit follows. Each call to read() outputs one frame of stereo audio data as PCM in the range [-1…1]. The GUI is just minimal JavaFX. Much remains to be done to make this into something useful: e.g., fixing the “zippering” when changing settings, implementing the various modulation/interpolation pieces described in the main paper, and packaging this in a sensible delivery system of some sort. (The authors later used something called MIDIM, which I have yet to investigate.)

What is VOSIM?

From the Journal of the Audio Engineering Society, June 1978, vol. 26, no. 6:

VOSIM–A New Sound Synthesis System, by W. Kaegi and S. Tempelaars.

[quote]VOSIM (VOice SIMulation) sound synthesis is based on the idea that by employing repeating tone-burst signals of variable pulse duration and variable delay, a sound output of high linguistic and musical expressive power can be obtained. Conventional means such as oscillator and filter banks were therefore entirely dispensed with, basically simpler apparatus being used instead: two tone-burst generators and a mixer. Starting with these rudiments, the aim is an optimal representation of the input data and a progressive application of logical calculus to the sound-synthesis process.[/quote]

The audio output is produced by iterating through a series of N “pulses” of decreasing amplitude, followed by a short stretch of silence. Together, the pulses and the silence make up a single wave period, and the fundamental frequency is the reciprocal of their combined duration. The “pulses” are made from a sin² function. Instead of calculating the sine and squaring it during playback, I use a cursor that progresses through a lookup table (LUT) of 1024 values. (Only inputs from 0 to π radians are needed for the sin² function that creates the pulse, and all of the resulting audio values are positive.)
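For anyone wanting to try the lookup-table approach without the pfaudio library, here is a minimal sketch of a 1024-entry sin² table covering 0 to π, read with a fractional cursor. The class name SinSquaredLut and its methods are hypothetical; this is not the API of the SineSquaredTable class used in the player code (its interface isn't shown here), and linear interpolation between entries is my assumption about how a fractional index might be handled.

```java
/**
 * Hypothetical sin² lookup table for one VOSIM pulse (0 to π radians).
 * Not the pfaudio SineSquaredTable, whose API isn't shown in this post.
 */
final class SinSquaredLut {
    static final int SIZE = 1024;
    private static final float[] TABLE = new float[SIZE];

    static {
        // TABLE[i] = sin²(π * i / SIZE); only the 0..π half-cycle is stored
        for (int i = 0; i < SIZE; i++) {
            double s = Math.sin(Math.PI * i / SIZE);
            TABLE[i] = (float) (s * s);
        }
    }

    /** Linearly interpolated sin²(π * idx / SIZE), for 0 <= idx < SIZE. */
    static float get(float idx) {
        int i = (int) idx;
        float frac = idx - i;
        float a = TABLE[i];
        // sin²(π) == sin²(0) == 0, so the last entry interpolates toward TABLE[0]
        float b = TABLE[(i + 1) % SIZE];
        return a + frac * (b - a);
    }

    /** Renders one pulse lasting widthFrames frames, scaled by amplitude. */
    static float[] renderPulse(double widthFrames, float amplitude) {
        int n = (int) Math.round(widthFrames);
        float[] out = new float[n];
        double incr = SIZE / widthFrames;   // table steps per frame, like pulseIncr
        for (int k = 0; k < n; k++) {
            out[k] = amplitude * get((float) (k * incr));
        }
        return out;
    }
}
```

The cursor step SIZE / widthFrames plays the same role as pulseIncr in the player: a pulse W frames long walks the whole table once.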

I’m not great on the details of the rationale, but it seems that this pulse shape creates a fair number of overtones, and the width of the pulse (together with the factor by which successive pulses decrease in amplitude) determines what they call a “formant”: a region of the spectrum where frequency components are boosted relative to the others.
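One way to check the formant idea numerically is to render a single period and measure each harmonic with a naive DFT. The identity sin²(x) = (1 − cos 2x)/2 hints at why it works: back-to-back sin² pulses of width T are a DC offset plus a cosine at 1/T, and the decaying amplitudes and the gap spread that energy over neighboring harmonics of the fundamental. In this sketch (hypothetical class VosimSpectrum; pulse and gap lengths in whole frames for simplicity), N = 4 pulses of 40 frames, a 40-frame gap and b = 0.8 give a 200-frame period (220.5 Hz at 44.1 kHz), so 1/T falls on harmonic 200 / 40 = 5 (1102.5 Hz).

```java
/** Hypothetical helper: renders one VOSIM period and measures its harmonics with a naive DFT. */
final class VosimSpectrum {
    /** One period: nPulses sin² pulses of pulseFrames each, amplitudes b^i, then gapFrames of silence. */
    static double[] renderPeriod(int nPulses, int pulseFrames, int gapFrames, double b) {
        double[] x = new double[nPulses * pulseFrames + gapFrames];
        double amp = 1.0;
        for (int p = 0; p < nPulses; p++) {
            for (int m = 0; m < pulseFrames; m++) {
                double s = Math.sin(Math.PI * m / pulseFrames);
                x[p * pulseFrames + m] = amp * s * s;
            }
            amp *= b;   // each pulse is b times the previous one
        }
        return x;       // the trailing gap frames are already 0.0
    }

    /** Magnitude of harmonic k, given exactly one period of a periodic signal (DFT bin k). */
    static double harmonicMagnitude(double[] period, int k) {
        double re = 0, im = 0;
        int n = period.length;
        for (int t = 0; t < n; t++) {
            double phase = -2 * Math.PI * k * t / n;
            re += period[t] * Math.cos(phase);
            im += period[t] * Math.sin(phase);
        }
        return Math.hypot(re, im);
    }

    /** Index of the strongest harmonic in 1..maxK, ignoring DC (k = 0). */
    static int strongestHarmonic(double[] period, int maxK) {
        int best = 1;
        double bestMag = -1;
        for (int k = 1; k <= maxK; k++) {
            double mag = harmonicMagnitude(period, k);
            if (mag > bestMag) {
                bestMag = mag;
                best = k;
            }
        }
        return best;
    }
}
```

With those settings, `VosimSpectrum.strongestHarmonic(VosimSpectrum.renderPeriod(4, 40, 40, 0.8), 50)` returns 5, i.e. the strongest non-DC harmonic is the one nearest the pulse rate 1/T.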

AFAIK (guessing), a true pulse would be too rich, probably leading to aliasing or some other problem, and a plain sine “pulse” wouldn’t have enough harmonics for the “formant” to matter. I will have to experiment to learn more.

The default setting in the jar is the first one described in the linked article: the vowel /a/ (or at least, a tone with the first formant of this vowel).

If you vary the “gap width” (the article calls this M), the pitch changes but the formant region stays in the same frequency range. If you vary the amplitude dropoff factor (the article calls this “b”), you can hear the formant region move in frequency. I’m still figuring this out. Changing the pulse count (N) or width (T) also seems to shift the formant, but it alters the main pitch at the same time. So for either to be used as a timbre control, a compensating change has to be made to the silent gap width to maintain the wave’s period.
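The compensation is simple arithmetic: one period lasts N·T + M, so f0 = 1 / (N·T + M), and holding the pitch while changing N or T means setting M = period − N·T. A small sketch of that bookkeeping, with hypothetical class and method names and all times in microseconds (the same units the player's setters take):

```java
/** Hypothetical helpers for VOSIM timing; all times are in microseconds. */
final class VosimTiming {
    /** Length of one VOSIM period: N pulses of width T followed by the gap M. */
    static double periodMicros(int n, double tMicros, double mMicros) {
        return n * tMicros + mMicros;
    }

    /** Fundamental frequency in Hz: the reciprocal of the period. */
    static double fundamentalHz(int n, double tMicros, double mMicros) {
        return 1_000_000.0 / periodMicros(n, tMicros, mMicros);
    }

    /**
     * Gap M that keeps a target period after N or T has changed.
     * Throws if the pulses alone already exceed the target period.
     */
    static double compensatingGapMicros(int n, double tMicros, double targetPeriodMicros) {
        double m = targetPeriodMicros - n * tMicros;
        if (m < 0) {
            throw new IllegalArgumentException("pulses exceed the target period");
        }
        return m;
    }
}
```

For example, N = 4, T = 1000 µs and M = 1000 µs give a 5000 µs period (200 Hz); dropping to N = 3 needs M = 2000 µs to stay at 200 Hz.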

Vowels are said to be recognizable when one can hear the first two formants. This implies that at least two of these VOSIM generators would need to work in tandem to reproduce a vowel. The authors claim musical instruments can be synthesized with three generators (three formants), but I regard this as somewhat dubious. I suspect you might be able to get sounds that “sound like” they have qualities of a particular musical instrument, but nothing that anyone would mistake for the real thing.
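Running two generators in tandem could look something like this sketch, which sums two voices that share the same period (and therefore the same pitch) but use different pulse widths, so their formant regions sit at different places, roughly near the pulse rates 44100/60 = 735 Hz and 44100/20 = 2205 Hz. VosimVoice and TwoFormantMix are hypothetical, frame-based simplifications (whole-frame pulse widths, no LUT), and the parameter values are illustrative only, not formant data for any particular vowel.

```java
/** Hypothetical minimal VOSIM voice with whole-frame pulse and gap lengths. */
final class VosimVoice {
    private final int nPulses, pulseFrames, gapFrames;
    private final double b;   // amplitude dropoff per pulse
    private int pos;          // frame position within the current period

    VosimVoice(int nPulses, int pulseFrames, int gapFrames, double b) {
        if (nPulses < 1 || pulseFrames < 1 || gapFrames < 0) {
            throw new IllegalArgumentException("bad VOSIM parameters");
        }
        this.nPulses = nPulses;
        this.pulseFrames = pulseFrames;
        this.gapFrames = gapFrames;
        this.b = b;
    }

    int periodFrames() {
        return nPulses * pulseFrames + gapFrames;
    }

    /** Next mono sample: a sin² pulse scaled by b^p, or 0 during the gap. */
    double next() {
        double out = 0.0;
        if (pos < nPulses * pulseFrames) {
            int p = pos / pulseFrames;
            double s = Math.sin(Math.PI * (pos % pulseFrames) / pulseFrames);
            out = Math.pow(b, p) * s * s;
        }
        pos = (pos + 1) % periodFrames();
        return out;
    }
}

final class TwoFormantMix {
    /** Sums two voices at equal gain; they must share a period to stay at one pitch. */
    static double[] render(VosimVoice f1, VosimVoice f2, int frames) {
        if (f1.periodFrames() != f2.periodFrames()) {
            throw new IllegalArgumentException("voices must share a period");
        }
        double[] out = new double[frames];
        for (int i = 0; i < frames; i++) {
            out[i] = 0.5 * (f1.next() + f2.next());
        }
        return out;
    }
}
```

Here `new VosimVoice(2, 60, 80, 0.8)` and `new VosimVoice(5, 20, 100, 0.7)` both have a 200-frame period, so their sum repeats every 200 frames; keeping the periods equal is exactly the compensating-gap constraint from the previous paragraph.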

Very intriguing is the idea of randomly varying the silent-portion width (M) to create noise with a formant. I haven’t experimented with this yet. This could be a computationally cheap way to get some nice percussion effects or noise-based sound effects. But if you go to this much trouble, you might also ask yourself: why not just use a software filter, which is easier to understand and control?
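A first experiment might be as simple as re-drawing M at random at the start of every period, keeping the pulse train (and so, presumably, the formant region) fixed while the period jitters. In this sketch the class NoisyVosim is hypothetical, and the uniform distribution of the gap between minGap and maxGap frames is my assumption, not something taken from the paper.

```java
import java.util.Random;

/**
 * Hypothetical sketch: a VOSIM voice whose silent gap M is re-drawn
 * uniformly at random (in whole frames) at the start of every period.
 */
final class NoisyVosim {
    private final int nPulses, pulseFrames, minGap, maxGap;
    private final double b;          // amplitude dropoff per pulse
    private final Random rng;
    private int pos;                 // frame position within the current period
    private int gapFrames;           // M for the current period

    NoisyVosim(int nPulses, int pulseFrames, int minGap, int maxGap, double b, long seed) {
        if (nPulses < 1 || pulseFrames < 1 || minGap < 0 || maxGap < minGap) {
            throw new IllegalArgumentException("bad VOSIM parameters");
        }
        this.nPulses = nPulses;
        this.pulseFrames = pulseFrames;
        this.minGap = minGap;
        this.maxGap = maxGap;
        this.b = b;
        this.rng = new Random(seed);
        this.gapFrames = drawGap();
    }

    /** Uniform random gap length in [minGap, maxGap] frames. */
    private int drawGap() {
        return minGap + rng.nextInt(maxGap - minGap + 1);
    }

    /** Next mono sample; like the post's output, all values are >= 0. */
    double next() {
        int pulseSpan = nPulses * pulseFrames;
        double out = 0.0;
        if (pos < pulseSpan) {
            int p = pos / pulseFrames;
            double s = Math.sin(Math.PI * (pos % pulseFrames) / pulseFrames);
            out = Math.pow(b, p) * s * s;
        }
        pos++;
        if (pos >= pulseSpan + gapFrames) {
            pos = 0;
            gapFrames = drawGap();   // new random M for the next period
        }
        return out;
    }
}
```

Passing a fixed seed makes a given effect repeatable, which could be handy for procedural sound effects; a narrow gap range gives a rough pitched buzz, a wide one something noisier.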

```java
import com.adonax.pfaudio.core.PFMixerTrack;
import com.adonax.pfaudio.core.PFPeekable;
import com.adonax.pfaudio.functions.VolumeMap;
import com.adonax.pfaudio.functions.VolumeMapMaker;
import com.adonax.pfaudio.synthParts.SineSquaredTable;

public class VosimPlayer implements PFMixerTrack, PFPeekable
{
    private boolean running;

    @Override
    public boolean isRunning() { return running; }

    @Override
    public void setRunning(boolean running)
    {
        if (running)
        {
            resetVariables();
            this.running = true;
        }
        else
        {
            this.running = false;
        }
    }

    private float[] synthBuffer;         // one stereo frame: [left, right]

    // Pending settings (written by the setters) and the copies used for
    // the current period (cur*), swapped in by resetVariables().
    private int nPulses = 1, curNPulses; // at least one pulse; read() uses ampValues[0]
    private int gapFrames, curGapFrames;
    private int gapFrameCtr;             // frames left in the current silent gap
    private float topPulseAmplitude, curTopPulseAmplitude;
    private float amplitudeDropoff, curAmplitudeDropoff;

    private float pulseIncr;             // LUT steps advanced per frame
    private float idx;                   // cursor into the 1024-entry sin² table
    private boolean inPulses;            // true while pulsing, false during the gap

    private float masterVolume;
    private VolumeMap volumeMap;
    private boolean updateRequired;

    private float[] ampValues;           // precomputed amplitude of each pulse
    private int ampValuesIdx;            // index of the pulse being played

    public void setNPulses(int nPulses)
    {
        // Clamp to one pulse minimum so ampValues is never empty.
        this.nPulses = Math.max(1, nPulses);
        updateRequired = true;
    }

    public void setPulseWidth(double pulseWidthMicroseconds)
    {
        // The table holds 1024 steps for a single pulse (0 to π).
        // At 44100 fps there are 0.0441 frames per microsecond, so a pulse
        // lasts 0.0441 * width frames and the cursor must advance
        // 1024 / (0.0441 * width) steps per frame.
        pulseIncr = (float)(1024 / (0.0441 * pulseWidthMicroseconds));
        updateRequired = true;
    }

    public void setMGapWidth(double mGapMicroseconds)
    {
        // Silent gap M, converted from microseconds to frames at 44100 fps.
        gapFrames = (int)Math.round(mGapMicroseconds * 0.0441);
        updateRequired = true;
    }

    public void setTopPulseAmplitude(double topPulseAmplitude)
    {
        this.topPulseAmplitude = (float)topPulseAmplitude;
        updateRequired = true;
    }

    public void setAmplitudeDropoff(double amplitudeDropoff)
    {
        this.amplitudeDropoff = (float)amplitudeDropoff;
        updateRequired = true;
    }

    protected volatile float volIncr;
    protected volatile int volTargetSteps;

    public void setMasterVolume(double targetVolume)
    {
        // Ramp to the new volume over 1024 steps to avoid clicks.
        volIncr = (volumeMap.get(targetVolume) - masterVolume) / 1024;
        volTargetSteps = 1024;
    }

    public VosimPlayer()
    {
        synthBuffer = new float[2];
        volumeMap = new VolumeMap(VolumeMapMaker.makeX3VolumeMap());
    }

    @Override
    public float[] read()
    {
        if (inPulses)
        {
            idx += pulseIncr;
            // set up for next pulse?
            if (idx >= 1024)
            {
                idx -= 1024;
                ampValuesIdx++;
                if (ampValuesIdx >= curNPulses)
                {
                    // last pulse finished: switch to the silent gap
                    inPulses = false;
                    curGapFrames = gapFrames;
                    synthBuffer[0] = 0;
                    synthBuffer[1] = 0;
                    return synthBuffer;
                }
            }
        }
        else // in silent gap portion
        {
            if (gapFrameCtr-- > 0)
            {
                synthBuffer[0] = 0;
                synthBuffer[1] = 0;
                return synthBuffer;
            }
            else
            {
                // gap finished: start the next period
                resetVariables();
            }
        }

        if (volTargetSteps > 0)
        {
            volTargetSteps--;
            masterVolume += volIncr;
        }

        synthBuffer[0] = SineSquaredTable.get(idx) * ampValues[ampValuesIdx];
        synthBuffer[0] *= masterVolume;
        // no panning implemented yet
        synthBuffer[1] = synthBuffer[0];
        return synthBuffer;
    }

    private void resetVariables()
    {
        // New settings are picked up only at the start of a period.
        // The null check covers starting playback before any setter was called.
        if (updateRequired || ampValues == null)
        {
            updateRequired = false;
            curNPulses = nPulses;
            curGapFrames = gapFrames;
            curTopPulseAmplitude = topPulseAmplitude;
            curAmplitudeDropoff = amplitudeDropoff;

            // Pulse i has amplitude top * dropoff^i.
            ampValues = new float[curNPulses];
            for (int i = 0; i < curNPulses; i++)
            {
                ampValues[i] = curTopPulseAmplitude *
                        (float)Math.pow(curAmplitudeDropoff, i);
            }
        }

        gapFrameCtr = curGapFrames;
        ampValuesIdx = 0;
        inPulses = true;
    }

    @Override
    public float[] peek()
    {
        return synthBuffer;
    }
}
```
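On code improvements: the setters are presumably called from the JavaFX thread while read() runs on the audio thread, and the fields they write (nPulses, gapFrames, pulseIncr, updateRequired and so on) are plain, non-volatile fields. Under the Java memory model the audio thread isn't guaranteed to see a complete, consistent set of new values. One option is to build an immutable parameter snapshot on the GUI thread and hand it over through an AtomicReference, letting the audio thread pick it up only at the start of a period, much as resetVariables() already does with updateRequired. VosimParams and VosimParamBox are hypothetical names; the pulseIncr and gapFrames arithmetic mirrors setPulseWidth() and setMGapWidth(), with the sample rate made a parameter instead of the hard-coded 44100.

```java
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical immutable snapshot of one complete VOSIM parameter set. */
final class VosimParams {
    final int nPulses;
    final float pulseIncr;    // LUT steps per frame, as in setPulseWidth()
    final int gapFrames;      // silent gap M in frames, as in setMGapWidth()
    final float[] ampValues;  // amplitude of each pulse; not modified after construction

    VosimParams(int nPulses, double pulseWidthMicros, double gapMicros,
                double topAmplitude, double dropoff, double sampleRate) {
        if (nPulses < 1) {
            throw new IllegalArgumentException("need at least one pulse");
        }
        double framesPerMicro = sampleRate / 1_000_000.0;
        this.nPulses = nPulses;
        this.pulseIncr = (float) (1024 / (framesPerMicro * pulseWidthMicros));
        this.gapFrames = (int) Math.round(gapMicros * framesPerMicro);
        this.ampValues = new float[nPulses];
        for (int i = 0; i < nPulses; i++) {
            ampValues[i] = (float) (topAmplitude * Math.pow(dropoff, i));
        }
    }
}

/** Hypothetical single-slot mailbox between the GUI thread and the audio thread. */
final class VosimParamBox {
    private final AtomicReference<VosimParams> pending = new AtomicReference<>();

    /** GUI thread: publish a complete new parameter set in one step. */
    void publish(VosimParams p) {
        pending.set(p);
    }

    /** Audio thread: call at the start of each period; returns null if nothing changed. */
    VosimParams takeIfChanged() {
        return pending.getAndSet(null);
    }
}
```

In read(), the branch that calls resetVariables() when the gap ends would instead call takeIfChanged() and, if the result is non-null, switch to the new snapshot. The audio thread then never sees a half-updated mix of old and new settings, and no locks are taken in the audio loop.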

I’m curious whether anyone has played around with this. I think it has been implemented on various more involved synth systems.

As always, any suggestions on code improvements are welcome.