Hello, everyone!

I thought I’d write a small long-ass post about the tile rendering system I’ve developed for Robot Farm. The tile rendering in RF ended up being quite complex, as we needed to keep memory usage down and performance up, so this post will chronicle some of the improvements that were made over time. Let’s dive right into it!

Memory management

Our worlds can be quite huge. Our biggest worlds are up to 7680x5120 (= ~40 million) tiles big, and each tile in the world has a terrain tile and a detail tile. In addition, we also need to store pathfinding data for tiles. This can end up being quite a huge amount of data. In the beginning, we simply tried to brute-force it by keeping everything in memory. 7680x5120 x (two ints + pathfinding Node object) = over 300MB just for the tile data, and another gigabyte for the pathfinding data. NOPE, we can’t have that.

The first attempt to minimize memory usage was to add chunking to the data. We split the world up into 32x32 chunks, and only kept a certain number of chunks in memory; the rest were written out as compressed files to the harddrive. This caused a number of issues though. The chunk management suddenly became very complex, and now we needed to involve the harddrive whenever we needed to load some data. With some unlucky harddrive response times, we could get significant spikes that were very noticeable when travelling quickly. The technique was also pretty hard on the harddrive itself, because we ended up having thousands upon thousands of tiny chunk files saved to the harddrive. You could almost feel the lifetime of your harddrive being drained each time you ran the game. >___>
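To make the numbers above concrete, here is a small back-of-the-envelope sketch. It assumes 4-byte ints for the terrain and detail tiles and reads "32x32 chunks" as chunks of 32x32 tiles; the class and method names are made up for illustration and are not from the RF code base.

```java
final class WorldMemoryEstimate {
    static final int WORLD_WIDTH = 7680;   // tiles
    static final int WORLD_HEIGHT = 5120;  // tiles
    static final int CHUNK_SIZE = 32;      // assumption: chunks are 32x32 tiles

    /** Total number of tiles in the biggest world. */
    static long tileCount() {
        return (long) WORLD_WIDTH * WORLD_HEIGHT;
    }

    /** Raw size of the tile data: one terrain int and one detail int per tile. */
    static long rawTileBytes() {
        return tileCount() * 2L * Integer.BYTES;
    }

    /** Number of chunks (and therefore chunk files) needed to cover the world. */
    static long chunkCount() {
        return (long) (WORLD_WIDTH / CHUNK_SIZE) * (WORLD_HEIGHT / CHUNK_SIZE);
    }

    public static void main(String[] args) {
        System.out.println("tiles:  " + tileCount());     // 39321600
        System.out.println("bytes:  " + rawTileBytes());  // 314572800
        System.out.println("chunks: " + chunkCount());    // 38400
    }
}
```

Under those assumptions the tile data alone is about 315 million bytes, and a fully explored world would be split over tens of thousands of chunk files, which matches the "thousands upon thousands of tiny files" problem.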

In an attempt to alleviate this, I added “super chunks”. Super chunks were a bundle of 4x4 normal chunks that were loaded and unloaded together. This cut the number of files down drastically, helped the harddrive cope with the constant reading, and reduced the amount of stuttering we got, since more data was read at a time. However, it also had some very big drawbacks. The stuttering happened less often, but when it happened the freeze was noticeably longer as more data was read at the same time. In addition, the new system didn’t handle random access very well. Even if just a single tile was needed, an entire super chunk had to be read from disk. We had several world configurations where we blew through the super chunk budget due to NPCs needing tile data for certain operations all around the world, causing thrashing and literally 0.1 FPS cases. Not a fun time. There was also quite a lot of overhead when you just needed to access a tile: get the super chunk for that tile, get the chunk for that tile, get the tile. In the end, if we wanted to eliminate stuttering completely, we needed to eliminate the harddrive from the equation altogether, and preferably store chunks individually as well.

This is where I hatched the idea of compressing the chunk tile data to RAM instead of to the harddrive. Simply DEFLATE-ing the data using Deflater or GZIPOutputStream made it much smaller, as the terrain tiles are very continuous and the detail tiles are mostly 0 (= nothing there). So, instead of compressing the data and writing the result to disk, I simply compressed the data to a byte[] and kept the byte[] in memory. To access the data, I need to run it through Inflater again to get the data back, but with no disk access required, this only took around 0.1ms per chunk! Most of the time, this reduced the size of the tile data by a factor of 10x to 20x, making the tile data memory usage trivial. And with the harddrive access gone, there was no reason to keep the super chunks around anymore, so they could simply be removed.
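A minimal sketch of what compressing a chunk to RAM could look like with `java.util.zip.Deflater` and `Inflater`. The chunk layout (32x32 tiles, terrain ints followed by detail ints) and the `CompressedChunk` class are assumptions for illustration, not the actual RF implementation.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

final class CompressedChunk {
    static final int CHUNK_SIZE = 32; // assumption: 32x32 tiles per chunk
    static final int INTS_PER_CHUNK = CHUNK_SIZE * CHUNK_SIZE * 2; // terrain + detail

    /** DEFLATEs the tile ints into a byte[] that is simply kept in memory. */
    static byte[] compress(int[] tiles) {
        ByteBuffer raw = ByteBuffer.allocate(tiles.length * Integer.BYTES);
        raw.asIntBuffer().put(tiles);

        Deflater deflater = new Deflater();
        deflater.setInput(raw.array());
        deflater.finish();

        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[1024];
        while (!deflater.finished()) {
            int written = deflater.deflate(buffer);
            out.write(buffer, 0, written);
        }
        deflater.end(); // release native zlib memory
        return out.toByteArray();
    }

    /** Inflates a compressed chunk back into intCount tile ints. */
    static int[] decompress(byte[] compressed, int intCount) {
        byte[] raw = new byte[intCount * Integer.BYTES];
        Inflater inflater = new Inflater();
        inflater.setInput(compressed);
        try {
            int offset = 0;
            while (offset < raw.length && !inflater.finished()) {
                int read = inflater.inflate(raw, offset, raw.length - offset);
                if (read == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    break; // truncated or unexpected input
                }
                offset += read;
            }
            if (offset != raw.length) {
                throw new IllegalStateException("Compressed chunk has unexpected size");
            }
        } catch (DataFormatException e) {
            throw new IllegalStateException("Corrupt chunk data", e);
        } finally {
            inflater.end();
        }
        int[] tiles = new int[intCount];
        ByteBuffer.wrap(raw).asIntBuffer().get(tiles);
        return tiles;
    }
}
```

A chunk would then be stored as `byte[] packed = CompressedChunk.compress(tiles)` and restored with `CompressedChunk.decompress(packed, CompressedChunk.INTS_PER_CHUNK)` whenever a tile in it is needed. In a real system you would probably reuse the Deflater/Inflater instances and the scratch buffers instead of allocating them per call.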

Pathfinding data was another big problem here, but I actually already have a post for that! If you’re interested in how that was solved, look here: http://www.java-gaming.org/index.php?;topic=38207.0. TL;DR: I got rid of the Node object and managed to barely pack all the pathfinding data of a node into the bits of a 32-bit integer. I then added a separate chunking system to it so that int “nodes” were only allocated (and reused) for areas that the pathfinder explored. This meant that less than a quarter of the world needed pathfinding data at any single time, and cut the memory usage from over 1GB to just 50MB on average.

Tile transitions

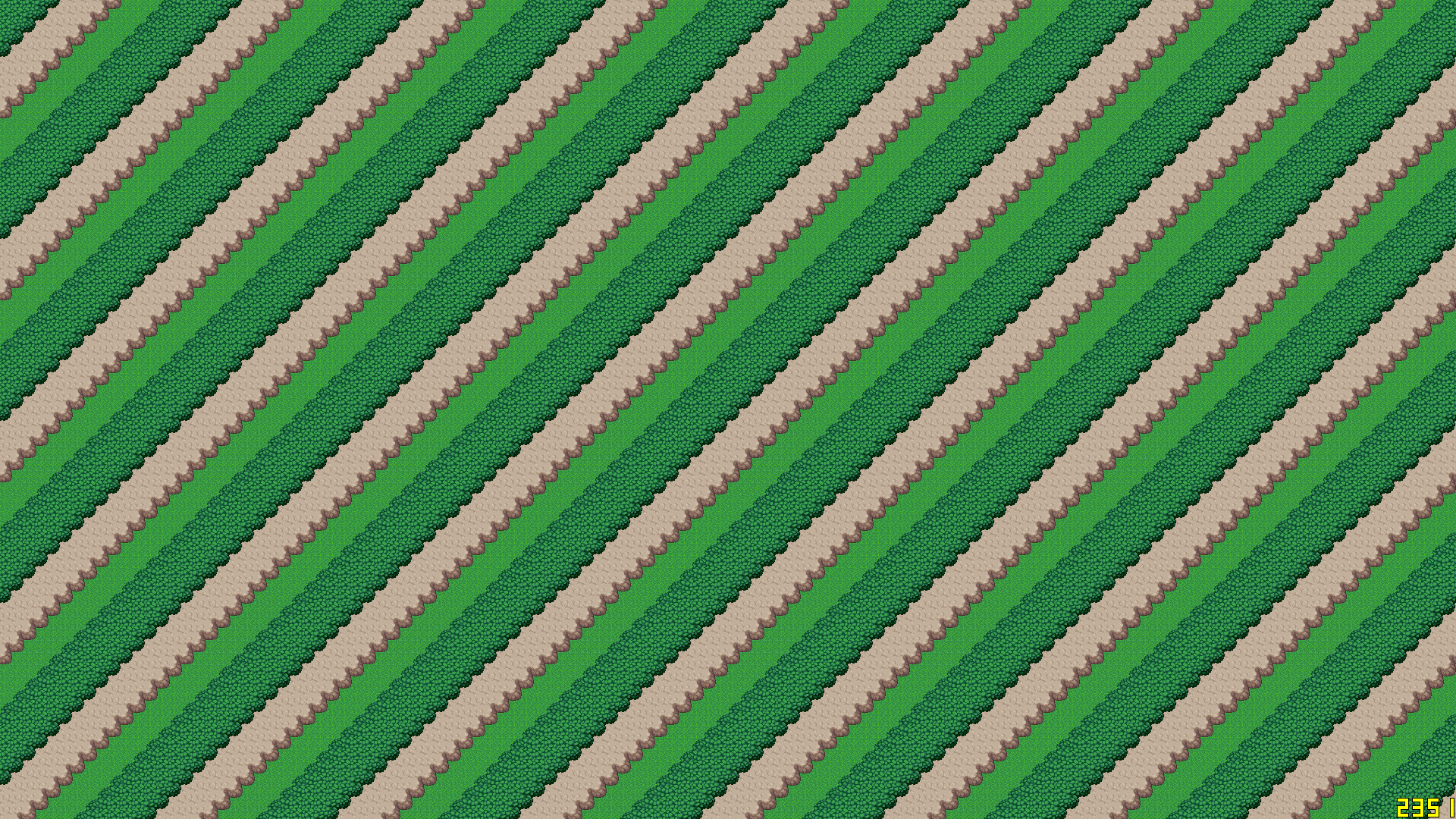

In a tile-based game, tile transitions are almost always a must-have. Take a look at these two image from this very popular tile transition tutorial:

The left one just draws the tiles as they are, while the right one has tile transitions added to the rendering. The tile transitions add, well, transitions between different terrain types so that the world doesn’t look like it’s restricted as much to a grid anymore. However, they’re quite complicated to both make and render. The transitions to add to a tile depend on all 8 neighbors of the tile. This leads to 2^8=256 possible combinations, but this can be reduced by combining multiple non-overlapping transitions to cover all cases. Still, a large number of transitions need to be drawn by our pixel artists for every single terrain type. Also, as you can see in the image above, the transitions have to be very thin to not completely occlude the neighboring tile. This limits the “effectiveness” and variation of the transitions.

We already had a transition system in place when I started working on Robot Farm, but it had some very serious problems. First of all, it required some very complicated neighbor checks for multiple terrain types that actually were so slow that we had big CPU performance issues from them when rendering to high resolution screens (as they could see more tiles). I added a couple of hacks to improve performance by caching neighbor tiles between checks, and I even added a full-world BitSet with one bit per tile just to track which tiles needed the transition checks. Even so, we had big problems with tile transitions overlapping each other, as the transition tiles we already had were often too big to work well with that system. Hence, I started working with our pixel artist to improve the tile rendering. The project had two goals: minimize the number of transitions needed (and hence the amount of work for our pixel artist) and maximize rendering performance of those transitions.

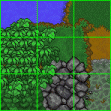

To reduce the number of transitions needed, we needed to reduce the number of tiles that can affect the look of the transitions. Ignoring the diagonal neighbors seemed like a promising idea, but it fell flat as it could not detect certain cases correctly. Instead, I shifted my focus to a brand new idea… Enter half-offset tile transitions!

The idea is pretty simple. Instead of drawing one tile image per tile in the world, we offset the tile rendering by half a tile as the image shows. Suddenly, the rendered tile is no longer a function of 8 neighbors (plus itself), but just the 2x2=4 tiles that it intersects! That’s only 2^4=16 combinations, of which one is nothing (none of the 4 tiles correspond to the terrain type) and one is fully covered, so only 14 transition tiles are needed per terrain type, a big improvement! I haven’t been able to come up with another technique that provides the same quality AND simplicity as this technique!

Even better, this technique is perfect for being rendered on the GPU. First of all, by cleverly ordering the transition tiles in the tileset, you can calculate exactly which transition to pick using bitwise operations. Each of the four neighbors can be mapped to one of the lowest four bits of an integer, giving us the tile transition index we need without any complicated special cases, transition merging, etc! On top of that, we have a clear upper bound on the number of overlapping transitions: four, which happens when every single tile is different in a 2x2 area. This is a GREAT feature because it means that we only need to store at most four tile indices for rendering to be able to handle all cases, while the 8-neighbors version would require up to eight tile transitions.
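Here is a rough sketch of how the 4-bit transition index could be computed for one half-offset cell. The bit assignment (top-left = bit 0, and so on) and the class name are assumptions; any fixed order works as long as the tileset is laid out to match. How the layers are ordered by terrain priority is left out.

```java
import java.util.Arrays;

final class HalfOffsetTransitions {
    // Hypothetical bit assignment for the four tiles a half-offset cell overlaps.
    static final int TOP_LEFT = 1, TOP_RIGHT = 2, BOTTOM_LEFT = 4, BOTTOM_RIGHT = 8;

    /** 4-bit mask telling which of the 2x2 tiles have the given terrain type. */
    static int transitionMask(int terrain, int tl, int tr, int bl, int br) {
        int mask = 0;
        if (tl == terrain) mask |= TOP_LEFT;
        if (tr == terrain) mask |= TOP_RIGHT;
        if (bl == terrain) mask |= BOTTOM_LEFT;
        if (br == terrain) mask |= BOTTOM_RIGHT;
        return mask; // 0 = terrain absent, 15 = fully covered, 1..14 = transition tile
    }

    /** Returns one {terrain, mask} pair per distinct terrain in the 2x2 area (at most four). */
    static int[][] layersFor(int tl, int tr, int bl, int br) {
        int[] corners = {tl, tr, bl, br};
        int[][] layers = new int[4][];
        int count = 0;
        outer:
        for (int i = 0; i < corners.length; i++) {
            for (int j = 0; j < i; j++) {
                if (corners[j] == corners[i]) continue outer; // terrain already handled
            }
            layers[count++] = new int[] {corners[i], transitionMask(corners[i], tl, tr, bl, br)};
        }
        return Arrays.copyOf(layers, count);
    }
}
```

For example, `layersFor(1, 2, 3, 4)` yields four layers with masks 1, 2, 4 and 8, which is the worst case described above, while `layersFor(5, 5, 5, 5)` yields a single layer with mask 15. The masks of all layers in a cell never overlap and always OR together to 15.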

Rendering

Well, it wouldn’t be like me to not tack on an over-engineered rendering system on top of these two systems now, would it? =P

The old tile rendering system of RF was very simple. Each tile was drawn as four vertices forming two triangles using an index buffer. Very simple, straightforward stuff. Although the biggest bottleneck lay in the rendering of the tile transitions, the generation of the vertex data became a significant cost when zoomed out. Now, this wasn’t a significant issue in the actual game, but it was a huge issue in the tools we had as it made it impossible to zoom out enough to get a decent overview of the world for debugging and development. This led me back to something I coded a long time ago.

Once upon a time I made this thread here about drawing tiles efficiently using shaders, but the image links are dead now. Anyway, the idea is simply to upload the tile indices to a texture, then use a shader to figure out which tile a pixel belongs to, then which tile index that tile has, then to sample the actual tile. The advantage of this technique is that an entire tile map can be drawn with a single fullscreen quad instead of individual quads for every tile, which is a massive win when the tiles are small and many (possibly in the millions). By using modern OpenGL, this even allows you to have tile mipmaps and even bilinear filtering without any bleeding between tiles.

To handle the half-offset tile transitions, we need to store up to four tile indices per tile. Hence, a GL_RGBA16UI texture is perfect for the job, giving us up to 65536 possible tiles and fitting all four values in a single texture. To store the tileset itself, we just use a 2D texture array, where each layer contains a single tile. This ensures that no filtering can happen between tiles as they’re all isolated in their own layers. In the end, a rather short shader can be used to render all tiles at once:

```glsl
#version 330

uniform usampler2D tileIndices; //"world map" containing tile indices (GL_RGBA16UI)
uniform sampler2DArray tileSet; //tile set, one layer per tile (GL_SRGB8_ALPHA8)

in vec2 tileCoords; //tile coordinates, integers correspond to tile edges

out vec4 fragColor;

void main(){
    vec2 halfOffsetCoords = tileCoords + 0.5; //offset tiles by half a tile
    vec2 flooredTileCoords = floor(halfOffsetCoords);

    uvec4 tileData = texelFetch(tileIndices, ivec2(flooredTileCoords), 0);

    vec2 texCoords = halfOffsetCoords - flooredTileCoords;

    //texCoords is not continuous at tile edges and causes incorrect derivatives to be calculated
    //We can manually calculate correct ones from the original tile coordinates for mipmapping.
    vec2 dx = dFdx(tileCoords);
    vec2 dy = dFdy(tileCoords);

    vec4 result = vec4(0);
    for(int i = 0; i < 4; i++){
        uint tile = tileData[i];

        //use textureGrad() to supply manual derivatives
        vec4 color = textureGrad(tileSet, vec3(texCoords, tile), dx, dy);

        result += color * (1.0 - result.a); //front-to-back blending
    }

    fragColor = result;
}
```

That’s it! Of course, I just had to go one step further though…

Well, our world is too big to be stored entirely in VRAM too. We need to shuffle tile data in and out of VRAM, similarly to what we do in RAM by compressing the tile data. This leads to some more complexity. I ended up going completely nuts here and implemented software virtual/sparse texturing. ;D A sparse texture is a texture where not all parts of the texture actually have backing memory. For example, we can split up a 1024x1024 texture into 128x128 texel “pages”, then use an 8x8 “page mapping texture” to map each part of the texture to a different place in memory. As we have fine control over which 128x128 pages are actually available at any given time, we don’t need to have the whole thing in memory permanently. In our case, we have a page size of 128x128 tiles. So for a world that is 1024x1024 tiles big, we split it up into 8x8=64 pages. Let’s say we only have a budget of 8 simultaneously loaded pages, which means that our page texture is a 128x128 2D texture array with 8 layers. We then have an 8x8 texture which maps parts of the original texture to pages. This is probably a bit confusing so let me explain it in a different way:

- We want to sample tile (200, 300).

- We calculate the page offset by dividing by the page size: (200, 300)/128 = (1, 2) (rounded down).

- We go to the 8x8 page-mapping texture and sample point (1, 2) to get the layer index for that page. Let’s say the value sampled is 4, meaning the page we’re looking for is in layer 4.

- We calculate the local tile position inside the page, which is (200, 300) - (1 × 128, 2 × 128) = (72, 44).

- We sample the page texture array at (72, 44) on layer (4) to get the tile index we need!
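The steps above can be sketched on the CPU side like this. It is only an illustration of the address math: in the game this lookup happens in the shader, and the `PageLookup` class and the `NOT_RESIDENT` marker are hypothetical names, not part of the original code.

```java
final class PageLookup {
    static final int PAGE_SIZE = 128;     // tiles per page side
    static final int NOT_RESIDENT = -1;   // hypothetical marker for pages without backing memory

    /**
     * Resolves a world tile coordinate through the page-mapping table.
     * pageMap[pageY][pageX] holds the layer index of each page.
     * Returns {layer, localX, localY}, or null if the page is not loaded.
     */
    static int[] resolve(int[][] pageMap, int tileX, int tileY) {
        int pageX = tileX / PAGE_SIZE; // integer division rounds down for non-negative coords
        int pageY = tileY / PAGE_SIZE;

        int layer = pageMap[pageY][pageX];
        if (layer == NOT_RESIDENT) {
            return null;
        }

        int localX = tileX - pageX * PAGE_SIZE;
        int localY = tileY - pageY * PAGE_SIZE;
        return new int[] {layer, localX, localY};
    }
}
```

With an 8x8 `pageMap` where `pageMap[2][1]` is 4, `resolve(pageMap, 200, 300)` gives layer 4 and local position (72, 44), the same result as the walkthrough.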

The advantage here is that not all of the 64 pages need to be loaded at any given time. The camera frustum is pretty coherent in its motion and won’t move around much, so if the player is in the top left corner of the map, it’s fine if we only have the top four pages loaded, while the rest simply don’t have any data in them. Hence, we can render the world as if the entire world was loaded all the time, as long as we keep remapping pages to cover the parts of the texture that we actually use at any given time. Some of you may know about the game RAGE which used a much more advanced version of this system to stream in extreme amounts of heavily compressed texture data on demand from a 131 072 x 131 072 virtual texture (128k x 128k), storing parts of this texture (and its mipmaps) into 128x128 pages. In other words, they had a 1024x1024 page-mapping texture (also with mipmaps) which mapped the virtual texture location to individual 128x128 pages. It was fun doing something simple but at least comparable, although probably a bit overkill in this case. =P
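The remapping itself could be handled by something like the sketch below. Note that the eviction policy is an assumption: the post does not say how pages are chosen for replacement, so this simply evicts the least recently used page. `PageCache` and its methods are hypothetical names, and the actual decompress-and-upload step is only marked with a comment.

```java
import java.util.ArrayDeque;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;

final class PageCache {
    private final ArrayDeque<Integer> freeLayers = new ArrayDeque<>();
    // Access-ordered map: iteration starts at the least recently used page.
    private final LinkedHashMap<Long, Integer> resident = new LinkedHashMap<>(16, 0.75f, true);

    PageCache(int layerBudget) {
        if (layerBudget < 1) throw new IllegalArgumentException("Need at least one layer");
        for (int i = 0; i < layerBudget; i++) freeLayers.add(i);
    }

    private static long key(int pageX, int pageY) {
        return ((long) pageX << 32) | (pageY & 0xFFFFFFFFL);
    }

    /** Returns the layer holding the page, evicting the least recently used page if needed. */
    int request(int pageX, int pageY) {
        long key = key(pageX, pageY);
        Integer layer = resident.get(key); // get() also marks the page as recently used
        if (layer != null) return layer;

        if (freeLayers.isEmpty()) {
            Iterator<Map.Entry<Long, Integer>> it = resident.entrySet().iterator();
            Map.Entry<Long, Integer> eldest = it.next();
            freeLayers.add(eldest.getValue());
            it.remove();
            // A real implementation would also clear the evicted page's entry
            // in the page-mapping texture here.
        }

        int newLayer = freeLayers.poll();
        // Here the page's tile indices would be decompressed and uploaded to layer newLayer,
        // and the page-mapping texture updated to point at it.
        resident.put(key, newLayer);
        return newLayer;
    }

    boolean isResident(int pageX, int pageY) {
        return resident.containsKey(key(pageX, pageY));
    }
}
```

Each frame, the renderer would call `request(pageX, pageY)` for every page the camera can see. As long as the budget is at least as large as the number of visible pages, the visible part of the world always has backing data.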

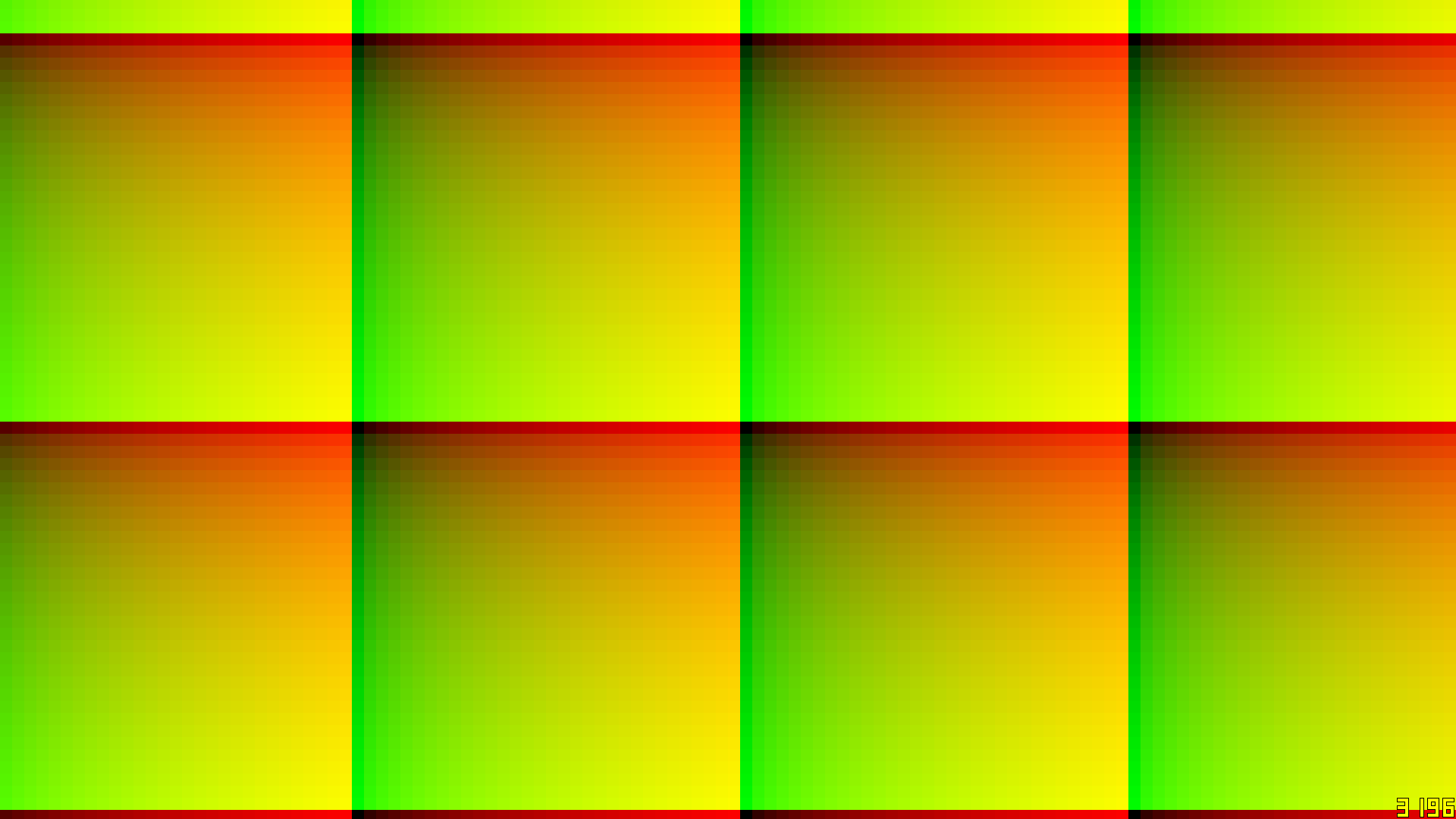

Images

- Page mapping (shows which layer in the page texture array the data for that part of the world is in)

- Page-local positions (local tile coordinates inside each page)

- Tile texture coordinates (the local texture coordinates inside each tile)

- Final result

Extra transition test image