Erm… sorry for posting in such an old thread.

But seeing this thread linked in the Wiki article that collects all these threads about lighting techniques, I think I’m the only one who actually implemented something like Terraria’s lighting… And I feel like I’m supposed to explain how I did it.

Implementing the exact same lighting as in Terraria is very easy:

    public void applyLightRec(int currentx, int currenty, float lastLight) {
        // Stop at the border of the map.
        if (!isValidPosition(currentx, currenty)) return;
        // Every tile takes away some light.
        float newLight = lastLight - map.getLightBlockingAmmountAt(currentx, currenty);
        // Never override an equal or brighter light.
        if (newLight <= map.getLight(currentx, currenty)) return;
        map.setLight(currentx, currenty, newLight);
        // Spread to the four neighbours.
        applyLightRec(currentx + 1, currenty, newLight);
        applyLightRec(currentx, currenty + 1, newLight);
        applyLightRec(currentx - 1, currenty, newLight);
        applyLightRec(currentx, currenty - 1, newLight);
    }

What this does is:

- Check whether the current tile position is valid (= inside bounds)

- Calculate a smaller light value according to the “blockyness” of the tile at the position it’s working on

- Stop lighting if the generated light value is not larger than the light currently at the position, so a brighter light is not overridden

- Apply the lighting, if the above is not the case

- The important part of the recursive method, the self-invocations: let the method call itself on its neighbour positions, with the “newLight” variable used as the “lastLight” argument

This is, btw, called a “recursive flood-fill” algorithm.

The only thing here to implement is the “map”, which is pretty easy:

map.setLight(int x, int y, float light);

and

map.getLight(int x, int y);

are only supposed to set/get a float value in/from some two-dimensional array, or whatever.

map.getLightBlockingAmmountAt(int x, int y);

simply returns some small value if there is no tile at (x, y), and if there is one, it returns the amount of “light-blockyness” of that tile. So for example a glass tile would only reduce the light by 0.1, whereas stone would reduce the light by 0.2, and no tile would reduce the light by 0.05.
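Here is a rough sketch of what such a map could look like. The `Tile` enum, the `LightMap` class name, `setTile` and the constructor are made up for illustration; only the three accessors and the example blocking values (0.05, 0.1, 0.2) come from the description above.

```java
/** Hypothetical tile types; the blocking values are the examples from the post. */
enum Tile {
    AIR(0.05f), GLASS(0.1f), STONE(0.2f);

    final float lightBlocking;

    Tile(float lightBlocking) {
        this.lightBlocking = lightBlocking;
    }
}

/** Minimal sketch of the "map": one tile and one light value per position. */
class LightMap {
    private final int width, height;
    private final Tile[][] tiles;
    private final float[][] light; // Java initialises this to 0.0f (dark)

    LightMap(int width, int height) {
        this.width = width;
        this.height = height;
        tiles = new Tile[width][height];
        light = new float[width][height];
        // Start with an empty map: "no tile" everywhere.
        for (int x = 0; x < width; x++) {
            for (int y = 0; y < height; y++) {
                tiles[x][y] = Tile.AIR;
            }
        }
    }

    boolean isValidPosition(int x, int y) {
        return x >= 0 && y >= 0 && x < width && y < height;
    }

    void setTile(int x, int y, Tile tile) {
        tiles[x][y] = tile;
    }

    void setLight(int x, int y, float value) {
        light[x][y] = value;
    }

    float getLight(int x, int y) {
        return light[x][y];
    }

    // Identifier spelled as in the post.
    float getLightBlockingAmmountAt(int x, int y) {
        return tiles[x][y].lightBlocking;
    }
}
```

Before lighting a frame you would reset all light values to 0 and then call the recursive method once per light source.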

Now it should be pretty easy to implement that. But the problem I had with this lighting is the following:

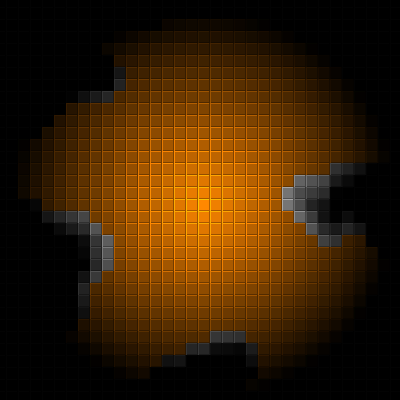

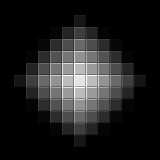

I hated that the light comes out in a “diamond shape”.

That pretty much sucked.
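To see where the diamond comes from: on an empty map every step to a neighbour costs the same amount of light, so the brightness of a tile only depends on the number of steps |dx| + |dy| to the light, not on the real distance. The small sketch below runs the basic flood fill on an empty grid to show that. The class `DiamondDemo`, its helper methods and the start value 0.22 are made up for this illustration; 0.05 is the “no tile” value from above.

```java
class DiamondDemo {
    static final float AIR_BLOCKING = 0.05f; // "no tile" value from the post

    /** The basic recursive flood fill, reduced to an empty grid. */
    static void applyLightRec(float[][] light, int x, int y, float lastLight) {
        if (x < 0 || y < 0 || x >= light.length || y >= light[0].length) return;
        float newLight = lastLight - AIR_BLOCKING;
        if (newLight <= light[x][y]) return;
        light[x][y] = newLight;
        applyLightRec(light, x + 1, y, newLight);
        applyLightRec(light, x, y + 1, newLight);
        applyLightRec(light, x - 1, y, newLight);
        applyLightRec(light, x, y - 1, newLight);
    }

    /** Lights an empty size x size grid from its center and returns the light values. */
    static float[][] lightEmptyGrid(int size, float startLight) {
        float[][] light = new float[size][size];
        applyLightRec(light, size / 2, size / 2, startLight);
        return light;
    }

    /** Draws lit tiles as '#' and dark tiles as '.', one row per line. */
    static String render(float[][] light) {
        StringBuilder sb = new StringBuilder();
        for (int y = 0; y < light[0].length; y++) {
            for (int x = 0; x < light.length; x++) {
                sb.append(light[x][y] > 0f ? '#' : '.');
            }
            sb.append('\n');
        }
        return sb.toString();
    }
}
```

`DiamondDemo.render(DiamondDemo.lightEmptyGrid(7, 0.22f))` gives this picture:

```
...#...
..###..
.#####.
#######
.#####.
..###..
...#...
```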

The solution was pretty hard to find.

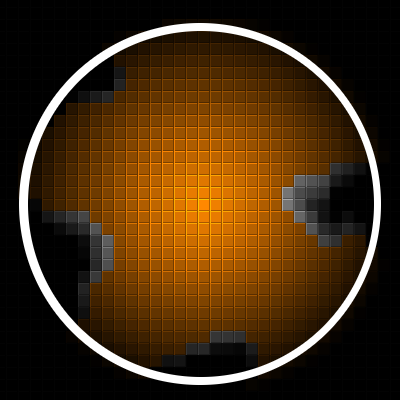

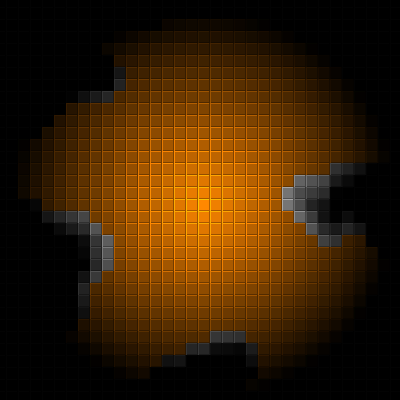

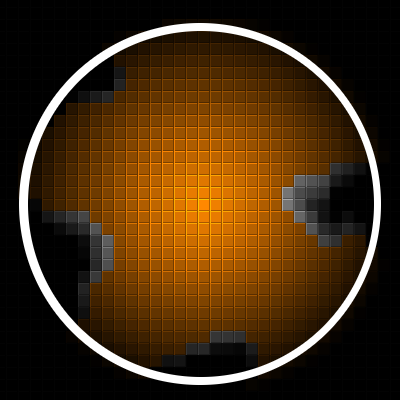

So now we want a very nice-looking round light.

I have not found the perfect solution, but I’m close enough. More at the end.

But now, here is what I did:

I have one very handy little helper class, called “RenderedLight”. Thinking about it, the better name would probably be “PreRenderedLight”, because all this class does is store a 2D array of floats with the brightness of the light at each position.

It’s just like the pre-rendered Textures from davedes for example.

That PreRenderedLight is now perfectly round, due to Pythagoras:

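    float distance = (float) Math.sqrt(deltax*deltax + deltay*deltay);

(deltax/deltay are the offsets from the center of the light. The value stored in light[x][y] falls off with this distance, so it only depends on how far the position is from the center.)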
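A PreRenderedLight along these lines could look like the sketch below. The post only says that it stores a 2D array of floats and has a `getLightAt` lookup relative to the center; the constructor parameters `radius` and `brightness`, the linear falloff and returning 0 outside the array are assumptions of mine.

```java
/** Sketch of a pre-rendered round light; the linear falloff is an assumption. */
class PreRenderedLight {
    private final int radius;
    private final float[][] light; // indexed [dx + radius][dy + radius]

    PreRenderedLight(int radius, float brightness) {
        if (radius < 1) throw new IllegalArgumentException("radius must be at least 1");
        this.radius = radius;
        int size = 2 * radius + 1;
        light = new float[size][size];
        for (int x = 0; x < size; x++) {
            for (int y = 0; y < size; y++) {
                int deltax = x - radius;
                int deltay = y - radius;
                float distance = (float) Math.sqrt(deltax * deltax + deltay * deltay);
                // Full brightness at the center, fading linearly to 0 at distance == radius.
                light[x][y] = Math.max(0f, brightness * (1f - distance / radius));
            }
        }
    }

    /** Light at an offset from the center; (0, 0) is the center, outside the array is dark. */
    float getLightAt(int dx, int dy) {
        if (Math.abs(dx) > radius || Math.abs(dy) > radius) return 0f;
        return light[dx + radius][dy + radius];
    }
}
```

Because the array is computed once per light type, the recursive method only does a cheap array lookup per tile instead of a square root.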

Now, what we do is we put these two algorithms together (the lookup from the PreRenderedLight and the recursive light method).

    public void applyLightRec(int currentx, int currenty, int lightx, int lighty, PreRenderedLight light, float encounteredWallness) {
        // Stop at the border of the map.
        if (!isValidPosition(currentx, currenty)) return;
        // Sum up the blocking of every tile on the way from the light to here.
        encounteredWallness += map.getLightBlockingAmmountAt(currentx, currenty);
        // Round light from the lookup, dimmed by the tiles passed so far.
        float newLight = light.getLightAt(lightx - currentx, lighty - currenty) - encounteredWallness;
        // Never override an equal or brighter light.
        if (newLight <= map.getLight(currentx, currenty)) return;
        map.setLight(currentx, currenty, newLight);
        // Spread to the four neighbours.
        applyLightRec(currentx + 1, currenty, lightx, lighty, light, encounteredWallness);
        applyLightRec(currentx, currenty + 1, lightx, lighty, light, encounteredWallness);
        applyLightRec(currentx - 1, currenty, lightx, lighty, light, encounteredWallness);
        applyLightRec(currentx, currenty - 1, lightx, lighty, light, encounteredWallness);
    }

So now we also give the method a PreRenderedLight to use, and an encounteredWallness, which is the sum of the “wallness” of all tiles passed on the way from the light to the current position.

The new light value is then calculated as the lookup from the PreRenderedLight minus the encounteredWallness, so that it looks like the light is being stopped by tiles.

We now also have a “lightx” and a “lighty” argument in this recursive method. We need these to look up the light values from the PreRenderedLight.

Note that PreRenderedLight.getLightAt(int x, int y) returns the exact light value at the center when it’s called with (0, 0), so the arguments are offsets relative to the center.
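To try the combined method without the rest of a game around it, here is a self-contained sketch on plain float arrays. The class `RoundLightDemo`, the `addLight` helper and the linear falloff in `getLightAt` (a stand-in for the PreRenderedLight lookup, computed on the fly) are assumptions for this example, not code from my project.

```java
/** Self-contained sketch of the combined algorithm on plain float grids. */
class RoundLightDemo {
    final int width, height;
    final float[][] blocking; // light-blocking amount of each tile
    final float[][] light;    // resulting light of each tile, 0 = dark

    RoundLightDemo(int width, int height, float airBlocking) {
        this.width = width;
        this.height = height;
        blocking = new float[width][height];
        light = new float[width][height];
        for (float[] column : blocking) java.util.Arrays.fill(column, airBlocking);
    }

    /** Stand-in for PreRenderedLight.getLightAt: linear radial falloff (an assumption). */
    static float getLightAt(int dx, int dy, float brightness, float radius) {
        float distance = (float) Math.sqrt(dx * dx + dy * dy);
        return Math.max(0f, brightness * (1f - distance / radius));
    }

    void applyLightRec(int currentx, int currenty, int lightx, int lighty,
                       float brightness, float radius, float encounteredWallness) {
        if (currentx < 0 || currenty < 0 || currentx >= width || currenty >= height) return;
        // Collect the blocking of every tile on the path taken so far.
        encounteredWallness += blocking[currentx][currenty];
        float newLight = getLightAt(lightx - currentx, lighty - currenty, brightness, radius)
                - encounteredWallness;
        // Keep the brightest value found for this tile.
        if (newLight <= light[currentx][currenty]) return;
        light[currentx][currenty] = newLight;
        applyLightRec(currentx + 1, currenty, lightx, lighty, brightness, radius, encounteredWallness);
        applyLightRec(currentx, currenty + 1, lightx, lighty, brightness, radius, encounteredWallness);
        applyLightRec(currentx - 1, currenty, lightx, lighty, brightness, radius, encounteredWallness);
        applyLightRec(currentx, currenty - 1, lightx, lighty, brightness, radius, encounteredWallness);
    }

    /** Places a light at (x, y): the flood fill starts at the light's own position. */
    void addLight(int x, int y, float brightness, float radius) {
        applyLightRec(x, y, x, y, brightness, radius, 0f);
    }
}
```

For example, after `new RoundLightDemo(13, 13, 0.05f)` and `addLight(6, 6, 1f, 6f)`, the tile two diagonal steps away at (8, 8) ends up brighter than the tile four straight steps away at (10, 6), although both are four flood-fill steps from the light. With the basic method they would be equally bright.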

That’s it. Mostly.

It still has some issues. The more the flood-fill algorithm reduces the light, the more it will look like a diamond.

But since I have no idea how to improve that (except doing raycasting, but that’s a whole other story), I will keep it like this.

Though I’m sure this is not the algorithm Starbound is using (I’m still sure they are raycasting), this is an improved version of the Terraria flood-fill lighting algorithm.

Thank you, that’s what I need. Now I only need to … yeah… cast rays
