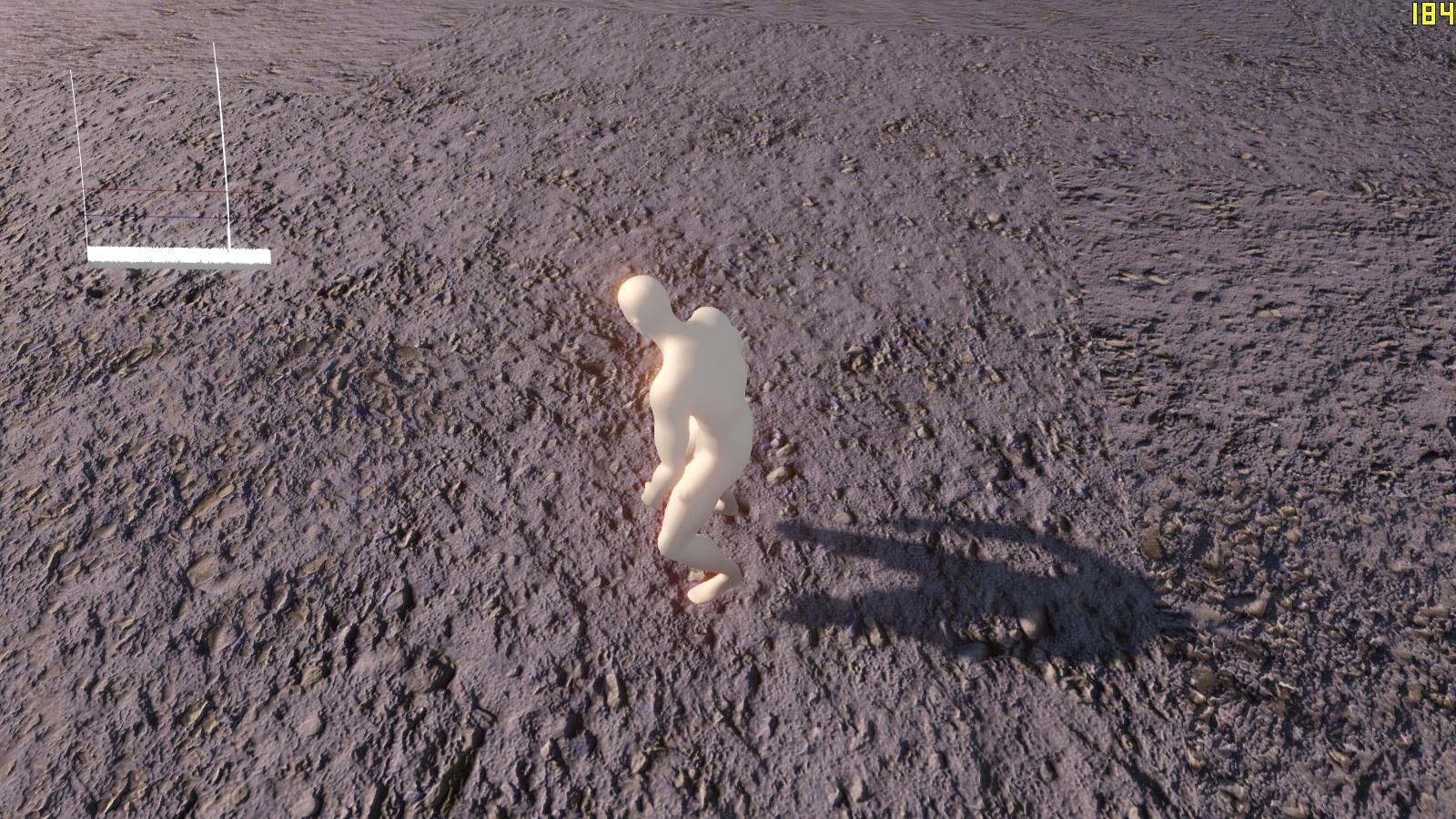

I’m having trouble finding a proper depth bias for my meshes (it ends up as the `comparison` value in the following snippet):

    float sampleShadowMap(vec2 texcoords, float comparison)
    {
        vec4 depth_val = texture2D(shadow_map, texcoords);
        return depth_val.z < comparison ? 0.0 : 1.0;
    }

Here’s how I calculate it now:

    // Perspective divide: every component divided by w (so w becomes 1.0).
    vec4 shadow_remove_persp = shadow_coord / shadow_coord.w;

    // Biased depth passed to sampleShadowMap() as `comparison`;
    // the offset scales with the model and shrinks as the shadow map grows.
    float depth_bias = shadow_remove_persp.z - (10.0 * model_scale / shadow_map_size);

This works great, sometimes. Is there really no way to calculate a good depth bias without just guessing?