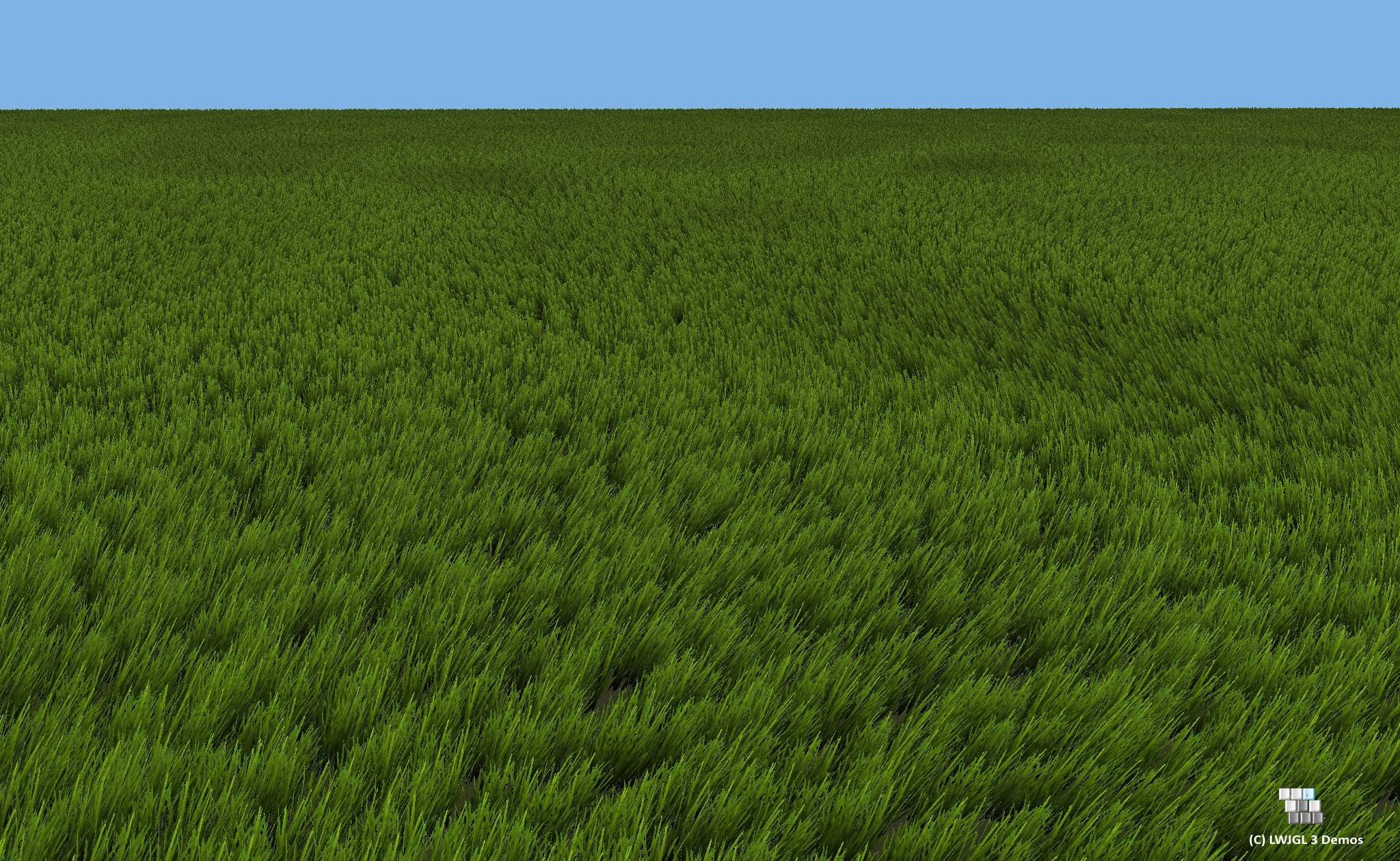

To render grass over terrain, let’s work toward a good solution by first writing down some basic assumptions/constraints:

- the whole meadow is composed of tens of thousands of grass “patches”

- each grass patch is made of the same geometry/model, just at different positions and with some rotation

- once each patch is put on the ground at a given position it stays there and does not move

- each patch should probably be able to “wave” with the wind (that is, its upper vertices can be displaced)

As usual, you have the following constraints to achieve good performance with OpenGL:

a) you want to minimize the number of draw calls (that is, the number of glDraw*() calls and, god forbid you are using them, the number of glBegin/glEnd calls)

b) you want to minimize the number of vertices actually drawn, which includes omitting vertices that are not visible to the user

c) you want to minimize the dynamic update of buffer data at each render loop cycle

Given the first three assumptions, the optimal solution would probably be to fill a single VBO with all grass patches pre-transformed into world space and then issue a single (indexed) draw call. This would optimize a) and c) to the fullest, but would leave b) suboptimal, since you would also render grass patches the camera cannot see.
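As a rough illustration of the pre-transform step, here is a minimal CPU-side sketch, assuming a position-only vertex format, 32-bit indices and one rotation angle about the up (Y) axis per patch. The names `Vec3`, `PatchPlacement`, `Mesh` and `bakeMeadow` are hypothetical; the resulting arrays would be uploaded once and never touched again.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical position-only vertex, for illustration.
struct Vec3 { float x, y, z; };

// Hypothetical placement of one patch: world position plus rotation about Y.
struct PatchPlacement { Vec3 position; float angle; };

struct Mesh {
    std::vector<Vec3> vertices;
    std::vector<std::uint32_t> indices;
};

// Bakes every placed copy of the shared patch into one world-space mesh,
// so the whole meadow can be uploaded once and drawn with one indexed call.
Mesh bakeMeadow(const Mesh& patch, const std::vector<PatchPlacement>& placements) {
    Mesh out;
    out.vertices.reserve(patch.vertices.size() * placements.size());
    out.indices.reserve(patch.indices.size() * placements.size());
    for (const PatchPlacement& p : placements) {
        // sin/cos evaluated once per patch, on the CPU.
        const float c = std::cos(p.angle);
        const float s = std::sin(p.angle);
        // Index of this copy's first vertex inside the combined vertex array.
        const auto base = static_cast<std::uint32_t>(out.vertices.size());
        for (const Vec3& v : patch.vertices) {
            // Rotate in the XZ plane (about Y), then translate to the patch position.
            out.vertices.push_back({c * v.x - s * v.z + p.position.x,
                                    v.y + p.position.y,
                                    s * v.x + c * v.z + p.position.z});
        }
        for (std::uint32_t i : patch.indices) {
            assert(i < patch.vertices.size() && "patch index out of range");
            out.indices.push_back(base + i);  // offset indices into this copy
        }
    }
    return out;
}
```

The two arrays could then go into buffers created with `glBufferData(..., GL_STATIC_DRAW)` and be rendered with a single `glDrawElements(GL_TRIANGLES, count, GL_UNSIGNED_INT, 0)`, which keeps both the draw-call count and the per-frame buffer updates at their minimum.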

To optimize for b) we could start clustering grass patches. That is, instead of storing all grass patches in a single buffer, we divide them spatially into multiple buffers. Then, during rendering, we can do simple frustum culling to decide whether a cluster is visible and draw each visible cluster with its own draw call.
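A minimal sketch of this clustering, assuming a uniform square grid on the XZ plane (one possible choice; a quadtree would be another) and six frustum planes whose normals point into the frustum. Extracting those planes from the view-projection matrix is not shown, and all names (`Cluster`, `buildClusters`, `isVisible`) are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <map>
#include <utility>
#include <vector>

struct Vec3 { float x, y, z; };
struct AABB { Vec3 min, max; };
// Plane dot(n, p) + d = 0, with n pointing into the frustum.
struct Plane { Vec3 n; float d; };

// Hypothetical cluster: the patches in one grid cell plus their bounding box.
struct Cluster {
    AABB bounds;
    std::vector<Vec3> patchPositions;  // would be baked into this cluster's own VBO
};

// Groups patch anchor positions into square cells of size cellSize;
// padding grows each box so it also encloses the patch geometry.
std::vector<Cluster> buildClusters(const std::vector<Vec3>& positions, float cellSize, float padding) {
    assert(cellSize > 0.0f && "cellSize must be positive");
    std::map<std::pair<int, int>, Cluster> cells;
    for (const Vec3& p : positions) {
        const std::pair<int, int> key{static_cast<int>(std::floor(p.x / cellSize)),
                                      static_cast<int>(std::floor(p.z / cellSize))};
        Cluster& c = cells[key];
        if (c.patchPositions.empty()) c.bounds = {p, p};  // first patch in this cell
        c.bounds.min = {std::fmin(c.bounds.min.x, p.x), std::fmin(c.bounds.min.y, p.y), std::fmin(c.bounds.min.z, p.z)};
        c.bounds.max = {std::fmax(c.bounds.max.x, p.x), std::fmax(c.bounds.max.y, p.y), std::fmax(c.bounds.max.z, p.z)};
        c.patchPositions.push_back(p);
    }
    std::vector<Cluster> out;
    for (auto& [key, c] : cells) {
        c.bounds.min = {c.bounds.min.x - padding, c.bounds.min.y - padding, c.bounds.min.z - padding};
        c.bounds.max = {c.bounds.max.x + padding, c.bounds.max.y + padding, c.bounds.max.z + padding};
        out.push_back(std::move(c));
    }
    return out;
}

// Conservative test: rejects a box only if it lies completely outside some plane,
// so it may keep a few invisible clusters but never drops a visible one.
bool isVisible(const AABB& box, const std::array<Plane, 6>& frustum) {
    for (const Plane& pl : frustum) {
        // "Positive vertex": the box corner farthest along the plane normal.
        const Vec3 pv{pl.n.x >= 0 ? box.max.x : box.min.x,
                      pl.n.y >= 0 ? box.max.y : box.min.y,
                      pl.n.z >= 0 ? box.max.z : box.min.z};
        if (pl.n.x * pv.x + pl.n.y * pv.y + pl.n.z * pv.z + pl.d < 0) return false;
    }
    return true;
}
```

In the render loop you would then issue one draw call per cluster for which `isVisible` returns true. The cell size trades draw calls (small cells) against wasted vertices in partially visible cells (large cells).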

Here you can also bring in a whole host of spatial acceleration data structures to make the culling as efficient as possible.

You might also consider OpenGL hardware instancing (google for “OpenGL instancing” and read the first 5-6 search results). It would give you a significantly smaller overall memory footprint, because you would only have to store the following information:

- the geometry information of a single grass patch (with instance divisor 0)

- the world-space position for each grass patch (with instance divisor 1)

- the 2x2 rotation matrix for each grass patch (with instance divisor 1)
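To make the memory argument concrete, here is a minimal sketch of a per-instance record, assuming all instance attributes are interleaved in one buffer and the vertex format is position-only (12 bytes). `GrassInstance` and the two byte-count helpers are hypothetical; in OpenGL the record's attributes would be configured with `glVertexAttribDivisor(location, 1)` and the whole meadow drawn with one `glDrawElementsInstanced` call.

```cpp
#include <cstddef>

// Hypothetical per-instance record (instance divisor 1). The shared patch
// geometry lives in a separate buffer with divisor 0 (advances per vertex).
struct GrassInstance {
    float position[3];  // world-space anchor of the patch
    float rotation[4];  // 2x2 rotation matrix, column-major
};

// Stride and offsets you would pass to glVertexAttribPointer for the instance buffer.
constexpr std::size_t kInstanceStride = sizeof(GrassInstance);
constexpr std::size_t kPositionOffset = offsetof(GrassInstance, position);
constexpr std::size_t kRotationOffset = offsetof(GrassInstance, rotation);
static_assert(kInstanceStride == 7 * sizeof(float), "no padding expected between floats");

// Vertex-buffer bytes with instancing: one patch mesh plus one record per patch.
constexpr std::size_t instancedBytes(std::size_t verticesPerPatch, std::size_t patchCount) {
    return verticesPerPatch * 3 * sizeof(float) + patchCount * sizeof(GrassInstance);
}

// Vertex-buffer bytes when every patch is pre-transformed into world space.
constexpr std::size_t pretransformedBytes(std::size_t verticesPerPatch, std::size_t patchCount) {
    return verticesPerPatch * patchCount * 3 * sizeof(float);
}
```

For example, with 12 position-only vertices per patch, each additional patch costs 28 bytes instead of 144; with richer vertex formats (normals, texture coordinates) the gap grows further. Index buffers are ignored in both counts.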

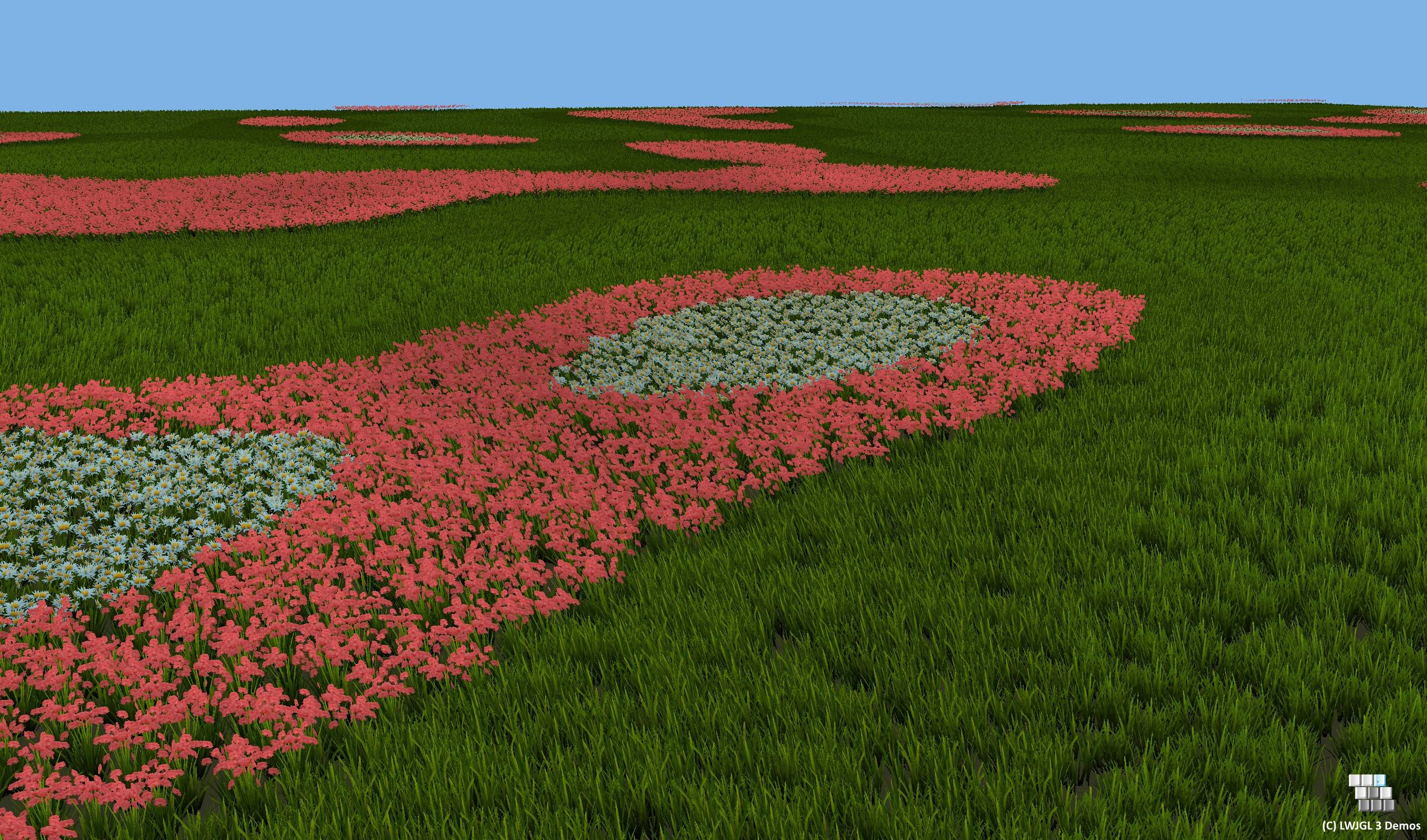

Why do we need a 2x2 rotation matrix now? Because each grass patch should be rotated randomly to reduce the appearance of uniformity in the grass on the terrain (much like what is presented in this GPU Gems article).

In the non-instanced variant described above, we do all positioning and rotation by pre-transforming all vertices into world-space and then submitting them to the buffer object.

When using instancing, we cannot do that anymore, because the shared patch geometry stays in model space. So we need a way to represent the rotation per instance. We could get away with a single rotation angle, but that would require evaluating sin/cos in the shader. This, however, would hurt performance more than storing three additional floats per instance for the 2x2 matrix, for which the sin/cos calculation has already been done once on the CPU.
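A minimal sketch of that split, assuming the rotation is about the patch's up axis and the matrix is stored column-major, as GLSL's `mat2` constructor expects. Here `applyRotation` is a CPU mirror of what a hypothetical vertex shader would do with `rot * pos.xz`; all names are illustrative.

```cpp
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Column-major 2x2 matrix: {m[0], m[1]} is column 0, {m[2], m[3]} is column 1.
struct Mat2 { float m[4]; };

// sin/cos are evaluated here, once per patch, instead of per vertex in the shader.
Mat2 rotation2x2(float angle) {
    const float c = std::cos(angle);
    const float s = std::sin(angle);
    return {{c, s, -s, c}};
}

// CPU mirror of the per-vertex shader work: rotate a model-space vertex's
// XZ coordinates, which is just a 2x2 matrix-vector multiply.
void applyRotation(const Mat2& r, float& x, float& z) {
    const float nx = r.m[0] * x + r.m[2] * z;
    const float nz = r.m[1] * x + r.m[3] * z;
    x = nx;
    z = nz;
}

// One random rotation per patch; a fixed seed keeps the meadow reproducible.
std::vector<Mat2> randomRotations(std::size_t count, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> angle(0.0f, 6.28318531f);
    std::vector<Mat2> out;
    out.reserve(count);
    for (std::size_t i = 0; i < count; ++i) out.push_back(rotation2x2(angle(rng)));
    return out;
}
```

The matrices produced by `randomRotations` would be written into the rotation slot of each per-instance record once, at load time, so the per-frame cost in the shader is four multiplies and two adds per vertex.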

I’d start by implementing the “put all grass patches, pre-transformed, into a single VBO and render them with a single draw call” method. I implemented both approaches some eight years ago, and with both, blending/overdraw ended up being the main bottleneck, whether I rendered the grass patches with alpha blending or with alpha discard.

If you are more into instancing, read this interesting article first; its “Extensions - ARB_instanced_arrays” section describes the method I suggested here.