Font support seemed like it would be tricky to implement in such a small space. However, after a discussion on IRC, someone pointed out that it's pretty easy with a really small texture and without AWT. So here is my attempt.

1) Drawing the font

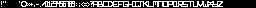

The smallest power-of-two texture that I thought would fit here is 256x8, so I drew a basic monochrome font in Paint. All letters are 5x6 pixels, and all the symbols that come before the letters are 3x6 pixels.

The font characters are kept in the same order as the values of Java chars, so that it's easier to map them directly using char values with minimal code. These values were obtained using:

    for (int i = 0; i < 100; i++) System.out.println("I: " + i + " : " + (char)i);

This also lets you see what range you need and what to put in the bitmap. The font is also kept on a single line to further simplify the texture coordinate calculation (you only need to worry about the x value, not y).
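As a small illustration of that mapping, here is a sketch that assumes the bitmap starts at '!' (char value 33) and ends at 'Z' (char value 90), which is the range the drawing code later in this post checks for. The class and method names are made up for the example.

```java
// Sketch only: GlyphIndex and its constants are illustrative names,
// not part of the original code.
final class GlyphIndex {
    static final char FIRST = '!'; // char value 33, assumed first glyph in the bitmap
    static final char LAST = 'Z';  // char value 90, assumed last glyph in the bitmap

    /** Returns the glyph's position in the bitmap, or -1 if the char has no glyph. */
    static int indexOf(char ch) {
        if (ch < FIRST || ch > LAST) return -1; // e.g. space or lowercase letters
        return ch - FIRST;                      // glyphs are stored in char-value order
    }

    public static void main(String[] args) {
        for (char ch : "HI!".toCharArray()) {
            System.out.println(ch + " -> " + indexOf(ch));
        }
    }
}
```

Because the glyphs follow char order, the lookup is a single subtraction and no table is needed.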

After condensing the above by removing excess spaces, I ended up with a 256x8 image:

The font is white so that it can easily be coloured in using OpenGL.

2) Storage

The PNG is pretty small, but I didn’t want the overhead of storing and decoding a PNG. Since the image only uses black and white pixels, each pixel can be represented by a single bit. At 256x8 that is 2048 bits, so the whole image needs just 256 bytes, which can then be converted to a String and stored in the code. Zip/LZMA will then do its magic on this highly compressible String when packaging.
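To make the arithmetic concrete, here is a minimal sketch of packing one pixel per bit. The class name and the most-significant-bit-first order are arbitrary choices for the example and do not match the byte layout used by the actual code in this post.

```java
// Sketch only: BitPacking is an illustrative name, and the bit order
// (most significant bit first) is an assumption made for this example.
final class BitPacking {
    static final int WIDTH = 256, HEIGHT = 8;

    /** Packs one boolean per pixel into one bit per pixel. */
    static byte[] pack(boolean[] pixels) {
        byte[] packed = new byte[(pixels.length + 7) / 8]; // round up to whole bytes
        for (int i = 0; i < pixels.length; i++) {
            if (pixels[i]) packed[i / 8] |= 0x80 >> (i % 8);
        }
        return packed;
    }

    /** Reads pixel i back out of the packed array. */
    static boolean get(byte[] packed, int i) {
        return (packed[i / 8] & (0x80 >> (i % 8))) != 0;
    }

    public static void main(String[] args) {
        boolean[] pixels = new boolean[WIDTH * HEIGHT]; // 2048 pixels
        pixels[0] = true;
        pixels[9] = true;
        byte[] packed = pack(pixels);
        System.out.println(packed.length + " bytes"); // 2048 bits / 8 = 256 bytes
    }
}
```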

3) Implementation

MatthiasM’s PNGDecoder is used to load the image into a ByteBuffer, which is then converted into a BitSet, then into a byte[] and finally into a String to store it in code (I had some trouble with the last step, so I just used the Base64 encoder).

The image-to-String code below outputs the String that you can store:

    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.nio.ByteBuffer;
    import java.util.BitSet;

    import javax.xml.bind.DatatypeConverter;

    // MatthiasM's PNGDecoder (part of TWL)
    import de.matthiasmann.twl.utils.PNGDecoder;

    public class ImageToString {

        private void loadTexture(String file) throws IOException {
            InputStream in = new FileInputStream(file);
            try {
                // use PNGDecoder to load texture into a ByteBuffer
                PNGDecoder decoder = new PNGDecoder(in);
                ByteBuffer byteBuffer = ByteBuffer.allocateDirect(4 * decoder.getWidth() * decoder.getHeight());
                decoder.decodeFlipped(byteBuffer, decoder.getWidth() * 4, PNGDecoder.Format.RGBA);
                byteBuffer.flip();

                // convert ByteBuffer into BitSet, one bit per pixel
                // (the final row of 256 pixels is skipped, so 256*7 pixels are read)
                BitSet bits = new BitSet(256 * 7);
                int count = 0;
                for (int i = 0; i < byteBuffer.capacity() - 256 * 4; i += 4) {
                    // only the red component is checked: -1 (0xFF) means a white pixel
                    byte b = byteBuffer.get(i);
                    if (b == -1) bits.set(count);
                    count++;
                }

                // convert BitSet into byte[] (bit 0 ends up in the last byte)
                byte[] bytes = new byte[bits.length() / 8 + 1];
                for (int i = 0; i < bits.length(); i++) {
                    if (bits.get(i)) {
                        bytes[bytes.length - i / 8 - 1] |= 1 << (i % 8);
                    }
                }

                // convert byte[] into base64 and output
                String s = DatatypeConverter.printBase64Binary(bytes);
                System.out.println(s);
            } finally {
                in.close();
            }
        }

        public ImageToString() {
            // load texture and output it to base64
            try {
                loadTexture("res/font.png");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            new ImageToString();
        }
    }

Which gives the following output:

fjGMY//9///+HP/x//7/v/wAB//7+uAAA8gAKgAAAABiP4xiQMUxjGoWkJEIQ5CmMIgFsJsjsAAGaAAqAAAAADIkjGJAxTGMahOQkQhDEKY5dJfz//q4AKwgAAIAAAAAG/+u4k/9P4xqFpCf698Q/+oCBLLJCqxx1CAAAgAAAAAOEauiSDUhjGIclJGIQ5DGIXSUsskKpASuYAAAAAAAAH/x+T5P5+H8Y/if8fh+//4oiIfz+f/hBAPAAAIAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA==

You can embed the above String, and at runtime you just need to use the Base64 decoder to get the bytes, put the bytes into a BitSet, and then create a ByteBuffer from it that can be loaded as a 256x8 texture.
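For newer JDKs, where javax.xml.bind is no longer bundled, the same round trip could be sketched with java.util.Base64 together with BitSet.toByteArray() and BitSet.valueOf() (Java 8 or later). This is an alternative rather than the code used in this post: the class and method names are invented, and the byte order differs from the String above, so the encoder and decoder must come from the same pair.

```java
import java.nio.ByteBuffer;
import java.util.Base64;
import java.util.BitSet;

// Sketch only: FontCodec is an illustrative name. Its output is NOT
// compatible with the Base64 String shown in this post.
final class FontCodec {

    /** Encodes one bit per pixel as a Base64 String. */
    static String encode(BitSet bits) {
        return Base64.getEncoder().encodeToString(bits.toByteArray());
    }

    /** Decodes the String and expands each bit to one RGBA pixel (all 0xFF or all 0). */
    static ByteBuffer decodeToRgba(String data, int width, int height) {
        BitSet bits = BitSet.valueOf(Base64.getDecoder().decode(data));
        ByteBuffer rgba = ByteBuffer.allocateDirect(4 * width * height);
        for (int i = 0; i < width * height; i++) {
            byte v = (byte) (bits.get(i) ? 0xFF : 0);
            rgba.put(v).put(v).put(v).put(v); // R, G, B, A
        }
        rgba.flip(); // ready to be handed to glTexImage2D
        return rgba;
    }
}
```

Setting alpha to the same value as the colour components is what lets blending treat the black pixels as transparent.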

Once you have the OpenGL texture loaded, it's a simple matter of parsing and drawing the text. Do remember to use GL_NEAREST, since the font is so small and we don’t want any filtering or blurring. This gives us a nice retro-looking, resizable font which can easily be coloured.
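The per-character texture lookup can be tried on its own, without OpenGL. The sketch below mirrors the arithmetic of the drawString method in the full listing further down (3-pixel-wide symbols, 5-pixel-wide letters, and the same offset correction); the class and method names are made up for the example.

```java
// Sketch only: GlyphCoords is an illustrative name. The widths and the
// offset correction are copied from the drawString code in this post.
final class GlyphCoords {
    static final int TEXTURE_WIDTH = 256;

    /** Returns {startPixel, widthPixels} for a glyph index (char value minus 33). */
    static int[] pixelSpan(int c) {
        int w = 3;        // symbols are 3 pixels wide
        int subtract = 0;
        if (c > 31) {     // letters are 5 pixels wide
            w = 5;
            subtract = 31 * 2 + w; // corrects for the narrower symbols before the letters
        }
        return new int[] { w * c - subtract, w };
    }

    /** Returns {texStart, texEnd} as horizontal texture coordinates in the range 0..1. */
    static float[] texCoords(char ch) {
        int[] span = pixelSpan(ch - 33);
        float start = span[0] / (float) TEXTURE_WIDTH;
        float end = (span[0] + span[1]) / (float) TEXTURE_WIDTH;
        return new float[] { start, end };
    }
}
```

For example, 'H' has glyph index 39, so it starts at pixel 5 * 39 - 67 = 128, which is texture coordinate 0.5.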

I’m pretty sure there is room for improvement and hopefully someone will point out how it can be improved (likely with the byte[] to String step).

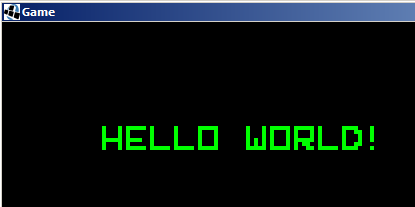

Lastly, here is the full FontTest code, which should display the text “HELLO WORLD!” (the font has no lowercase letters). Hopefully someone will find it useful.

    import java.nio.ByteBuffer;
    import java.util.BitSet;

    import javax.xml.bind.DatatypeConverter;

    import org.lwjgl.BufferUtils;
    import org.lwjgl.LWJGLException;
    import org.lwjgl.opengl.Display;
    import org.lwjgl.opengl.DisplayMode;

    import static org.lwjgl.opengl.GL11.*;

    public class FontTest {

        String textureData = "fjGMY//9///+HP/x//7/v/wAB//7+uAAA8gAKgAAAABiP4xiQMUxjGoWkJEIQ5CmMIgFsJsjsAAGaAAqAAAAADIkjGJAxTGMahOQkQhDEKY5dJfz//q4AKwgAAIAAAAAG/+u4k/9P4xqFpCf698Q/+oCBLLJCqxx1CAAAgAAAAAOEauiSDUhjGIclJGIQ5DGIXSUsskKpASuYAAAAAAAAH/x+T5P5+H8Y/if8fh+//4oiIfz+f/hBAPAAAIAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA==";

        int textureID;

        public void start() {
            try {
                Display.setDisplayMode(new DisplayMode(800, 600));
                Display.create();
            } catch (LWJGLException e) {
                e.printStackTrace();
                System.exit(0);
            }

            // init OpenGL here
            glViewport(0, 0, 800, 600);
            glMatrixMode(GL_PROJECTION);
            glLoadIdentity();
            glOrtho(0, 800, 600, 0, 1, -1);
            glMatrixMode(GL_MODELVIEW);
            glLoadIdentity();

            glEnable(GL_TEXTURE_2D);

            // enable blending so the black parts of font are transparent
            glEnable(GL_BLEND);
            glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

            // load our string into a texture
            textureID = loadTexture();

            while (!Display.isCloseRequested()) {
                glClear(GL_COLOR_BUFFER_BIT);

                glColor3f(0, 1f, 0); // set green font color
                drawString("HELLO WORLD!", 100, 100, 4);

                Display.update(); // update, will display what you rendered above
                Display.sync(60); // cap to 60fps
            }

            Display.destroy();
        }

        public void drawString(String text, float x, float y, float fontSize) {
            glBindTexture(GL_TEXTURE_2D, textureID); // bind our texture

            float offset = 0;

            for (int i = 0; i < text.length(); i++) {
                // the first char in the bitmap is '!' (char value 33)
                int c = text.charAt(i) - 33;

                // if not within supported char range skip and add space
                if (c < 0 || c > 57) {
                    offset += 5 * fontSize + fontSize;
                    continue;
                }

                // symbols are 3 pixels wide, letters are 5 pixels wide
                int w = 3;
                int subtract = 0;
                if (c > 31) {
                    w = 5;
                    subtract = 31 * 2 + w;
                }

                float width = w * fontSize;
                float height = 8 * fontSize;

                // horizontal texture coordinates of this char
                float texStart = 1f/256*(w*c-subtract);
                float texEnd = texStart + 1f/256*w;

                glBegin(GL_QUADS);
                glTexCoord2f(texStart, 1);
                glVertex3f(x + offset, y, 0);
                glTexCoord2f(texEnd, 1);
                glVertex3f(x + width + offset, y, 0);
                glTexCoord2f(texEnd, 0);
                glVertex3f(x + width + offset, y + height, 0);
                glTexCoord2f(texStart, 0);
                glVertex3f(x + offset, y + height, 0);
                glEnd();

                // advance by the char width plus one pixel of spacing
                offset += w * fontSize + fontSize;
            }
        }

        private int loadTexture() {
            // convert base64 string to byte[]
            byte[] bytes = DatatypeConverter.parseBase64Binary(textureData);

            BitSet bits = new BitSet();

            // convert byte[] to BitSet (bit 0 is stored in the last byte)
            for (int i = 0; i < bytes.length * 8; i++) {
                if ((bytes[bytes.length - i / 8 - 1]&(1 << (i % 8))) != 0) {
                    bits.set(i);
                }
            }

            // named pixels so it does not shadow the textureData field
            ByteBuffer pixels = BufferUtils.createByteBuffer(4 * 256 * 8);

            // fill texture from bits
            for (int i = 0; i < 256*8; i++) {
                for (int j = 0; j < 4; j++) { // 4 bytes (one for each component of RGBA)
                    if (bits.get(i)) pixels.put((byte)-1);
                    else pixels.put((byte)0);
                }
            }

            pixels.flip();

            int textureID = glGenTextures(); // generate new texture id
            glBindTexture(GL_TEXTURE_2D, textureID); // bind our texture

            // make sure you use GL_NEAREST as we don't want the texture stretched or blurred
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

            glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, 256, 8, 0, GL_RGBA, GL_UNSIGNED_BYTE, pixels);

            return textureID;
        }

        public static void main(String[] argv) {
            FontTest fontTest = new FontTest();
            fontTest.start();
        }
    }

And the output/result should look like: