Hi!

I am working on a project where I render a very, very large terrain: 40 000 square kilometres, or about 15 400 square miles. That’s the size of Southwestern Ontario and then a bit, or about 1/6 the size of the United Kingdom.

Since this is so massively huge, I obviously couldn’t just render the whole thing and stick it in one display list. That would take absolutely forever, and I would have to redo it every time the terrain changed. That may happen a lot, so that is out of the question.

I’ve decided to take the same approach as Minecraft and many other games, by dividing up the terrain into manageable chunks. I then render one of these every frame or so, and store that in a display list. That works really, really well. Up to a point. I was trying to make it so that I could see the entire terrain at once, in a sort of overview mode. I sat and watched the chunks draw and load, and it was fantastic. I still have some work to do with the lighting and the normals, but it looks good.
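To make the "one chunk every frame or so" idea concrete, here is a rough Python sketch of the scheduling part only, not my actual code. The `ChunkBuilder` class, `build_chunk`, and the `builds_per_frame` parameter are names made up for illustration, and the real display list compilation is replaced by a placeholder string.

```python
from collections import deque


class ChunkBuilder:
    """Builds a fixed number of pending chunks per frame (hypothetical helper)."""

    def __init__(self, chunks_x, chunks_z, builds_per_frame=1):
        # Queue every chunk coordinate once, row by row.
        self.pending = deque(
            (cx, cz) for cz in range(chunks_z) for cx in range(chunks_x)
        )
        self.built = {}  # (cx, cz) -> handle of the compiled chunk
        self.builds_per_frame = builds_per_frame

    def build_chunk(self, cx, cz):
        # Placeholder: a real renderer would compile a display list here
        # and return its id. This string only stands in for that handle.
        return f"chunk-{cx}-{cz}"

    def update(self):
        """Call once per frame. Returns how many chunks are still pending."""
        for _ in range(min(self.builds_per_frame, len(self.pending))):
            cx, cz = self.pending.popleft()
            self.built[(cx, cz)] = self.build_chunk(cx, cz)
        return len(self.pending)


if __name__ == "__main__":
    builder = ChunkBuilder(chunks_x=4, chunks_z=4)
    frames = 0
    while builder.pending:
        builder.update()
        frames += 1
    print(frames, "frames to build", len(builder.built), "chunks")
```

The point of the budget is that the per-frame build cost stays bounded no matter how many chunks are queued. It does nothing to limit how many finished chunks get drawn each frame.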

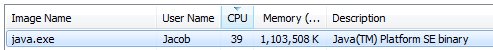

And then this happened.

That was just the beginning. It spiked to 99% CPU usage, and it actually made my sound stop working and my Wacom tablet driver freeze and stop responding. I decided that that wouldn’t make a very good point in a feature list.

And after some fiddling, that brings me here, asking how any of you have managed to wrangle large terrains. I know that I could just do some trig to find out which chunks are visible at the current view level and only draw those, but I want to see if there is anything else I could do. I want the rendering and graphics to be extremely fast, because the logic is most likely going to be very CPU intensive.
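For reference, the trig I have in mind looks roughly like the Python sketch below. It assumes a camera looking straight down at flat ground, a square grid of chunks starting at the origin, and a symmetric perspective projection. The function name and all of its parameters are made up for illustration, and a tilted camera would need a proper frustum test instead.

```python
import math


def visible_chunk_range(cam_x, cam_z, height, fov_y_deg, aspect,
                        chunk_size, chunks_x, chunks_z):
    """Return (x0, x1, z0, z1), the inclusive range of chunk indices that a
    straight-down camera can see. Assumes flat ground at height 0."""
    # Half of the visible ground extent along each axis.
    half_z = height * math.tan(math.radians(fov_y_deg) / 2.0)
    half_x = half_z * aspect

    # Convert world-space bounds to chunk indices and clamp to the grid.
    x0 = max(0, math.floor((cam_x - half_x) / chunk_size))
    x1 = min(chunks_x - 1, math.floor((cam_x + half_x) / chunk_size))
    z0 = max(0, math.floor((cam_z - half_z) / chunk_size))
    z1 = min(chunks_z - 1, math.floor((cam_z + half_z) / chunk_size))
    return x0, x1, z0, z1


if __name__ == "__main__":
    x0, x1, z0, z1 = visible_chunk_range(
        cam_x=500.0, cam_z=500.0, height=120.0, fov_y_deg=90.0,
        aspect=1.0, chunk_size=50.0, chunks_x=20, chunks_z=20)
    count = (x1 - x0 + 1) * (z1 - z0 + 1)
    print("draw chunks x", x0, "to", x1, "and z", z0, "to", z1, "=", count)
```

This only cuts the work when I am zoomed in. In the overview mode the range covers the whole grid, which is exactly the case that blew up.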

So if you have any ideas or solutions for me, just let me know!

-Jacob