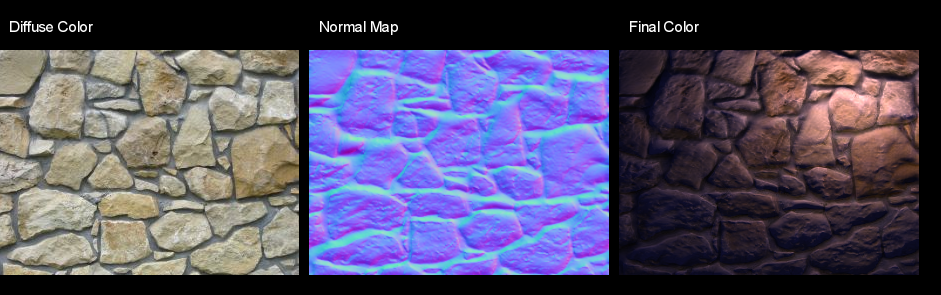

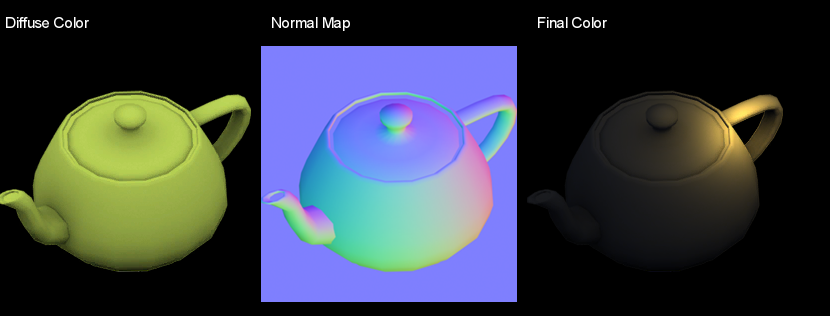

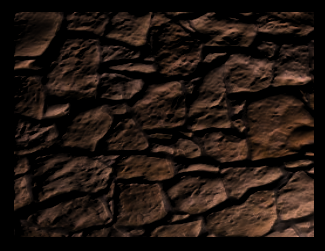

Result:

An image doesn’t really do it justice, so try out the LibGDX demo here:

http://www.mediafire.com/?ak4a5oso4cctmw8 (5.4 MB fat jar – simply double-click)

The illumination model looks complicated at first glance, but the mathematics is actually relatively simple. For more reading, see here.

The entire source of the LibGDX demo can be found here (excuse the messy code). The images used:

rock.png

rock_n.png

teapot.png

teapot_n.png

The application is very basic: it renders a quad with two active texture units (the diffuse texture and the normal map), which are exposed as sampler2D uniforms in the fragment shader. All the lighting parameters are uniforms to make debugging simple, although for performance you may not want to do this in practice.

The basic equation:

N = normalize(NormalColor.rgb * 2.0 - 1.0)

L = normalize(LightDir.xyz)

Diffuse = LightColor * max(dot(N, L), 0.0)

Ambient = AmbientColor * AmbientIntensity

Attenuation = 1.0 / (ConstantAtt + (LinearAtt * Distance) + (QuadraticAtt * Distance * Distance))

Intensity = Ambient + Diffuse * Attenuation

FinalColor = DiffuseColor.rgb * Intensity.rgb
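To make the arithmetic concrete, here is a minimal CPU-side sketch of the equation above in plain Java. It is not part of the demo source; the class name `LightingSketch` and all method names are hypothetical, and colours are assumed to be RGB float arrays in the [0, 1] range.

```java
/** Illustrative CPU reference for the lighting equation (hypothetical helper, not from the demo). */
final class LightingSketch {

    static float dot(float[] a, float[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    /** Returns v scaled to unit length. */
    static float[] normalize(float[] v) {
        float len = (float) Math.sqrt(dot(v, v));
        return new float[] { v[0] / len, v[1] / len, v[2] / len };
    }

    /** Attenuation = 1.0 / (ConstantAtt + LinearAtt * Distance + QuadraticAtt * Distance^2) */
    static float attenuation(float constantAtt, float linearAtt, float quadraticAtt, float distance) {
        return 1.0f / (constantAtt + linearAtt * distance + quadraticAtt * distance * distance);
    }

    /** Intensity = Ambient + Diffuse * Attenuation, evaluated per RGB channel. */
    static float[] intensity(float[] normalColor, float[] lightDir, float[] lightColor,
                             float[] ambientColor, float ambientIntensity, float att) {
        // N = normalize(NormalColor.rgb * 2.0 - 1.0)
        float[] n = normalize(new float[] {
            normalColor[0] * 2f - 1f, normalColor[1] * 2f - 1f, normalColor[2] * 2f - 1f });
        // L = normalize(LightDir.xyz)
        float[] l = normalize(lightDir);
        // Lambert term: max(dot(N, L), 0.0)
        float lambert = Math.max(dot(n, l), 0f);
        float[] out = new float[3];
        for (int i = 0; i < 3; i++) {
            out[i] = ambientColor[i] * ambientIntensity + lightColor[i] * lambert * att;
        }
        return out;
    }

    /** FinalColor = DiffuseColor.rgb * Intensity.rgb */
    static float[] finalColor(float[] diffuseColor, float[] intensity) {
        return new float[] {
            diffuseColor[0] * intensity[0],
            diffuseColor[1] * intensity[1],
            diffuseColor[2] * intensity[2] };
    }
}
```

With a flat normal texel of (0.5, 0.5, 1.0) and the light directly in front of the surface, the Lambert term is 1 and the result is simply ambient plus the attenuated light colour. With the light behind the surface, only the ambient term remains.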

The GLSL fragment shader follows. It could probably be cleaned up a little, and the booleans and yInvert are of course only there for test purposes.

    #ifdef GL_ES
    precision mediump float;
    #endif

    varying vec4 v_color;
    varying vec2 v_texCoords;

    uniform sampler2D u_texture;
    uniform sampler2D u_normals;
    uniform vec3 light;
    uniform vec3 ambientColor;
    uniform float ambientIntensity;
    uniform vec2 resolution;
    uniform vec3 lightColor;
    uniform bool useNormals;
    uniform bool useShadow;
    uniform vec3 attenuation;
    uniform float strength;
    uniform bool yInvert;

    void main() {
        //sample color & normals from our textures
        vec4 color = texture2D(u_texture, v_texCoords.st);
        vec3 nColor = texture2D(u_normals, v_texCoords.st).rgb;

        //some bump map programs will need the Y value flipped..
        nColor.g = yInvert ? 1.0 - nColor.g : nColor.g;

        //this is for debugging purposes, allowing us to lower the intensity of our bump map
        vec3 nBase = vec3(0.5, 0.5, 1.0);
        nColor = mix(nBase, nColor, strength);

        //normals need to be converted to [-1.0, 1.0] range and normalized
        vec3 normal = normalize(nColor * 2.0 - 1.0);

        //here we do a simple distance calculation
        vec3 deltaPos = vec3( (light.xy - gl_FragCoord.xy) / resolution.xy, light.z );
        vec3 lightDir = normalize(deltaPos);
        float lambert = useNormals ? clamp(dot(normal, lightDir), 0.0, 1.0) : 1.0;

        //now let's get a nice little falloff
        float d = sqrt(dot(deltaPos, deltaPos));
        float att = useShadow ? 1.0 / ( attenuation.x + (attenuation.y*d) + (attenuation.z*d*d) ) : 1.0;

        vec3 result = (ambientColor * ambientIntensity) + (lightColor.rgb * lambert) * att;
        result *= color.rgb;

        gl_FragColor = v_color * vec4(result, color.a);
    }
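The normal-decoding steps in the shader (the optional Y flip, the `strength` blend towards the flat normal, and the remap to [-1.0, 1.0]) can be checked on the CPU with a sketch like the one below. It is an illustration only: the class and method names are hypothetical, and the texel is assumed to be 8-bit RGB.

```java
/** Illustrative CPU version of the shader's normal decoding (hypothetical helper, not from the demo). */
final class NormalDecodeSketch {

    /**
     * Decodes an 8-bit RGB normal-map texel into a unit-length normal.
     * yInvert flips the green channel; strength blends from the flat
     * normal (0.5, 0.5, 1.0) at 0.0 to the sampled texel at 1.0.
     */
    static float[] decodeNormal(int r, int g, int b, boolean yInvert, float strength) {
        float[] c = { r / 255f, g / 255f, b / 255f };
        if (yInvert) {
            c[1] = 1f - c[1]; // same as nColor.g = 1.0 - nColor.g
        }
        float[] base = { 0.5f, 0.5f, 1.0f };
        float[] n = new float[3];
        for (int i = 0; i < 3; i++) {
            // GLSL mix(a, b, t) = a * (1 - t) + b * t
            float mixed = base[i] * (1f - strength) + c[i] * strength;
            // remap from [0, 1] to [-1, 1]
            n[i] = mixed * 2f - 1f;
        }
        float len = (float) Math.sqrt(n[0] * n[0] + n[1] * n[1] + n[2] * n[2]);
        return new float[] { n[0] / len, n[1] / len, n[2] / len };
    }
}
```

A texel of (128, 128, 255) decodes to a normal pointing almost straight out of the surface, and a `strength` of 0.0 yields exactly (0, 0, 1) regardless of the texel, which is why lowering `strength` flattens the bump effect.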

At a later point I may go into more detail about how this all works (targeting newbies) and how it could be implemented in a practical way.

EDIT: Updated based on advice from theagentd and MatthiasM.