This thread was created to migrate over the replies from the thread “What I did today”

@KaiHH I don’t mean to derail this thread, but I’m also using Java (jMonkeyEngine) and happened to come across this particular post. My question is about the GL_POINT method outlined in the paper “A Ray-Box Intersection Algorithm and Efficient Dynamic Voxel Rendering”. I’m running into an issue with GL_POINTs that are far away, say more than 30 world units away: when I move or rotate the camera, the ray-traced voxel appears to shift toward the edges of the GL_POINT billboard.

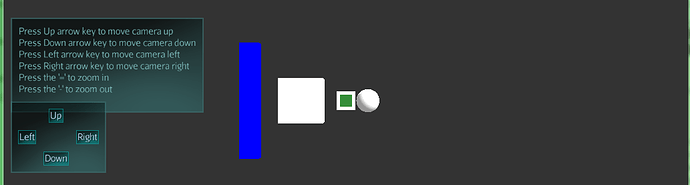

Attached is a use case that shows the issue I’m encountering:

The center green cube is ray traced and I have added a white background to better visualize the issues.

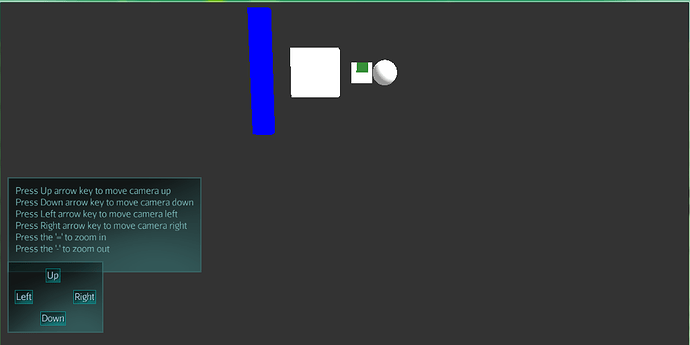

Here is the result when I rotate the camera downward:

The green cube seems to have shifted near the top edge of the point billboard.

The issue seems to occur only when the voxels are far away.

Here is the way I’m currently generating the ray in my fragment shader:

```glsl
vec3 getRayDir(vec3 camPos, vec3 viewDir, vec2 pixelPos) {
    vec3 camRight = normalize(cross(viewDir, vec3(0.0, 1.0, 0.0)));
    vec3 camUp = normalize(cross(viewDir, camRight));
    return normalize(pixelPos.x * camRight + pixelPos.y * camUp + CAM_FOV_FACTOR * viewDir);
}

void main() {
    // camPos and camTarget are passed in through material parameters.
    // camTarget is the forward vector of the camera; the formula I use to get
    // the forward is: camPos + (normalize(g_CameraDirection) * 1.0);
    vec2 p = (-resolution.xy + 2.0 * gl_FragCoord.xy) / resolution.y;

    // ray origin
    vec3 ro = camPos;

    // ray direction
    vec3 rd = getRayDir(camPos, normalize(camTarget - camPos), p);
}
```
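For anyone who wants to reproduce the numbers, the ray construction above can be mirrored on the CPU and evaluated for individual pixels. This is only a minimal sketch: the class `RayCheck` and the helper `pixelToP` are hypothetical names, and `CAM_FOV_FACTOR = 1.0` is an assumed value, since the post does not say what it is set to.

```java
// Hypothetical CPU-side mirror of the shader's ray construction, for sanity checks only.
final class RayCheck {
    // Assumed value; the actual CAM_FOV_FACTOR is not given in the post.
    static final double CAM_FOV_FACTOR = 1.0;

    static double[] cross(double[] a, double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    static double[] normalize(double[] v) {
        double len = Math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
        return new double[] { v[0] / len, v[1] / len, v[2] / len };
    }

    // Same arithmetic as the shader: p = (-resolution.xy + 2.0 * gl_FragCoord.xy) / resolution.y
    static double[] pixelToP(double fragX, double fragY, double resX, double resY) {
        return new double[] { (2.0 * fragX - resX) / resY, (2.0 * fragY - resY) / resY };
    }

    // Same arithmetic as getRayDir in the shader (camPos is unused there, so it is omitted here).
    static double[] getRayDir(double[] viewDir, double[] p) {
        double[] camRight = normalize(cross(viewDir, new double[] { 0.0, 1.0, 0.0 }));
        double[] camUp = normalize(cross(viewDir, camRight));
        double[] d = new double[3];
        for (int i = 0; i < 3; i++) {
            d[i] = p[0] * camRight[i] + p[1] * camUp[i] + CAM_FOV_FACTOR * viewDir[i];
        }
        return normalize(d);
    }
}
```

With `viewDir = (0, 0, -1)` and an 800x600 viewport, the center pixel (400, 300) yields the view direction itself. In this construction `camUp` evaluates to `(0, -1, 0)` for that view direction, so the top-center pixel (400, 600) produces a ray with a negative y component. Whether that sign is intended depends on the rest of the setup, which is not shown here.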