You’re not alone in this. I’m fairly certain you’re running into the exact problem I did, because I also built my own objects for handling matrices and vectors.

Also like you, I figured arrays would be the most natural way of handling this. Now you can still do it with arrays if you really want, but here’s what I found:

Arrays are objects, so every time you create one of those, you end up with the potential for garbage. This problem is compounded when you do things like set the Matrix by using a new float[] or have it return a new float[].
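To make that concrete, here’s a rough sketch of the two patterns. The class and method names (ArrayMatrix, getCopy, copyInto) are made up for illustration and aren’t from anyone’s actual code; the point is only where the allocation happens.

```java
// Hypothetical array-backed matrix, only to illustrate the allocation pattern.
class ArrayMatrix {
    private final float[] m = new float[16];

    // Allocates a fresh float[16] on every call. Called once per frame
    // (or per object per frame), each result quickly becomes garbage.
    float[] getCopy() {
        return m.clone();
    }

    // Writes into an array the caller already owns, so nothing is allocated.
    void copyInto(float[] dest) {
        System.arraycopy(m, 0, dest, 0, 16);
    }
}
```

If you do stay with arrays, the second style is the one that keeps the collector quiet.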

You may be wondering how you pass matrices around without referencing the original matrix. If you pass a matrix to a method and have it mangle the bastard, it destroys the original copy you wanted to keep, because the method receives a reference to the same object (pedantic note: Java is strictly pass by value, but for objects the value being passed is the reference).
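Here’s a tiny demonstration of that behaviour. Cell is a made-up one-field stand-in for a matrix, just to keep it short.

```java
// Hypothetical one-field stand-in for a matrix, to keep the example short.
class Cell {
    float value = 1f;
}

class AliasDemo {
    // The parameter is a copy of the reference, so it points at the caller's object.
    static void mangle(Cell c) {
        c.value = 99f;   // changes the caller's object
        c = new Cell();  // only rebinds the local copy of the reference
        c.value = -1f;   // does not touch the caller's object
    }

    public static void main(String[] args) {
        Cell original = new Cell();
        mangle(original);
        System.out.println(original.value); // prints 99.0
    }
}
```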

The answer comes from a seemingly unlikely place. Since Java handles objects as references and primitives as values, it’s actually easier to have your Matrices and Vectors contain primitives. For me it felt like this goes against what you’re taught in programming 101. But like everything with code, it depends on what you need it for.

So here’s what I did:

I changed my matrices to be something like

public float c00 =1; public float c10 =0; public float c20 =0; public float c30 =0;

public float c01 =0; public float c11 =1; public float c21 =0; public float c31 =0;

public float c02 =0; public float c12 =0; public float c22 =1; public float c32 =0;

public float c03 =0; public float c13 =0; public float c23 =0; public float c33 =1;

And of course the same thing with the vectors.
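For the vectors, a minimal sketch might look like the following. The names (Vector3, x, y, z, setVector, add) are my placeholders here, not a fixed API; use whatever fits your own classes.

```java
// Hypothetical vector class following the same field-per-component idea.
class Vector3 {
    public float x = 0;
    public float y = 0;
    public float z = 0;

    // Copies the components from another vector; no allocation, no shared reference.
    public void setVector(Vector3 v) {
        this.x = v.x; this.y = v.y; this.z = v.z;
    }

    // Adds another vector to this one in place.
    public void add(Vector3 v) {
        this.x += v.x; this.y += v.y; this.z += v.z;
    }
}
```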

Now you can do all the multiplication and whatnot by passing matrices into other matrices and then doing your operation using the primitives.
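As a sketch of what such an operation can look like, here’s a multiply on a made-up 2x2 class (Matrix2 and multiply are illustrative names); a 4x4 follows the same pattern with more terms. I’m assuming the cXY naming means column X, row Y, which is how the fields above appear to be laid out. The results go into locals first so it still works if you pass a matrix to itself.

```java
// Hypothetical 2x2 matrix; assumes cXY means column X, row Y.
class Matrix2 {
    public float c00 = 1; public float c10 = 0;
    public float c01 = 0; public float c11 = 1;

    // Computes this = this * m using only primitives; nothing is allocated.
    public void multiply(Matrix2 m) {
        // Each result cell is (row of this) dot (column of m).
        // Locals hold the results so the inputs are not overwritten mid-calculation.
        float n00 = c00 * m.c00 + c10 * m.c01;
        float n10 = c00 * m.c10 + c10 * m.c11;
        float n01 = c01 * m.c00 + c11 * m.c01;
        float n11 = c01 * m.c10 + c11 * m.c11;

        c00 = n00; c10 = n10;
        c01 = n01; c11 = n11;
    }
}
```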

Here’s an example of setting a matrix from another matrix

public void setMatrix(Matrix4 matrix){ //Set the matrix

this.c00 = matrix.c00; this.c10 = matrix.c10; this.c20 = matrix.c20; this.c30 = matrix.c30;

this.c01 = matrix.c01; this.c11 = matrix.c11; this.c21 = matrix.c21; this.c31 = matrix.c31;

this.c02 = matrix.c02; this.c12 = matrix.c12; this.c22 = matrix.c22; this.c32 = matrix.c32;

this.c03 = matrix.c03; this.c13 = matrix.c13; this.c23 = matrix.c23; this.c33 = matrix.c33;

}

This way you avoid linking two matrices together by reference. The above method copies every value out of the matrix which was passed in, so the two matrices stay independent and no new object gets created.

This actually turns out to be quite fast. I was a little concerned that using primitives like this would eventually lead to slowing things down. So I did some testing and it turns out Java is very fast at setting primitive values. And since you’ll only handle up to 16 values, you’re not losing out by skipping arrays.

It also makes accessing individual cells kind of nice.

Conclusion:

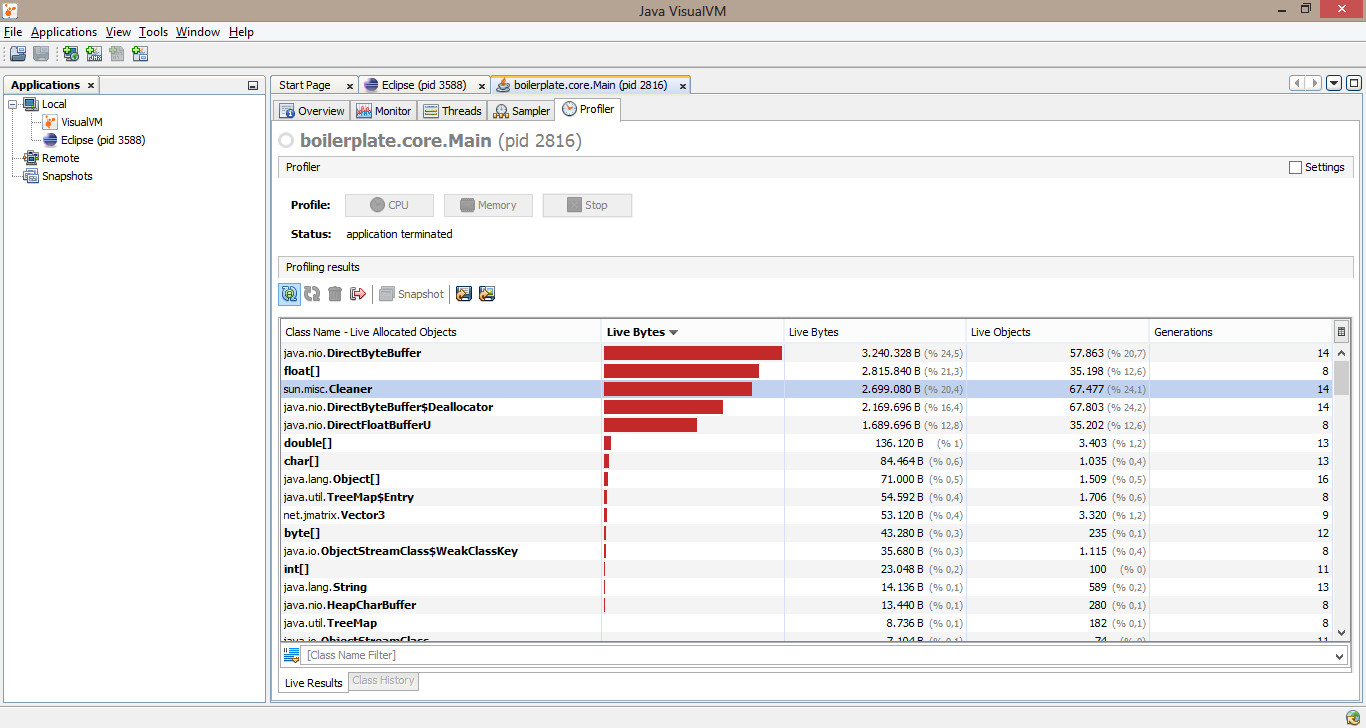

I started down the rabbit hole of correcting my math because I encountered the stuttering effect due to garbage collection. Before I fixed everything, I noticed my used memory was accumulating faster than an ex-wife could amass debt with her ex-husband’s credit card. It was knocking over hundreds in seconds.

Now that I’ve cleaned the math up and reused objects like matrices, it takes a couple seconds before I even see it kick over 1 MB. I imagine you’ll encounter the same.

And like the others have said before me, reusing your Buffers will also go a long way.
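For the buffer part, a minimal sketch of the idea is below. VertexUploader and fill are names I made up, and I’m using a plain heap FloatBuffer to keep it short; many OpenGL bindings expect a direct buffer instead, so treat the allocation line as an assumption to adapt.

```java
import java.nio.FloatBuffer;

// Hypothetical helper: one buffer allocated up front and refilled on every call.
class VertexUploader {
    // Allocated once. Assumption: a heap buffer for brevity; swap in a
    // direct buffer if your graphics binding requires one.
    private final FloatBuffer buffer = FloatBuffer.allocate(3);

    // Refills the same buffer from primitives and returns it ready for reading.
    FloatBuffer fill(float x, float y, float z) {
        buffer.clear();               // reset position and limit, same storage
        buffer.put(x).put(y).put(z);  // write the three components
        buffer.flip();                // limit = 3, position = 0
        return buffer;
    }
}
```

Every call hands back the same FloatBuffer instance, so nothing new is allocated per frame.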

In the end, I think most of us go through this at one point or another. So don’t let it get you down.