Greetings, everyone. This guide is here to teach you all about gamma correction and why you need to take it into consideration. Let’s get started!

Background

So, what is gamma correction/encoding/decoding? The idea of gamma encoding came from trying to optimize the memory usage of images. The key observation was that a simple linear representation, where 0.0 is black, 1.0 is white and 0.5 is the exact middle between the two, is not enough to store an image with 8 bits per channel: low values show visible banding, while values close to 1.0 show none at all, since human eyes are much less sensitive to changes in light intensity at high values. In other words, there is an excess of precision close to 1.0 and insufficient precision around 0.0.

To solve this, you can apply a function to the intensities to redistribute the precision. If we for example apply the square root function to each value, then 0.5 gets increased to 0.707. Using 8 bits for each color (0 to 255), that means transforming 127 all the way up to 180. In other words, the darker half of the range now gets 180 unique values, while the brighter half only gets 75. Hence, the general approach of gamma encoding is to take the 0.0 to 1.0 color values and run them through a power function x^g, where g is the gamma value (0.5 in the previous example). To do gamma decoding, we run the encoded value through x^(1/g) to get the original value back again, since (x^g)^(1/g) = x^(g*1/g) = x^1 = x.
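Here is a minimal sketch of that encode/decode round trip with the square root example. The function names (`gamma_encode`, `gamma_decode`, `to_8bit`) are made up for illustration and are not from any library.

```python
def gamma_encode(x: float, g: float) -> float:
    """Apply the power function x^g to an intensity in [0.0, 1.0]."""
    return x ** g


def gamma_decode(y: float, g: float) -> float:
    """Undo gamma_encode by applying y^(1/g)."""
    return y ** (1.0 / g)


def to_8bit(x: float) -> int:
    """Quantize an intensity in [0.0, 1.0] to an integer code in 0..255."""
    return int(x * 255.0 + 0.5)


g = 0.5  # square root, as in the example above
encoded = gamma_encode(127 / 255, g)

print(to_8bit(encoded))                   # the 8-bit code that linear 127 is stored as
print(to_8bit(gamma_decode(encoded, g)))  # decoding brings us back to the linear code
```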

By sheer coincidence, it turns out that old CRT monitors don’t have a linear response to the voltage applied to their electron gun. For most electron guns, the color intensity produced by a voltage x follows a non-linear curve somewhere between x^1.8 and x^2.2. Hence, if we encode our images with gamma 1/2.2, we end up cancelling out the non-linear response of the electron gun and get a linear result like we want, while getting all the precision redistribution benefits of gamma encoding. For gamma 1/2.2, 127 is brought up to 186, giving us 186 values between 0.0 and 0.5, and 69 values between 0.5 and 1.0. Perfect! Now, we don’t have CRT monitors anymore, but today’s monitors and TVs still follow a gamma of around 2.2. They often use hardware look-up tables that convert an 8-bit color intensity to the voltage to apply to each pixel to achieve this.
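To make the cancellation concrete, here is a small sketch that assumes an idealized display with a pure x^2.2 response. Real monitors only approximate this, and the names `encode_for_display` and `display_response` are hypothetical.

```python
DISPLAY_GAMMA = 2.2  # assumption: idealized display response of exactly x^2.2


def encode_for_display(linear: float) -> float:
    """Gamma-encode a linear intensity with gamma 1/2.2."""
    return linear ** (1.0 / DISPLAY_GAMMA)


def display_response(signal: float) -> float:
    """Model what the (idealized) display emits for an encoded signal."""
    return signal ** DISPLAY_GAMMA


# The 8-bit code that the linear midpoint (127) is stored as.
mid_code = int(encode_for_display(127 / 255) * 255 + 0.5)
dark_codes = mid_code          # codes spent on the darker half
bright_codes = 255 - mid_code  # codes left for the brighter half
print(mid_code, dark_codes, bright_codes)

# Encoding followed by the display's response gives the linear value back.
emitted = display_response(encode_for_display(0.25))
print(emitted)
```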

Why should I care? I’ve never seen anything look wrong?

Indeed, if you load in an image file from your computer and just display it, you will get a 100% gamma correct result. Why? Because all major image formats (JPG, PNG, BMP, even XMI) are stored in the sRGB color space, which is very close to gamma 1/2.2 (its curve uses an exponent of 1/2.4 plus a short linear segment near black). If you simply take the image and load it into Java2D/OpenGL/Vulkan/whatever, you are taking a gamma 1/2.2 image and displaying it on a gamma 2.2 monitor, which cancels out, so why do you need to care? A major problem occurs when we try to manipulate the colors in the image. As we already know, a color intensity of 0.5 corresponds to 186 in gamma 1/2.2. Hence, half the brightness of 255 is 186, not 127. As soon as you start multiplying, scaling or adding together colors, you need to take the gamma into account!
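As a rough illustration, here is what averaging a black and a white pixel looks like when done directly on the stored values versus in linear space. This sketch assumes a pure gamma of 2.2 rather than the exact sRGB curve, and the helper names are made up.

```python
GAMMA = 2.2  # assumption: pure power curve, close to (but not exactly) sRGB


def decode(code: int) -> float:
    """Stored 8-bit value -> linear intensity in [0.0, 1.0]."""
    return (code / 255) ** GAMMA


def encode(linear: float) -> int:
    """Linear intensity in [0.0, 1.0] -> stored 8-bit value."""
    return int(linear ** (1.0 / GAMMA) * 255 + 0.5)


def average_naive(a: int, b: int) -> int:
    """Average the stored values directly, ignoring gamma."""
    return (a + b) // 2


def average_linear(a: int, b: int) -> int:
    """Decode to linear, average the light intensities, encode again."""
    return encode((decode(a) + decode(b)) / 2)


print(average_naive(0, 255))   # too dark: treats stored values as if they were linear
print(average_linear(0, 255))  # the stored value that really is half as bright as white
```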

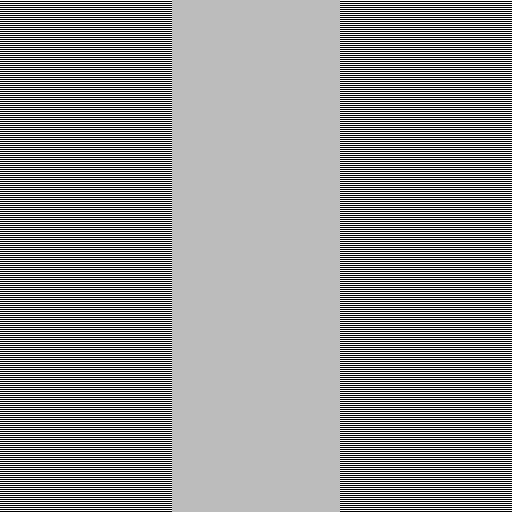

Here’s a simple proof that gamma correction is required for correctness. Make sure that you view these pictures at their exact resolutions without any scaling (i.e. not on a phone). Take a step back so that the lines on the side of the pictures look like a single solid color. Here’s the version WITHOUT gamma correction.

Note that the actual solid color block in the middle is 127, but it looks much darker than the “physical” true gray you see by looking at the alternating black/white lines on the side from a distance. However, if you gamma correct the center…

Now, that’s much closer to what you would expect! The center block here is 188, which is what 0.5 encodes to with the exact sRGB curve (a pure 1/2.2 gamma gives 186). It probably doesn’t look exactly like the side bars, depending on your monitor and its settings, but it gives you a good idea of why we need gamma correction to get correct results!

OK, so my game’s colors are incorrect, but they still look good?

True, not doing gamma correction will give you significantly darker gray values and a deeper look, but it’s not physically accurate. If you’re aiming for realism, you will not be able to get realistic results (or even results that make sense at all) without taking gamma into account. For example, a linear gradient will look more pleasant without gamma correction, as the color intensity basically gets squared. With gamma correction, most of the gradient will look white, as our eyes aren’t as sensitive at high light intensities. It’s important not to mix up accuracy and how good something looks.

Fair enough, how do I make my graphics gamma correct?

To be able to scale a value or blend together two values correctly, we must convert the sRGB values to a linear color space, apply the changes we want, then convert the result back to sRGB so that it is displayed correctly. Graphics cards actually have two hardware features for doing this for us.
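Done by hand on the CPU, that decode, modify, encode pipeline could look something like the sketch below. The two transfer functions use the standard piecewise sRGB formulas; the wrapper `apply_in_linear` is a hypothetical helper, not part of any API.

```python
from typing import Callable


def srgb_to_linear(s: float) -> float:
    """Decode an sRGB-encoded value in [0.0, 1.0] to linear."""
    if s <= 0.04045:
        return s / 12.92
    return ((s + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l: float) -> float:
    """Encode a linear value in [0.0, 1.0] to sRGB."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1.0 / 2.4) - 0.055


def apply_in_linear(code: int, operation: Callable[[float], float]) -> int:
    """Decode an 8-bit sRGB value, apply `operation` in linear space, re-encode."""
    linear = srgb_to_linear(code / 255)
    result = min(max(operation(linear), 0.0), 1.0)  # clamp to the displayable range
    return int(linear_to_srgb(result) * 255 + 0.5)


# Halving the brightness of white, done in linear space.
print(apply_in_linear(255, lambda v: v * 0.5))
```

Note that the exact sRGB curve lands on 188 here, slightly above the 186 you get from a pure 1/2.2 gamma.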

The first feature is related to how textures are handled. If we were to decode sRGB (pretty close to applying x^2.2 to each channel) when we load a texture from file, we would absolutely destroy the precision of the values if we then stored the result in a simple 8-bit texture. For example, all values in the image that are darker than 14 would map to 0, completely devastating the precision for the blacks. Then, when we encode back to sRGB before displaying the result on the screen, we undo this transformation again, but the damage is already done. We’ve lost the additional precision that sRGB gives where it matters and given it to whites instead! Luckily, there is hardware to handle this! OpenGL 2.1 hardware has support for loading the raw sRGB data into a texture and having the GPU do the conversion for us! What does this mean? It means that when our shader samples 186 from the sRGB texture, texture() will return roughly 0.5 instead! Similarly, if it samples a 1, it will be converted to around 0.0003, a VERY small number. Even better, it will also perform texture filtering correctly in linear space. In other words, we have massively improved precision of blacks where we need it! To get the same precision for blacks with a linear color space texture, we’d need at least a 16-bit texture!!!
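You can see the damage for yourself by decoding all 256 possible stored values and squeezing the linear results back into 8 bits. This sketch assumes the pure x^2.2 approximation mentioned above (the exact sRGB curve crushes fewer values because of its linear segment near black), and `decode_to_linear8` is a made-up name.

```python
GAMMA = 2.2  # assumption: pure power curve approximation of sRGB


def decode_to_linear8(code: int) -> int:
    """Decode a stored 8-bit value to linear, then re-quantize to 8 bits."""
    linear = (code / 255) ** GAMMA
    return int(linear * 255 + 0.5)


linear_codes = [decode_to_linear8(c) for c in range(256)]

# How many of the darkest stored values collapse to pure black?
crushed_to_black = sum(1 for v in linear_codes if v == 0)

# How many distinct values survive out of the original 256?
distinct = len(set(linear_codes))

print(crushed_to_black, distinct)
```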

Great! So we’ve managed to get high precision linear color values into our shader! If your game/graphics engine uses HDR render targets (16-bit float textures), you’re free to use linear colors (read: what you’re already using) in your entire engine from now on and not have to worry about gamma at all. At the end when you copy the result to the framebuffer, just apply an sRGB/gamma transform to convert it back to sRGB/gamma color space to compensate for the monitor’s gamma curve and you’re done!

However, not all games want to use high-precision render targets, for memory usage or performance reasons. There may simply not be a need for it. In this case, we get the same problem as above when we write our linear color values to a simple 8-bit texture, destroying the precision. When we later convert our values back to sRGB for displaying, we amplify the precision loss and get horrifying banding. Luckily, there is a second hardware feature you can take advantage of, this time in OpenGL 3.0 hardware. This feature allows you to seamlessly use sRGB textures as render targets without having to worry about any conversions yourself. By enabling GL_FRAMEBUFFER_SRGB, you tell the hardware to do all the conversion for you. When you have an sRGB texture attached to the framebuffer object you’re rendering to and GL_FRAMEBUFFER_SRGB is enabled, the hardware will:

- Convert the linear color values you write from your shader to sRGB space before writing them to the texture.

- Do blending with linear colors by reading the sRGB destination values, converting them to linear, blending them with the linear values from the shader, then converting the result to sRGB for storage again.

- Even do correct color space conversions when blitting between framebuffers, for example between a 16-bit floating point texture and an SRGB texture.

- Never give you up.

- Never let you down.
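To get a feel for what the blending step in that list amounts to, here is a CPU-side sketch of a single channel. It assumes classic “source over” alpha blending and the standard piecewise sRGB formulas; `blend_into_srgb_target` is a hypothetical name, and the real hardware path may differ in rounding details.

```python
def srgb_to_linear(s: float) -> float:
    """Decode an sRGB-encoded value in [0.0, 1.0] to linear."""
    if s <= 0.04045:
        return s / 12.92
    return ((s + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l: float) -> float:
    """Encode a linear value in [0.0, 1.0] to sRGB."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1.0 / 2.4) - 0.055


def blend_into_srgb_target(src_linear: float, src_alpha: float, dst_code: int) -> int:
    """Blend a linear shader output over an 8-bit sRGB destination value."""
    dst_linear = srgb_to_linear(dst_code / 255)                      # read + decode
    blended = src_linear * src_alpha + dst_linear * (1 - src_alpha)  # blend in linear
    return int(linear_to_srgb(blended) * 255 + 0.5)                  # encode + store


# 50% transparent white over black ends up as the sRGB encoding of 0.5.
print(blend_into_srgb_target(1.0, 0.5, 0))
```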

It’s actually possible to request an sRGB default framebuffer for OpenGL when creating the OpenGL context so that you can use it with GL_FRAMEBUFFER_SRGB. Weirdly enough, Nvidia ALWAYS gives you an sRGB-capable default framebuffer even if you ask it not to, but when you check if it’s sRGB it says no. A workaround is to render a 0.5 color pixel with GL_FRAMEBUFFER_SRGB and read it back. If it’s 127, it’s a linear framebuffer. If it’s ~186ish, then it’s sRGB.
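The decision part of that workaround can be sketched as below. The actual rendering and read-back need a live OpenGL context and are not shown; `classify_default_framebuffer` is a made-up helper that only interprets the 8-bit value you read back after writing 0.5.

```python
def linear_to_srgb(l: float) -> float:
    """Encode a linear value in [0.0, 1.0] to sRGB (standard piecewise formula)."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1.0 / 2.4) - 0.055


def classify_default_framebuffer(readback_code: int) -> str:
    """Guess the framebuffer type from the value read back after writing 0.5."""
    linear_expected = 0.5 * 255                # what a linear framebuffer would store
    srgb_expected = linear_to_srgb(0.5) * 255  # what an sRGB framebuffer would store
    if abs(readback_code - srgb_expected) < abs(readback_code - linear_expected):
        return "srgb"
    return "linear"


print(classify_default_framebuffer(127))
print(classify_default_framebuffer(188))
```

Picking whichever expected value is closer leaves some slack for drivers that round slightly differently.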

Color gradient comparison

A simple linear color gradient rendered to a GL_RGB8 texture, then blitted to the screen with GL_FRAMEBUFFER_SRGB doing an automatic sRGB conversion, ruining the precision:

The same procedure, but with a GL_SRGB8 texture instead (meaning the sRGB conversion is done when writing the 32-bit float pixel color values to the first texture, not when blitting 8-bit values):
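The difference between the two paths can also be counted on the CPU. The sketch below simulates one channel of a linear gradient going through each path and counts how many distinct values reach the screen. It uses the standard piecewise sRGB encode formula; the sample count and variable names are arbitrary choices for illustration.

```python
def linear_to_srgb(l: float) -> float:
    """Encode a linear value in [0.0, 1.0] to sRGB."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1.0 / 2.4) - 0.055


def quantize(x: float) -> int:
    """Store a value in [0.0, 1.0] as an 8-bit code."""
    return int(x * 255 + 0.5)


SAMPLES = 4096  # arbitrary: number of float shader outputs along the gradient
gradient = [i / (SAMPLES - 1) for i in range(SAMPLES)]

# Path 1: linear values are quantized into RGB8 first, then sRGB-encoded on blit.
via_rgb8 = [quantize(linear_to_srgb(quantize(v) / 255)) for v in gradient]

# Path 2: float values are sRGB-encoded as they are written into SRGB8.
via_srgb8 = [quantize(linear_to_srgb(v)) for v in gradient]

distinct_rgb8 = len(set(via_rgb8))
distinct_srgb8 = len(set(via_srgb8))
first_step_rgb8 = sorted(set(via_rgb8))[1]  # first non-black level on screen

print(distinct_rgb8, distinct_srgb8, first_step_rgb8)
```

The jump from black straight to `first_step_rgb8` in the first path is the banding you see in the dark end of the gradient.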