[EDIT: now at github.com/philfrei/AudioCue. Parts of the following post are obsolete due to the addition of AudioMixer.]

AudioCue was written for Java 2D game programmers. Its purpose is to make it easier to create soundscapes and to handle the common sound needs and desires that arise in 2D games. The code consists of one largish class, AudioCue, plus a supporting class and an interface that are used for line listening. The classes are provided as-is and can pretty much be dropped into your project. All the code is core Java; no external libraries are used. The code is free and carries a BSD license.

For 2D game programmers, the main option for sound effects has been to use the core Java Clip. Clip has some distinct limitations:

I. Does not support concurrent playback

A Clip cannot be played concurrently with itself. There are two basic options if, for example, you want a rapid-fire “bang bang bang” where a new shot is triggered before the previous sound effect has finished playing:

- stop the cue midway, move the “play head” back to the beginning, and restart the cue;

- create and manage many Clips of the same sound.

With AudioCue, it remains possible to start, reposition, and stop the “play head” just as you can with a Clip, but you can also fire-and-forget the cue as often as you like (up to a configured maximum) and the playing instances will all be mixed down to a single output line.
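Conceptually, fire-and-forget playback needs only a set of read positions ("play heads") into one shared sample array, summed frame by frame into a single output. The sketch below illustrates that idea; it is not AudioCue's source. The class name `PolyphonicBuffer`, its methods, the use of mono data, and the hard clip to [-1, 1] are all assumptions made for brevity.

```java
import java.util.Arrays;

// Hypothetical illustration: several concurrent "play heads" over one sample array,
// summed into a single output buffer. Not AudioCue's actual implementation.
class PolyphonicBuffer {
    private final float[] cue;    // mono sample data, values in [-1, 1]
    private final int[] cursors;  // read position of each instance, -1 = idle

    PolyphonicBuffer(float[] cue, int maxInstances) {
        this.cue = cue;
        this.cursors = new int[maxInstances];
        Arrays.fill(cursors, -1);
    }

    /** Starts a new instance; returns its slot, or -1 if all slots are busy. */
    int play() {
        for (int i = 0; i < cursors.length; i++) {
            if (cursors[i] < 0) {
                cursors[i] = 0;
                return i;
            }
        }
        return -1;
    }

    /** Fills out with the sum of all active instances, advancing each one. */
    void read(float[] out) {
        for (int f = 0; f < out.length; f++) {
            float sum = 0;
            for (int i = 0; i < cursors.length; i++) {
                if (cursors[i] >= 0) {
                    sum += cue[cursors[i]++];
                    if (cursors[i] >= cue.length) cursors[i] = -1; // finished: free the slot
                }
            }
            out[f] = Math.max(-1f, Math.min(1f, sum)); // hard clip to the legal range
        }
    }
}
```

In this sketch, returning -1 when every slot is busy plays the role of the configured maximum: once the limit is reached, further play requests are ignored rather than opening more output lines.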

II. Spotty real-time volume fading

A Clip requires the use of a Control class for managing volume changes. The availability of these classes is somewhat machine-dependent. Also, changes only take effect between buffer loop iterations, which leads to discontinuity-generated clicks when the setting is asked to vary too quickly.

With AudioCue, there is no reliance on machine-specific Controls. Volume changes are processed internally, on a per-sample-frame basis. Change requests are received asynchronously via a setter that lies outside the audio processing loop, and they can take effect at any point during a buffer loop iteration. A smoothing algorithm also eliminates discontinuities by spreading each change request over individual sample frames. The result is a fast, responsive, and smooth volume fade.

As a bonus, the same mechanism is used for real-time panning and pitch changes! (See the SlidersTest.jar below for an example.)
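The per-frame smoothing described above can be illustrated with a simple linear ramp: a setter stores the requested target from any thread, and the audio loop moves the current value a fixed step toward it on every sample frame, so no single frame jumps abruptly. This is a minimal sketch under those assumptions; `SmoothedParam`, the linear ramp, and the ramp length are hypothetical and not necessarily the algorithm AudioCue uses. The same object could hold a pan or speed value as easily as a volume.

```java
// Hypothetical sketch of click-free parameter changes: set() may be called from
// any thread (e.g. a GUI slider); the audio thread calls next() once per frame.
class SmoothedParam {
    private final int rampFrames;    // frames used to reach a new target
    private volatile double target;  // written by the setter thread
    private double current;          // only touched by the audio thread
    private double lastTarget;       // target the active ramp was computed for
    private double step;             // per-frame increment of the active ramp
    private int framesLeft;

    SmoothedParam(double initial, int rampFrames) {
        if (rampFrames < 1) throw new IllegalArgumentException("rampFrames must be >= 1");
        this.current = this.target = this.lastTarget = initial;
        this.rampFrames = rampFrames;
    }

    void set(double value) { target = value; }

    /** Returns the value to apply to the current sample frame. */
    double next() {
        double t = target;
        if (t != lastTarget) {       // a new request arrived: start a fresh ramp
            lastTarget = t;
            step = (t - current) / rampFrames;
            framesLeft = rampFrames;
        }
        if (framesLeft > 0) {
            current += step;
            if (--framesLeft == 0) current = lastTarget; // land exactly on the target
        }
        return current;
    }
}
```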

FROG POND (soundscape example)

The following jar is an example of a soundscape: frogpond.jar.

You can also hear what this jar does by listening to this wav file: frogpond.wav.

The jar generates background audio that suggests many frogs croaking in a nearby pond, and runs for 15 seconds. Very little code and a single asset are all that is required to create a fairly complex, ever-varying sound effect.

The jar includes source. Here is the most relevant code:

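```java
// Excerpt (requires java.net.URL). Thread.sleep throws the checked InterruptedException,
// so the enclosing method must handle or declare it, along with any checked
// exceptions the AudioCue methods declare.
URL url = this.getClass().getResource("res/frog.wav"); // 1
AudioCue cue = AudioCue.makeStereoCue(url, 4); // 2
cue.open(); // 3
Thread.sleep(100); // 4

// Play for 15 seconds.
long futureStop = System.currentTimeMillis() + 15_000;
while (System.currentTimeMillis() < futureStop) {
    cue.play(0.3 + (Math.random() * 0.5), // 5
             1 - Math.random() * 2,       // 6
             1.02 - Math.random() * 0.08, // 7
             0);                          // 8
    Thread.sleep((int)(Math.random() * 750) + 50); // 9
}

Thread.sleep(1000); // let the last croaks finish
cue.close(); // 10
```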

(1) You locate the resource the same way as you would for a Clip or SourceDataLine. Only URLs are supported, though, because a URL can identify a file packed inside a jar, which a File object cannot.
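Since `Class.getResource` returns `null` rather than throwing when a path is wrong, a small guard can make a missing asset fail loudly at startup instead of later inside the cue. The helper below, `Assets.require`, is hypothetical and not part of AudioCue.

```java
import java.net.URL;

// Hypothetical helper: resolve a classpath resource (works from the file system
// and from inside a jar) and fail fast if it is missing.
class Assets {
    static URL require(Class<?> anchor, String path) {
        URL url = anchor.getResource(path); // relative paths resolve against anchor's package
        if (url == null)
            throw new IllegalArgumentException("resource not found: " + path);
        return url;
    }
}
```

Usage would look like `URL url = Assets.require(getClass(), "res/frog.wav");` in place of the bare `getResource` call.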

(2) A static “make” method is used rather than a constructor. This follows suggestions Joshua Bloch made in an article about API design, and it also makes it easy to add another factory method down the road, for example one for a mono, delay-based panned sound effect. The “4” in the argument is the maximum number of instances that can play at one time.
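For readers unfamiliar with the pattern, here is a generic sketch of a static factory with a private constructor. The class `Cue` and its methods are hypothetical and only mirror the shape of `makeStereoCue`; they are not AudioCue's API. A named factory can validate its arguments and leaves room for sibling factories (such as the mono variant mentioned above) whose parameter lists might otherwise collide as constructor overloads.

```java
import java.util.Objects;

// Hypothetical illustration of the static-factory pattern (not AudioCue's code).
final class Cue {
    private final float[] data;
    private final int polyphony; // maximum number of concurrent instances
    private final boolean stereo;

    private Cue(float[] data, int polyphony, boolean stereo) { // callers cannot use `new`
        this.data = data;
        this.polyphony = polyphony;
        this.stereo = stereo;
    }

    static Cue makeStereoCue(float[] data, int polyphony) {
        Objects.requireNonNull(data, "data");
        if (data.length % 2 != 0)
            throw new IllegalArgumentException("stereo data needs an even number of samples");
        if (polyphony < 1)
            throw new IllegalArgumentException("polyphony must be at least 1");
        return new Cue(data, polyphony, true);
    }

    int polyphony() { return polyphony; }
    boolean isStereo() { return stereo; }
    int frameCount() { return stereo ? data.length / 2 : data.length; }
}
```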

(3) This call opens an underlying SourceDataLine for output and starts sending blank audio. At this stage you can also change the buffer size, select a specific Mixer for output, or specify the thread priority, by using a more complex form of the method:

```java
void open(javax.sound.sampled.Mixer mixer, int bufferFrames, int threadPriority)
```

A larger buffer may be needed to prevent dropouts as higher numbers of concurrent instances are allowed, but a larger buffer also increases latency. Making the buffer size a configurable parameter allows you to balance these needs.
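To put numbers on the latency trade-off: at the 44,100 frames per second of CD-quality audio, a buffer of N frames holds N / 44,100 seconds of sound, which is roughly the delay that buffer adds before a play command can be heard (the line and the operating system add their own on top). A quick calculation, using a hypothetical helper:

```java
// Hypothetical helper: how many milliseconds of audio a buffer of the given size holds.
class BufferLatency {
    static final double CD_FRAME_RATE = 44_100.0; // frames per second, CD quality

    static double millis(int bufferFrames) {
        return bufferFrames * 1000.0 / CD_FRAME_RATE;
    }

    public static void main(String[] args) {
        for (int frames : new int[] {256, 1024, 4096, 8192}) {
            System.out.printf("%5d frames = %6.1f ms%n", frames, millis(frames));
        }
    }
}
```

For example, 1024 frames works out to about 23.2 ms.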

(4) I’m not sure whether this pause is truly needed, or how long it should be. I mostly wanted to give the open method a chance to complete before any play commands execute. [TODO: a detail to figure out]

(5) Here we set the volume. The permitted range (clamped by AudioCue) is 0 to 1. For soundscape purposes, different volumes suggest different distances between the listener and the virtual frog that emits a given croak.

(6) Here we set the panning. The permitted range (also clamped) is -1 (100% left) to 1 (100% right), and panning is done with a volume-based algorithm. There are currently three different volume-based algorithms available; consult the API for their specifics and how to select one.
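As an illustration of what a volume-based pan does, here are two common textbook laws: a linear one, where the left and right gains always sum to 1, and a constant-power one, where their squares sum to 1 so perceived loudness stays steadier across the sweep. These formulas are chosen for illustration and are not necessarily among the three algorithms AudioCue offers; the class `PanLaws` is hypothetical.

```java
// Two textbook pan laws mapping pan in [-1, 1] (-1 = full left, 1 = full right)
// to a {left, right} gain pair. Illustrative only; not taken from AudioCue.
class PanLaws {
    static double clamp(double pan) {
        return Math.max(-1.0, Math.min(1.0, pan));
    }

    /** Linear: gains sum to 1, so the centre position gives 0.5 per side. */
    static double[] linear(double pan) {
        double p = clamp(pan);
        return new double[] {(1 - p) / 2, (1 + p) / 2};
    }

    /** Constant power: quarter-cycle cosine/sine, so left^2 + right^2 = 1. */
    static double[] constantPower(double pan) {
        double angle = (clamp(pan) + 1) * Math.PI / 4; // maps [-1, 1] onto [0, pi/2]
        return new double[] {Math.cos(angle), Math.sin(angle)};
    }
}
```

With the linear law a centred sound gets 0.5 per side, which can sound quieter than the same sound panned hard to one side; the constant-power law gives about 0.707 per side to compensate.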

(7) Here we set the pitch. A slight speed-up or slow-down of the playback rate helps give the impression of many different frogs, some larger, some smaller. The setting is a factor applied to the playback rate: 0.5 halves the playback speed, and 2 doubles it. Permitted values range from 0.125 to 8.
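A playback-rate factor like this is commonly implemented by advancing a fractional read position by the factor on each output frame and interpolating between the two neighbouring samples. The sketch below uses linear interpolation on mono data; the class name and the interpolation choice are assumptions, not a description of AudioCue's internals.

```java
// Hypothetical sketch: render mono data at a playback-speed factor by stepping a
// fractional read position and linearly interpolating neighbouring samples.
class VarispeedSketch {
    static float[] render(float[] cue, double speed) {
        if (speed < 0.125 || speed > 8)          // same permitted range as in the post
            throw new IllegalArgumentException("speed out of range: " + speed);
        if (cue.length < 2)
            throw new IllegalArgumentException("need at least two samples");
        int last = cue.length - 1;
        int outFrames = (int) Math.ceil(last / speed);
        float[] out = new float[outFrames];
        for (int i = 0; i < outFrames; i++) {
            double pos = i * speed;                  // fractional "play head" position
            int idx = Math.min((int) pos, last - 1); // guard against rounding at the end
            double frac = pos - idx;                 // distance past sample idx
            out[i] = (float) (cue[idx] * (1 - frac) + cue[idx + 1] * frac);
        }
        return out;
    }
}
```

At a factor of 2 the sound finishes in half the time and plays an octave higher; at 0.5 it takes twice as long and plays an octave lower, which is why speed and pitch move together with this approach.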

(8) This is the loop parameter. Since each croak should play once and end, we set it to zero.

(9) A random interval, here between 50 and 799 milliseconds, is generated to space out the croaks.
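It can help to spell out the ranges the random expressions in the loop produce, since `Math.random()` returns a value in [0, 1). The sketch below gathers the same expressions (comments 5, 6, 7, and 9) into a hypothetical `CroakParams` class that takes a `java.util.Random`, so a fixed seed can reproduce a given "pond". It shows that volume falls in [0.3, 0.8), pan in (-1, 1], speed in (0.94, 1.02], and the pause in 50 to 799 milliseconds.

```java
import java.util.Random;

// The per-croak randomisation from the frog-pond loop, pulled into one place.
// CroakParams is hypothetical; the expressions match comments 5, 6, 7, and 9.
final class CroakParams {
    final double volume, pan, speed;
    final int pauseMillis;

    CroakParams(Random rng) {
        volume = 0.3 + rng.nextDouble() * 0.5;             // [0.3, 0.8): nearer or farther frogs
        pan = 1 - rng.nextDouble() * 2;                    // (-1, 1]: anywhere in the stereo field
        speed = 1.02 - rng.nextDouble() * 0.08;            // (0.94, 1.02]: larger or smaller frogs
        pauseMillis = (int) (rng.nextDouble() * 750) + 50; // 50..799 ms until the next croak
    }
}
```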

(10) The close method releases the underlying SourceDataLine.

REAL-TIME FADING (example program)

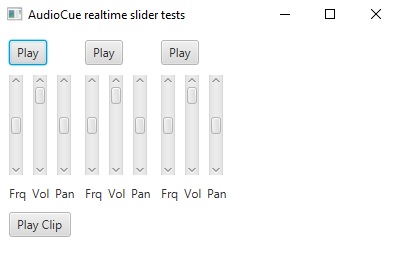

The following jar holds a demonstration of real-time fading: SlidersTest.jar

In this program, three instances from a single AudioCue can sound at the same time, and sliders control the pitch, volume, and panning of each instance in real time. A button at the bottom plays the same wav file as a Clip, for comparison. I invite you to compare clarity and latency.

In the source code, you will be able to see how the three instances are managed via int handles.
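The bookkeeping behind int handles can be sketched as a small pool: a caller reserves a free instance, receives its index, uses that index to route slider changes to that one instance, and hands it back when done. The class `InstancePool` and its methods are hypothetical and only illustrate the idea; consult the SlidersTest source for the calls AudioCue actually provides.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical bookkeeping for int instance handles (not AudioCue's API).
class InstancePool {
    private final Deque<Integer> free = new ArrayDeque<>();
    private final double[] volume; // per-instance setting, indexed by handle

    InstancePool(int polyphony) {
        volume = new double[polyphony];
        for (int h = 0; h < polyphony; h++) free.add(h);
    }

    /** Reserves an instance; returns its handle, or -1 if all are in use. */
    synchronized int obtain() {
        Integer h = free.poll();
        return h == null ? -1 : h;
    }

    /** Returns a handle to the pool; rejects unknown or already-free handles. */
    synchronized void release(int handle) {
        if (handle < 0 || handle >= volume.length || free.contains(handle))
            throw new IllegalArgumentException("bad handle " + handle);
        free.add(handle);
    }

    /** A slider callback would route its value to one instance via its handle. */
    synchronized void setVolume(int handle, double v) {
        volume[handle] = Math.max(0, Math.min(1, v)); // clamp to 0..1
    }

    synchronized double getVolume(int handle) { return volume[handle]; }
}
```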

A couple of considerations I should not omit:

One way in which Clip is superior to AudioCue is that it can handle a broad range of audio formats. AudioCue only has the standard “CD Quality” format (44,100 Hz, 16-bit, stereo) enabled.

Why didn’t I support more formats?

For a couple of reasons. One is that I have yet to find a way to implement it without increasing latency, adding to processing demands, or creating considerable additional coding complexity. Another is that converting audio files to this format is a straightforward task with a tool such as Audacity.
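Since AudioCue expects this one format, it can be worth checking assets before loading them. In `javax.sound.sampled` terms, CD quality is `new AudioFormat(44_100f, 16, 2, true, false)`: 44.1 kHz, 16-bit, two channels, signed, little-endian (the byte order WAV files use). The sketch below compares a file's format against that using `AudioFormat.matches`; the class name `CdFormatCheck` is hypothetical, and the little-endian requirement is an assumption based on WAV assets.

```java
import java.io.IOException;
import java.net.URL;
import javax.sound.sampled.AudioFormat;
import javax.sound.sampled.AudioSystem;
import javax.sound.sampled.UnsupportedAudioFileException;

// Hypothetical helper: does an asset use CD-quality PCM
// (44.1 kHz, 16-bit, stereo, signed, little-endian)?
class CdFormatCheck {
    static final AudioFormat CD_QUALITY = new AudioFormat(44_100f, 16, 2, true, false);

    static boolean isCdQuality(AudioFormat format) {
        return CD_QUALITY.matches(format);
    }

    static boolean isCdQuality(URL asset) throws IOException, UnsupportedAudioFileException {
        return isCdQuality(AudioSystem.getAudioFileFormat(asset).getFormat());
    }
}
```

A file that fails this check is a candidate for conversion in Audacity before it is handed to AudioCue.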

As for compression, I didn’t want to involve any external libraries. But for those who do use compression, there is a path: when decompressing your asset, bring the data to the form of stereo floats with values ranging from -1 to 1, which is a common form for PCM data. AudioCue can take such a float array as a parameter in place of a URL.

I do not know whether the libraries that decompress audio assets offer the option of leaving the data as an array of PCM floats. AFAIK, JOrbis (Ogg/Vorbis) decodes audio into PCM floats before converting them to bytes. For my own purposes, I made a small modification to the JOrbis source to intercept the buffer of decompressed floats before they are converted back to bytes and output via a SourceDataLine. There may already be a publicly available class or method that accomplishes this.
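If a decoder only hands back bytes, converting them to the float form described above is short, assuming the bytes are 16-bit signed, little-endian, interleaved stereo (left, right, left, right): combine each byte pair into a signed 16-bit sample and divide by 32,768. The class and method names below are hypothetical, and the byte layout is an assumption to check against your decoder's output.

```java
// Hypothetical converter: interleaved 16-bit signed little-endian PCM bytes
// -> interleaved floats in [-1, 1), suitable for a float-array cue.
class PcmToFloat {
    static float[] convert(byte[] pcm) {
        if (pcm.length % 4 != 0)           // 2 channels x 2 bytes per stereo frame
            throw new IllegalArgumentException("incomplete stereo frame");
        float[] out = new float[pcm.length / 2];
        for (int i = 0; i < out.length; i++) {
            int lo = pcm[2 * i] & 0xFF;     // low byte, treated as unsigned
            int hi = pcm[2 * i + 1];        // high byte keeps its sign
            out[i] = ((hi << 8) | lo) / 32768f;
        }
        return out;
    }
}
```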

I should point out that there is another good option for makers of 2D games: the TinySound library. I haven’t taken the time to work out a detailed comparison. AudioCue is more about the capabilities of individual cues, whereas TinySound mixes all cues down to a single output line, is easy to use, and has lots of features, such as Ogg/Vorbis support and concurrent playback. Definitely worth checking out as an option.

This code is, to a large extent, my way of giving back to Java-gaming.org for all the help this community has provided me over the years! I hope it proves useful, especially for new game programmers trying out Java.

I’ll do my best to answer questions and to correct the code as the many typos and questionable design and coding choices are pointed out.

Thanks so much. I’ll ask if anything pops up.


It’s the name that should make the most sense to someone coming to your codebase from scratch. That’s unlike javax Mixer, which is badly named, given that most of the mixers don’t actually mix anything.
